WebRTC vs WebSockets: They. Are. Not. The. Same.

Sometimes, there are things that seem obvious once you’re “in the know” but just aren’t when you’re new to the topic. It seems that the difference between WebRTC and WebSockets is one such thing. Philipp Hancke pinged me the other day, asking if I have an article about WebRTC vs WebSockets, and I didn’t – it made no sense to me. At least, not until I asked Google about it:

It seems like Google believes the most pressing (and popular) search for comparisons of WebRTC is between WebRTC and WebSockets. I should probably also write about the other comparisons there, but for now, let’s focus on that first one.

Need to learn WebRTC? Check out my online course – the first module is free.

What are WebSockets?

WebSockets are a bidirectional mechanism for browser communication.

There are two types of transport channels for communication in browsers: HTTP and WebSockets.

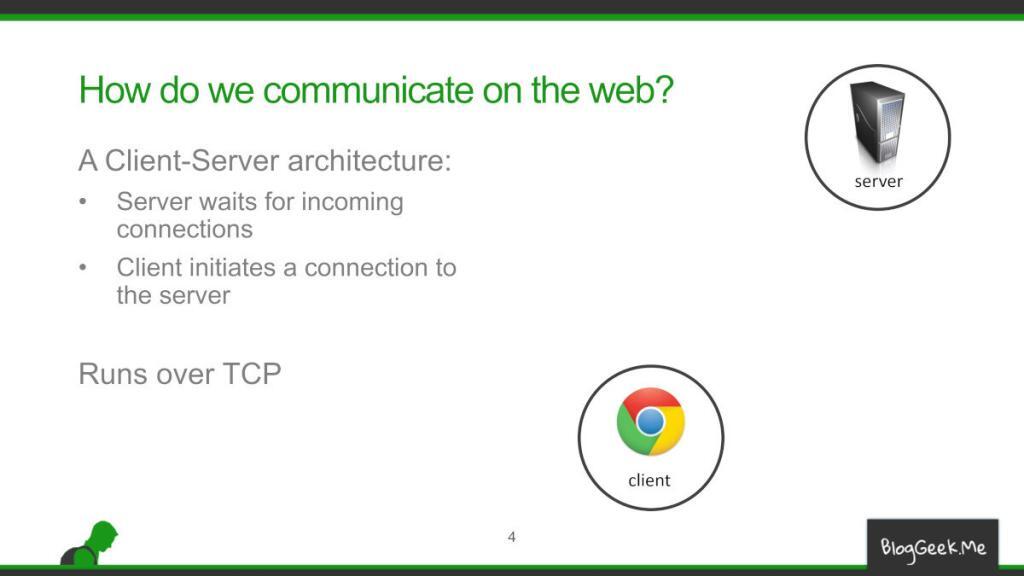

HTTP is what gets used to fetch web pages, images, stylesheets and JavaScript files as well as other resources. In essence, HTTP is a client-server protocol, where the browser is the client and the web server is the server.

My WebRTC course covers this in detail, but suffice to say here that with HTTP, your browser connects to a web server and requests *something* of it. The server then sends a response to that request and that’s the end of it.

The challenge starts when you want to send an unsolicited message from the server to the client. You can’t do it if you don’t send a request from the web browser to the web server, and while you can use different schemes such as XHR and SSE to do that, they end up feeling like hacks or workarounds more than solutions.
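To see why these schemes feel like workarounds, here is a rough sketch of long polling, one common variant. The function name `longPoll`, the injected `fetchFn` and the `/poll` style endpoint are assumptions made for illustration, not part of any specific service:

```javascript
// Long-polling sketch: the client keeps a request open so the server can
// "push" by finally answering it. fetchFn is injected (an assumption) so the
// loop can use the browser's fetch or a stub.
async function longPoll(fetchFn, url, onMessage, shouldContinue) {
  while (shouldContinue()) {
    // The server is expected to hold this request until it has news.
    const response = await fetchFn(url);
    if (response.status === 200) {
      onMessage(await response.text()); // deliver the "pushed" message
    }
    // Any other status (for example 204 on a timeout): simply ask again.
  }
}
```

Every server message costs a full request/response round trip, which is the overhead a WebSocket is meant to remove.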

Enter WebSockets, which are meant to solve exactly that – the web browser connects to the web server by establishing a WebSocket connection. Over that connection, both the browser and the server can send each other unsolicited messages.

Because WebSockets are built for purpose, unlike the alternative XHR/SSE hacks, they perform better both in terms of speed and in the resources they eat up on browsers and servers.

WebSockets are rather simple to use as a web developer – you’ve got a straightforward WebSocket API for them, which is nicely illustrated by HPBN:

    var ws = new WebSocket('wss://example.com/socket');

    ws.onerror = function (error) { ... }
    ws.onclose = function () { ... }

    ws.onopen = function () {
      ws.send("Connection established. Hello server!");
    }

    ws.onmessage = function(msg) {
      if(msg.data instanceof Blob) {
        processBlob(msg.data);
      } else {
        processText(msg.data);
      }
    }

You’ve got calls for send and close and callbacks for onopen, onerror, onclose and onmessage. Of course there’s more to it than that, but this holds the essence of WebSockets.

It leads us to what we usually use WebSockets for, and I’d like to explain it this time not by actual scenarios and use cases but rather by the keywords I’ve seen associated with WebSockets:

- Bi-directional, full-duplex

- Signaling

- Real-time data transfer

- Low latency

- Interactive

- High performance

- Chat, two way conversation

Funnily, a lot of this sometimes gets associated with WebRTC as well, which might be the cause of the comparison that is made between the two.

WebRTC, in the context of WebSockets

There are numerous articles here about WebRTC, including a What is WebRTC one.

In the context of WebRTC vs WebSockets, WebRTC enables sending arbitrary data across browsers without the need to relay that data through a server (most of the time). That data can be voice, video or just data.

Here’s where things get interesting –

WebRTC has no signaling channel

When starting a WebRTC session, you need to negotiate the capabilities for the session and the connection itself. That is done outside the scope of WebRTC, by whatever means you deem fit. And in a browser, this can either be HTTP or… WebSocket.

So from this point of view, WebSocket isn’t a replacement for WebRTC but rather complementary – as an enabler.
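As a rough illustration of a WebSocket acting as that enabler, the sketch below wires a peer connection to a signaling transport. The function name `attachSignaling` and the `{ type, ... }` JSON envelope are assumptions made up for this example; WebRTC does not define a signaling message format, so your application picks its own:

```javascript
// Wire a peer connection to a signaling transport. Both objects are passed in,
// so in a browser `pc` can be an RTCPeerConnection and `socket` a WebSocket.
// The message envelope is an assumption, not something WebRTC specifies.
function attachSignaling(pc, socket) {
  // Forward locally gathered ICE candidates to the remote peer.
  pc.onicecandidate = (event) => {
    if (event.candidate) {
      socket.send(JSON.stringify({ type: "candidate", candidate: event.candidate }));
    }
  };

  // Apply whatever the remote peer sends back through the server.
  socket.onmessage = async (msg) => {
    const signal = JSON.parse(msg.data);
    if (signal.type === "offer") {
      await pc.setRemoteDescription({ type: "offer", sdp: signal.sdp });
      const answer = await pc.createAnswer();
      await pc.setLocalDescription(answer);
      socket.send(JSON.stringify({ type: "answer", sdp: answer.sdp }));
    } else if (signal.type === "answer") {
      await pc.setRemoteDescription({ type: "answer", sdp: signal.sdp });
    } else if (signal.type === "candidate") {
      await pc.addIceCandidate(signal.candidate);
    }
  };
}
```

The server in the middle only relays these small JSON messages between the two browsers; the media or data itself flows over WebRTC once the connection is up.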

You can send media over a WebSocket

Sort of.

I’ll start with an example. If you want to connect to a cloud based speech to text API and you happen to use IBM Watson, then you can use its WebSocket interface. The first sentence in the first paragraph of the documentation?

The WebSocket interface of the Speech to Text service is the most natural way for a client to interact with the service.

So, you stream the speech (=voice) over a WebSocket to connect it to the cloud API service.
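A minimal sketch of what the sending side of such a stream could look like is below. It is generic and not the API of any particular speech service; the function name `streamChunks`, the 64 KiB threshold and the 10 ms wait are arbitrary assumptions:

```javascript
// Send audio chunks as binary WebSocket frames, pausing while the socket's
// send buffer is full. `socket` is anything WebSocket-like.
const OPEN = 1; // numeric value of WebSocket.OPEN
const MAX_BUFFERED_BYTES = 64 * 1024; // assumed threshold

async function streamChunks(socket, chunks) {
  let sent = 0;
  for (const chunk of chunks) {
    // Crude backpressure: wait until the browser has flushed earlier frames.
    while (socket.readyState === OPEN && socket.bufferedAmount > MAX_BUFFERED_BYTES) {
      await new Promise((resolve) => setTimeout(resolve, 10));
    }
    if (socket.readyState !== OPEN) break; // connection went away
    socket.send(chunk); // an ArrayBuffer or typed array goes out as a binary frame
    sent += 1;
  }
  return sent; // number of chunks actually handed to the socket
}
```

Because a WebSocket runs over TCP, a lost packet delays everything queued behind it, which is one reason this approach suits one-way uploads to an API better than live conversations.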

That said, a WebSocket is highly unlikely to be used for media in other scenarios.

In most cases, real time media will get sent over WebRTC or other protocols such as RTSP, RTMP, HLS, etc.

WebRTC’s data channel

WebRTC has a data channel. It has many different uses. In some cases, it is used in place of a WebSocket connection:

The illustration above shows how a message would pass from one browser to another over a WebSocket versus doing the same over a WebRTC data channel. Each has its advantages and challenges.

Funnily, the data channel in WebRTC shares a similar set of APIs to the WebSocket ones:

    const peerConnection = new RTCPeerConnection();

    const dataChannel =
      peerConnection.createDataChannel("myLabel", dataChannelOptions);

    dataChannel.onerror = (error) => { … };
    dataChannel.onclose = () => { … };

    dataChannel.onopen = () => {
      dataChannel.send("Hello World!");
    };

    dataChannel.onmessage = (event) => { … };

Again, we’ve got calls for send and close and callbacks for onopen, onerror, onclose and onmessage.

This makes an awful lot of sense but can be a bit confusing.

There’s this one tiny detail – to get the data channel working, you first need to negotiate the connection. And that you do either with HTTP or with a WebSocket.
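Here is a hedged sketch of that negotiation from the calling side. `openDataChannel` and the `sendSignal` callback are hypothetical names; `sendSignal` stands in for whatever transport you use, for example `(msg) => ws.send(JSON.stringify(msg))`:

```javascript
// Caller side: create the data channel, then hand the offer to whatever
// signaling transport is in use. `sendSignal` is a hypothetical callback.
async function openDataChannel(pc, sendSignal, label = "chat") {
  // Create the channel first so that the offer's SDP advertises it.
  const channel = pc.createDataChannel(label);
  const offer = await pc.createOffer();
  await pc.setLocalDescription(offer);
  // This message travels over HTTP or a WebSocket, not over WebRTC.
  sendSignal({ type: "offer", sdp: offer.sdp });
  // onopen fires only after the remote answer has been applied and the
  // connection is established.
  return channel;
}
```

Nothing can be sent on the returned channel until the other side has answered through that same signaling path.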

When should you use WebRTC instead of a WebSocket?

Almost never. That’s the truth.

If you’re deciding between the two and you don’t know a lot about WebRTC, then you probably need WebSockets, or will be better off using them.

I’d think of data channels either when there are things you want to pass directly across browsers without any server intervention in the message itself (and these use cases are quite scarce), or you are in need of a low latency messaging solution across browsers where a relay via a WebSocket will be too time consuming.

Other quick questions about WebSockets and WebRTC

What is the difference between WebSockets and WebRTC?

While both are commonly grouped under the HTML5 umbrella, WebSockets are meant to enable bidirectional communication between a browser and a web server and WebRTC is meant to offer real time communication between browsers (predominantly voice and video communications).

There are a few areas where WebRTC can be said to replace WebSockets, but these aren’t too common.

Does WebRTC use WebSockets?

Yes and no.

WebRTC doesn’t use WebSockets. It has its own set of protocols including SRTP, TURN, STUN, DTLS, SCTP, …

The thing is that WebRTC has no signaling of its own and this is necessary in order to open a WebRTC peer connection. This is achieved by using other transport protocols such as HTTPS or secure WebSockets. In that regard, WebSockets are widely used in WebRTC applications.

Can WebRTC replace WebSockets?

No.

To connect a WebRTC data channel you first need to signal the connection between the two browsers. To do that, you need them to communicate through a web server in some way. This is achieved by using a secure WebSocket or HTTPS. So WebRTC can’t really replace WebSockets.

Now, once the connection is established between the two peers over WebRTC, you can start sending your messages directly over the WebRTC data channel instead of routing these messages through a server. In a way, this replaces the need for WebSockets at this stage of the communications. It enables lower latency and higher privacy since the web server is no longer involved in the communication.


They’re quite different in the way they work but basically:

* WebSockets were built for sending data in real time between the client and server. WebSockets can easily accommodate media.

* WebRTC was built for sending media peer 2 peer between 2 clients. It can accommodate data.

In some rather specific use cases you could use both, that’s where knowing how they work and what the differences are matters.

Thanks for providing the TL;DR version 😉

Nice post Tsahi; we all get asked these sorts of things in the WebRTC world. Right now the biggest issue with DataChannel is that it needs the “set up” just like WebRTC a/v does which requires a signaling mechanism; the old chicken before the egg scenario. It would be nice if all browsers supported DataChannel in a similar way or at all as well, but I guess we’ll get there someday. For now, I’ll stick with WebSockets…

Paul,

It isn’t an either-or thing. I don’t think there’s much room for the data channel in the broadcasting use cases that you have, and with the coming of QUIC into the game, it won’t be needed for low latency delivery between client and server either. It has its place for direct browser to browser communications.

It’s worth mentioning that Zoom actually sends streaming data using WebSockets and not WebRTC.

Thanks. It does that strictly in Chrome. Not sure that’s what they’re doing inside their native app, which is 99.9% of their users.

Thanks Tsahi for the post. I have tried WebRTC for video streaming and it has worked well. But the issue with WebRTC is that it has problems in an enterprise/corporate setup.

WebRTC uses UDP ports between endpoints for the media transfer (datapath). In many enterprises, the outgoing UDP ports are also closed. So the only way that looks feasible to me to transmit media is through HTTP using standard ports (8080 or 443).

* Is there a way in WebRTC to work around this scenario?

* Do you know of any alternatives?

regards

Vijay

Vijay,

WebRTC uses whatever it can to get connected. This can end up as TCP and TLS over a TURN relay connection. I am in the process of creating a new mini video series on this topic, planning to publish it during July.

I’d suggest you also take a look at my WebRTC course if you are after an in-depth understanding of WebRTC, how to architect your service and what you can and can’t do with WebRTC.
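The TCP/TLS fallback mentioned in this reply is driven by the ICE servers you hand to the peer connection. A minimal sketch, assuming a hypothetical TURN host and credentials (the name `buildIceConfig` is also made up for this example):

```javascript
// Build an RTCPeerConnection configuration that offers both a UDP TURN
// candidate and a TLS-over-TCP one on port 443. Host, username and credential
// are placeholders (assumptions), not a real service.
function buildIceConfig(turnHost, username, credential) {
  return {
    iceServers: [
      {
        urls: [
          `turn:${turnHost}:3478?transport=udp`, // preferred when UDP is allowed
          `turns:${turnHost}:443?transport=tcp`, // TURN over TLS, looks like HTTPS traffic
        ],
        username,
        credential,
      },
    ],
    // iceTransportPolicy is left at its default ("all"), so ICE still tries
    // direct paths first and only relays when it has to.
  };
}
```

In a browser this would be used as `new RTCPeerConnection(buildIceConfig("turn.example.com", "user", "secret"))`. Whether the relay path works in a given enterprise still depends on how its firewall or proxy treats traffic on port 443.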

Are these 2 methods packet based, like UDP? It looks like it, based on that “onmessage” API. Also, are packets reliable or unreliable?

They are both packet based in the sense that they packetize the messages sent through them (WebSockets and WebRTC’s data channel).

UDP isn’t really packet based. It sends out datagrams, which are then packetized per datagram (or something similar).

WebSockets and WebRTC are of a higher level abstraction than UDP. Whatever they use under the hood shouldn’t concern you much since the packetization of messages is something they do for you (with or without the help of the lower layers).

As for reliability, WebSockets are reliable. WebRTC data channels can be either reliable or unreliable, depending on your decision.
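That decision is made when the data channel is created, through the options object passed to `createDataChannel()`. A small sketch follows; the helper `dataChannelOptions` and its mode names are made up for illustration, while `ordered`, `maxRetransmits` and `maxPacketLifeTime` are the standard option fields:

```javascript
// Map a delivery mode to options for RTCPeerConnection.createDataChannel().
// The mode names are assumptions for this sketch.
function dataChannelOptions(mode) {
  switch (mode) {
    case "reliable":
      // The default behaviour: retransmit until delivered, in order.
      return { ordered: true };
    case "unreliable":
      // UDP-like: no retransmissions and no ordering guarantee.
      return { ordered: false, maxRetransmits: 0 };
    case "timed":
      // Stop retransmitting a message after roughly 100 ms.
      return { ordered: false, maxPacketLifeTime: 100 };
    default:
      throw new Error(`unknown mode: ${mode}`);
  }
}
```

For example, `pc.createDataChannel("game", dataChannelOptions("unreliable"))` would suit state updates where a late message is worthless. Note that `maxRetransmits` and `maxPacketLifeTime` cannot both be set on the same channel.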

How does it works with 2way streaming ..

interactive streams

Open And close functions ..??

Bernd, not sure I understand the questions… can you be more specific, or more descriptive please?

Hi,

Recently I saw a tutorial for ESP32+OV7670 which sends video data to a smartphone or other mobile device using a WebSocket.

It seems that in this case a WebSocket can be used instead of WebRTC?!

Thanks

Not sure I follow…

thanks for the page, it helped clarify things for me.

Hi,

Thanks for the post.

Does it make sense to use WebRTC as a replacement for WebSocket when the server is behind a NAT and you don’t want the user to touch the router?

Imagine a use case where you have many embedded devices distributed across many customers (typically behind a NAT).

E.g. a security camera. You want to give remote control through the web (on mobile) to the devices.

The device acts as the server of data, but as a peer of a WebRTC connection to the user’s browser.

Does it make sense to use WebRTC here to traverse the NAT?

Thanks.

Salvador,

Yes. That’s where a WebRTC data channel would shine.

I wouldn’t view this as a WebSocket replacement simply because WebSocket won’t be a viable alternative here (at least not directly).

So basically, when we want an intermediary server in the middle of the 2 clients we use WebSockets, or else WebRTC. And as far as I know, we only need a server in the middle if we want to make the chat permanent by storing it in the database; if we don’t want it to be permanent, then we could use WebRTC, as it doesn’t involve a server in the middle (and this server would incur extra costs and latency). Also, WebRTC uses UDP, which being lighter than TCP will make it even faster.

To send data over WebRTC’s data channel you first need to open a WebRTC connection. You do that (usually) by opening and using a WebSocket.

So I ask you this – if you already spent the time, effort and energy to open that WebSocket and send data over it – does your use case truly need the benefits of WebRTC’s data channel? If the answer is yes (truly yes) then go do it. Otherwise, just stick with your WebSocket.