WebRTC simulcast and ABR are both about offering choice to “viewers”.

Recently, I’ve been dealing with more clients who are looking to create live broadcast experiences: solutions where one or more users broadcast their streams from a single session to a large audience.

“Large” is a somewhat loose target, stretching anywhere from 100 to 1,000,000 viewers. And yes, most of these clients want viewers to have near-instant access to the stream(s) – a lag of 1-2 seconds at most, as opposed to the 10 or more seconds of latency you get with HLS.


What I started seeing more and more recently are solutions that make use of ABR. What’s ABR? It is just like simulcast, but… different.

What’s Simulcast?

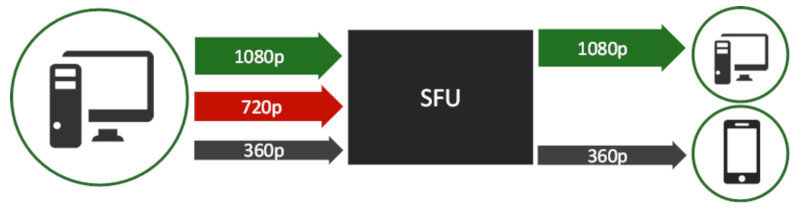

Simulcast is a mechanism in WebRTC by which a device/client/user sends multiple encodings of the same video, each at a different bitrate. If you want to dig deeper, there is a longform explanation of what simulcast is and what it is good for here.

With simulcast, a WebRTC client generates these multiple bitrates, each offering a different video quality – the higher the bitrate, the higher the quality.

These video streams are then received by the SFU, and the SFU can pick and choose which stream to send to which participant/viewer. This decision is usually made based on the available bandwidth, but it can (and should) make use of a lot of other factors as well – display size and video layout on the viewer device, CPU utilization of the viewer, etc.
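To make that decision concrete, here is a minimal Python sketch of per-viewer layer selection in an SFU. It assumes three layers with the hypothetical labels `q`, `h` and `f`, made-up bitrates and resolutions, and a hypothetical `cpu_constrained` flag per viewer. A real SFU gets its bandwidth estimate from congestion control feedback and would likely also smooth its decisions so it does not flap between layers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Layer:
    rid: str           # simulcast layer label (hypothetical values below)
    bitrate_kbps: int  # target bitrate of this layer
    height: int        # encoded frame height in pixels


@dataclass(frozen=True)
class Viewer:
    bandwidth_kbps: int    # downlink bandwidth estimate for this viewer
    display_height: int    # height of this viewer's video tile in pixels
    cpu_constrained: bool  # hypothetical signal that the viewer's CPU is struggling


def select_layer(layers: list[Layer], viewer: Viewer) -> Layer:
    """Pick which simulcast layer the SFU forwards to one viewer."""
    ordered = sorted(layers, key=lambda layer: layer.bitrate_kbps)
    if viewer.cpu_constrained:
        # Assumption: cap struggling devices at the two lowest layers.
        ordered = ordered[:2]
    chosen = ordered[0]  # always fall back to the lowest layer
    for layer in ordered[1:]:
        if layer.bitrate_kbps > viewer.bandwidth_kbps:
            break  # this layer (and every higher one) does not fit
        chosen = layer
        if layer.height >= viewer.display_height:
            break  # already fills the tile; higher layers add nothing visible
    return chosen


LAYERS = [Layer("q", 150, 180), Layer("h", 500, 360), Layer("f", 1500, 720)]
print(select_layer(LAYERS, Viewer(2000, 720, False)).rid)  # f
print(select_layer(LAYERS, Viewer(2000, 360, False)).rid)  # h
print(select_layer(LAYERS, Viewer(400, 720, False)).rid)   # q
```

Note that the SFU never touches the media itself here: it only chooses which of the client's encodings to forward.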

The great thing about simulcast? The SFU doesn’t work too hard. It just selects what to send where.

What’s ABR?

ABR stands for Adaptive Bitrate Streaming. Don’t ask me why R and not S in the acronym – probably because they didn’t want to mix it up with car brakes. Anyway, ABR comes from streaming, long before WebRTC was introduced into our lives.

With streaming, you’ve got a user watching a recorded (or “live”) video online. The server then streams that media towards the user. What happens if the available bitrate from the server to the user is low? Buffering.

Streaming technology uses TCP, which in turn uses retransmissions. It isn’t designed for real-time, and well… we want to SEE the content and would rather wait a bit than not see it at all.

Today, with 1080p and 4K resolutions, streaming at high quality requires lots and lots of bandwidth. If the network isn’t capable, would users rather wait through buffering, or would it be better to just lower the quality?

Most prefer lowering the quality.

But how do you do that with “static” content? A pre-recorded video file is what it is.

You use ABR:

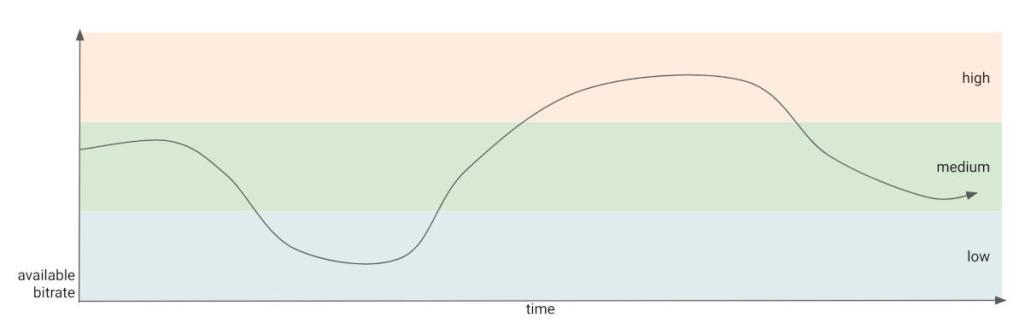

With ABR, you split the available bandwidth into ranges. Each range receives a different media stream, and each such stream has a different bitrate.

Say you have a media stream of 300kbps – you define its bandwidth range as 300-500kbps. Why? Because from 500kbps upward, another (higher bitrate) media stream is available.

These media streams all contain the same content, just in different bitrates, denoting different quality levels. What you try to do is send each viewer the highest quality stream their bandwidth can sustain without getting into that dreaded buffering state. Since the available bitrate is dynamic in nature (as the illustration above shows), you can end up switching across media streams based on the bitrate available to the viewer at any given point in time. That’s why they call it adaptive.
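A minimal Python sketch of that logic, assuming a hypothetical four-rendition ladder (300, 500, 1200 and 2500kbps) and a made-up bandwidth trace for a single viewer. Each rendition serves the range from its own bitrate up to the next rendition's bitrate, as in the 300-500kbps example, and re-picking on every bandwidth sample is what makes it adaptive.

```python
import bisect

# Hypothetical ABR ladder: bitrates (kbps) of the renditions the server offers.
LADDER_KBPS = [300, 500, 1200, 2500]


def pick_rendition(ladder_kbps, available_kbps):
    """Return the rendition whose range contains available_kbps.

    Rendition i serves [ladder[i], ladder[i+1]); the top one is open-ended.
    Below the lowest rung we still send the lowest rendition (and risk buffering).
    """
    rungs = sorted(ladder_kbps)
    i = bisect.bisect_right(rungs, available_kbps) - 1
    return rungs[max(i, 0)]


def adapt(ladder_kbps, bandwidth_trace_kbps):
    """Re-pick the rendition at every bandwidth sample for one viewer."""
    return [pick_rendition(ladder_kbps, bw) for bw in bandwidth_trace_kbps]


# Made-up bandwidth samples for one viewer over time, in kbps.
trace = [450, 900, 1300, 2800, 700, 250]
print(adapt(LADDER_KBPS, trace))  # [300, 500, 1200, 2500, 500, 300]
```

This picks purely by range. Real players typically add a safety margin and some hysteresis so a viewer sitting near a boundary does not bounce between renditions; both are left out here to keep the range logic visible.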

This sounds rather similar to simulcast… just on the server side. ABR is something a server generates: the original media reaches a server, which creates multiple output streams from it at different bitrates, to use when needed.

The ABR challenge for WebRTC media servers

Recently, I’ve seen more discussions and solutions looking at using ABR and similar techniques with WebRTC, mainly to scale a session beyond 10k viewers and to support low latency broadcasting in CDNs.

Why these two areas?

- Because beyond 10k viewers, simulcast isn’t enough anymore. Simulcast today supports up to 3 media streams, and the variety of network conditions and devices you get across 10k viewers is far wider than that. There are a few other reasons as well, but that’s for another time.

- Because CDNs and video streaming have been comfortable with ABR for years now, so as they shift towards WebRTC or low latency, they are looking for much the same technologies and mechanisms they already know.
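A rough way to see why three layers run out: give each viewer in a spread of viewers the highest option that fits their bandwidth, and measure how much of that bandwidth goes unused. The sketch below compares three simulcast layers with a six-rung ladder. All bitrates and viewer bandwidths are hypothetical, and “unused bandwidth” is only a crude proxy for the quality those viewers miss out on.

```python
import bisect
import statistics


def delivered_kbps(options_kbps, bandwidth_kbps):
    """Highest option that fits the viewer's bandwidth (the lowest if none fits)."""
    rungs = sorted(options_kbps)
    i = bisect.bisect_right(rungs, bandwidth_kbps) - 1
    return rungs[max(i, 0)]


def mean_unused_kbps(options_kbps, viewers_kbps):
    """Average bandwidth per viewer that the available options leave unused."""
    return statistics.mean(
        max(bw - delivered_kbps(options_kbps, bw), 0) for bw in viewers_kbps
    )


SIMULCAST_3 = [150, 500, 1500]                     # three simulcast layers
LADDER_6 = [150, 300, 500, 900, 1500, 2500]        # a denser ABR-style ladder
viewers = [200, 400, 700, 1000, 1400, 2000, 3000]  # made-up viewer bandwidths

print(round(mean_unused_kbps(SIMULCAST_3, viewers)))  # 557
print(round(mean_unused_kbps(LADDER_6, viewers)))     # 279
```

The more spread out the audience, the more the gaps between a handful of fixed layers show up.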

But here’s the problem.

We’ve been doing SFUs with WebRTC for most of the time that WebRTC has existed: around 7-8 years. We’re all quite comfortable now with the concept of paying for bandwidth and not eating too much CPU – which is the performance profile of an SFU.

WebRTC Simulcast fits right in

Simulcast fits right into that philosophy – the one creating the alternate streams is the client and not the SFU. The client sends more media towards the SFU, which now has more options, and the client pays the price of higher bitrates and higher CPU use.

ABR places that burden on the server, which needs to generate the alternate streams on its own, and needs to do so in real time – there’s no offline pre-processing step that generates these streams from a pre-existing media file, as there is with CDNs. This means that SFUs now need to think about CPU loads, muck around with transcoding, experiment with GPU acceleration – the works. These are things they haven’t had to do so far.
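A back-of-the-envelope Python sketch of where the work lands for a single broadcaster. It assumes the same hypothetical three bitrates in both cases, and assumes that with ABR the broadcaster uploads only the top quality, which the server decodes once, forwards untouched, and re-encodes into each lower rendition.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Cost:
    client_uplink_kbps: int  # bitrate the broadcaster uploads
    client_encodes: int      # encodings the broadcaster runs in real time
    server_decodes: int      # streams the server must decode
    server_encodes: int      # renditions the server must encode in real time


def simulcast_cost(layers_kbps):
    # The client encodes and uploads every layer; the SFU only forwards packets.
    return Cost(sum(layers_kbps), len(layers_kbps), 0, 0)


def abr_cost(layers_kbps):
    # Assumption: the client uploads only the top quality; the server decodes it
    # once, forwards it untouched, and re-encodes every lower rendition itself.
    return Cost(max(layers_kbps), 1, 1, len(layers_kbps) - 1)


LAYERS_KBPS = [150, 500, 1500]  # hypothetical bitrates, same in both cases
print(simulcast_cost(LAYERS_KBPS))
print(abr_cost(LAYERS_KBPS))
```

With these numbers, simulcast costs the client 2150kbps of uplink and three encodes while the server does no media processing at all. ABR cuts the client down to 1500kbps and a single encode, but the server now decodes once and encodes twice for every broadcaster, which is where the CPU and GPU planning comes in.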

Is this in our future? Sure it is. For some, it is already their present.


What’s next?

WebRTC is growing and evolving. The ecosystem around it is becoming much richer as time goes by. Today, you can find different media servers of different types and characteristics, and the solutions available are quite different from one another.

If you are planning on developing your own application using a media server, make sure you pick a media server that fits your use case.

If you want to dig deeper and learn more about WebRTC (and simulcast) then check out my online WebRTC courses.

In the case of video on demand or static video streaming, the server relies on triggers such as the user switching the video quality, or the user clicking on a video segment that the server has not sent yet, which forces the entire process to restart (for example, sending a new key frame to match the new requirements). I guess such challenges won’t be there when you talk about ABR in WebRTC servers, or any other streaming servers, as they would rely more on bandwidth estimation and send the streams accordingly. Does that make it easier to do ABR in WebRTC? Thoughts?

With VOD or static video streaming it is slightly different – today much of the effort there is also about the network quality of the viewer, since you don’t want them to experience buffering incidents if you can help it. That’s why ABR is employed: static content is generated in multiple bitrates, in small chunks (usually 2 seconds or so).

Theoretically, you can try to do the same for WebRTC as an ABR solution, deciding on “chunk” sizes or defining these chunks dynamically while looking across all your viewer population.
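One way to read that, treating the “chunks” as the bitrate ranges of the ladder: periodically look at the bandwidth estimates of everyone currently watching and place rendition bitrates at quantiles of that distribution. This is a minimal sketch under illustrative assumptions, not something any particular media server does; the quantile split, the 0.8 safety factor and the sample viewer numbers are all hypothetical.

```python
import statistics


def ladder_from_viewers(viewer_kbps, rungs=4, safety=0.8):
    """Derive ABR rendition bitrates from the current viewer population.

    The viewers' bandwidth estimates are split into `rungs` roughly equal-sized
    groups by quantiles. Each rendition sits at the lower edge of its group,
    scaled down by `safety` so viewers at the bottom of the group keep headroom.
    """
    cuts = statistics.quantiles(viewer_kbps, n=rungs)  # rungs - 1 cut points
    edges = [min(viewer_kbps)] + cuts
    return [round(edge * safety) for edge in edges]


# Hypothetical snapshot of viewer downlink estimates, in kbps.
viewers = [200, 400, 700, 1000, 1400, 2000, 3000, 5000]
print(ladder_from_viewers(viewers))  # [160, 380, 960, 2200]
```

Recomputing this every so often, and only changing the ladder when it drifts noticeably, would be one way to make it dynamic; keep in mind that every change means the server starts encoding at new targets.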

Because there’s not much in the way of ABR today in WebRTC media servers, doing it there is harder – especially considering that you’ll need to do things dynamically as well and not only offline by creating static files.

Hi Tsahi,

It might be worth reviewing how we implemented ABR for WebRTC using Red5 Pro. https://www.red5pro.com/docs/autoscale/transcoder.html#red5-pro-autoscaling—transcoding-and-abr

We don’t actually transcode at the edge server, instead we use the variants provided by either the encoder hardware directly, or our transcoder node in the deployment. We then just deliver the best stream based on REMB coming over the RTCP channel.

Thanks for sharing this Chris – I’ll definitely take a look!

Thanks very much for your post. But I am still a little bit confused about the ABR algorithm used in WebRTC. As far as I know, WebRTC implements Google Congestion Control (GCC), which couples the transport protocol and the video codec. I think GCC can be treated as an ABR algorithm to some extent. So my question is: is there any need to implement an application-level ABR/simulcast algorithm on top of WebRTC?

This would be an application level implementation.