As many as you like. You can cram anywhere from one to a million users into a WebRTC call.

You’ve been asked to create a group video call, and obviously, the technology selected for the project was WebRTC. It is almost the only alternative out there and certainly the one with the best price-performance ratio. Here’s the big question: How many users can we fit into that single group WebRTC call?

Need to understand your WebRTC group calling application backend? Take this free video mini-course on the untold story of WebRTC’s server side.

At least once a week, someone approaches me saying WebRTC is peer-to-peer and asking whether it can be used for larger groups, since the technology might not fit such use cases. Well… WebRTC fits well into larger group calls.

You need to think of WebRTC as a set of technological building blocks that you mix and match as you see fit, and the browser implementation of WebRTC is just one building block.

The most common building block today in WebRTC for supporting group video calls is the SFU (Selective Forwarding Unit): a media router that receives media streams from all participants in a session and decides who to route that media to.

What I want to do in this article is review a few of the aspects and decisions you’ll need to make when creating applications that support large group video sessions using WebRTC.

Analyze the Complexity

The first step in our journey today will be to analyze the complexity of our use case.

With WebRTC, and real time video communications in general, it all boils down to speeds and feeds:

- Speeds – the resolution and bitrate we’re expecting in our service

- Feeds – the stream count of the single session

Let’s start with an example.

Assume you want to run a group calling service for the enterprise. It runs globally. People will join work sessions together. You plan on limiting group sessions to 4 people. I know you want more, but I am trying to keep things simple here for us.

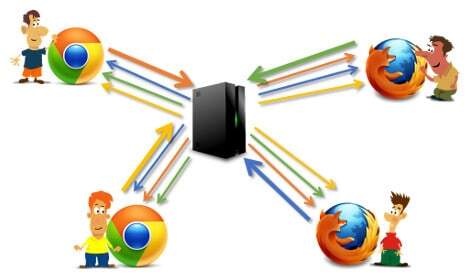

The illustration above shows what a 4-participant conference looks like.

Magic Squares: 720p

If the layout you want for this conference is the magic squares one, we’re in the domain of:

You want high quality video. That’s what everyone wants. So you plan on having all participants send out 720p video resolution, aiming for WQHD monitors (that’s 2560×1440). Say that eats up 1.5Mbps (I am stingy here – it can take more), so:

- Each participant in the session sends out 1.5Mbps and receives 3 streams of 1.5Mbps

- Across 4 participants, the media server needs to receive 6Mbps and send out 18Mbps

Summing it up in a simple table, we get:

| Resolution | 720p |
| --- | --- |
| Bitrate | 1.5Mbps |
| User outgoing | 1.5Mbps (1 stream) |
| User incoming | 4.5Mbps (3 streams) |
| SFU outgoing | 18Mbps (12 streams) |
| SFU incoming | 6Mbps (4 streams) |
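If you want to rerun this arithmetic for other group sizes or bitrates, here is a minimal Python sketch of the calculation. The function name `magic_squares_load` and its parameters are my own naming for illustration. It assumes every participant sends a single stream at the same bitrate and receives everyone else’s stream through the SFU, and it ignores audio and protocol overhead.

```python
def magic_squares_load(participants: int, bitrate_kbps: int) -> dict:
    """Estimate per-user and SFU load when everyone sees everyone else."""
    streams_in_per_user = participants - 1           # you don't receive your own video
    sfu_streams_in = participants                    # one upload per participant
    sfu_streams_out = participants * streams_in_per_user
    return {
        "user_out_kbps": bitrate_kbps,
        "user_in_kbps": streams_in_per_user * bitrate_kbps,
        "sfu_in_kbps": sfu_streams_in * bitrate_kbps,
        "sfu_out_kbps": sfu_streams_out * bitrate_kbps,
        "sfu_streams_in": sfu_streams_in,
        "sfu_streams_out": sfu_streams_out,
    }


# The 4-way call at 1.5Mbps (1500Kbps) per stream
load = magic_squares_load(participants=4, bitrate_kbps=1500)
print(load)
```

Working in Kbps keeps everything in integers, so the results line up with the table without rounding surprises.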

Magic Squares: VGA

If you’re not interested in resolution that much, you can aim for VGA resolution and even limit bitrates to 600Kbps:

| Resolution | VGA |
| --- | --- |
| Bitrate | 600Kbps |
| User outgoing | 0.6Mbps (1 stream) |
| User incoming | 1.8Mbps (3 streams) |
| SFU outgoing | 7.2Mbps (12 streams) |
| SFU incoming | 2.4Mbps (4 streams) |

The thing you may want to avoid when going VGA is the need to upscale the resolution on the display – it can look ugly, especially on the larger 4K displays.

With crude back of the napkin calculations, you can potentially cram 2 to 3 VGA conferences (2.5, going by the numbers above) for the “price” of 1 720p conference.

Hangouts Style

But what if our layout is a bit different? A main speaker and smaller viewports for the other participants:

I call it Hangouts style, because Hangouts is well known for this layout and was one of the first to use it exclusively without offering a larger set of additional layouts.

This time, we will be using simulcast. The plan is to have everyone send out high quality video and let the SFU decide which incoming stream belongs to the dominant speaker, forwarding the higher resolution for that one and the lower resolution for everyone else.

You will be aiming for 720p, because after a few experiments, you decided that lower resolutions when scaled to the larger displays don’t look that good. You end up with this:

- Each participant in the session sends out 2.2Mbps (that’s 1.5Mbps for the 720p stream and the additional 700Kbps for the other resolutions you’ll be simulcasting with it)

- Each participant in the session receives 1.5Mbps from the dominant speaker and 2 additional incoming streams of ~300Kbps for the smaller video windows

- Across 4 participants, the media server needs to receive 8.8Mbps and send out 8.4Mbps

| Resolution | 720p highest (in Simulcast) |
| --- | --- |
| Bitrate | 150Kbps – 1.5Mbps |
| User outgoing | 2.2Mbps (1 stream) |
| User incoming | 1.5Mbps (1 stream), plus 0.3Mbps each (2 streams) |
| SFU outgoing | 8.4Mbps (12 streams) |
| SFU incoming | 8.8Mbps (4 streams) |
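The same numbers can be reproduced with a short Python sketch. The function `hangouts_style_load` and its parameter names are hypothetical, made up for this illustration. It assumes each participant uploads the high layer plus the extra simulcast layers, and downloads one high quality stream for the dominant speaker plus one low quality stream for each remaining participant.

```python
def hangouts_style_load(participants: int, high_kbps: int, low_kbps: int,
                        simulcast_extra_kbps: int) -> dict:
    """Estimate load for a dominant-speaker layout that uses simulcast."""
    user_out = high_kbps + simulcast_extra_kbps     # high layer + the lower layers
    thumbnails = participants - 2                   # everyone but you and the main speaker
    user_in = high_kbps + thumbnails * low_kbps
    return {
        "user_out_kbps": user_out,
        "user_in_kbps": user_in,
        "sfu_in_kbps": participants * user_out,
        "sfu_out_kbps": participants * user_in,
        "sfu_streams_out": participants * (participants - 1),
    }


# 4 participants, 1.5Mbps high layer, ~300Kbps thumbnails, 700Kbps of extra simulcast layers
load = hangouts_style_load(participants=4, high_kbps=1500, low_kbps=300,
                           simulcast_extra_kbps=700)
print(load)
```

Notice how the SFU’s incoming traffic is now higher than its outgoing traffic: simulcast moves cost to the uplink so that the server can pick the cheapest suitable layer per receiver.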

This is what we have learned:

Different use cases of group video with the same number of users translate into different workloads on the media server.

And if it wasn’t mentioned specifically, simulcast works great and improves the effectiveness and quality of group calls (simulcast is what we used in our Hangouts Style meeting).

Across the 3 scenarios we depicted here for a 4-way video call, we got this variety of activity in the SFU:

| | Magic Squares: 720p | Magic Squares: VGA | Hangouts Style |
| --- | --- | --- | --- |
| SFU outgoing | 18Mbps | 7.2Mbps | 8.4Mbps |
| SFU incoming | 6Mbps | 2.4Mbps | 8.8Mbps |

Here’s your homework – now assume we want to do a 2-way session that gets broadcast to 100 people over WebRTC. Now calculate the number of streams and bandwidths you’ll need on the server side.
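Once you have your own answer, you can check it against a small Python sketch like the one below. Everything in it is an assumption on my part: the function name `broadcast_load` is hypothetical, the two speakers each send a single 1.5Mbps stream without simulcast, viewers send nothing, and everyone receives all the speakers they aren’t themselves.

```python
def broadcast_load(speakers: int, viewers: int, bitrate_kbps: int) -> dict:
    """Estimate SFU load for a few active speakers broadcast to passive viewers."""
    streams_in = speakers                               # only speakers upload
    speaker_to_speaker = speakers * (speakers - 1)      # speakers see each other
    speaker_to_viewer = viewers * speakers              # viewers see every speaker
    streams_out = speaker_to_speaker + speaker_to_viewer
    return {
        "sfu_streams_in": streams_in,
        "sfu_streams_out": streams_out,
        "sfu_in_kbps": streams_in * bitrate_kbps,
        "sfu_out_kbps": streams_out * bitrate_kbps,
    }


load = broadcast_load(speakers=2, viewers=100, bitrate_kbps=1500)
print(load)
```

Change the bitrate or add simulcast layers and the totals move accordingly, which is the whole point of the exercise.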

How Many Users Can be Active in a WebRTC Call?

That’s a tough one.

If you use an MCU, you can get as many users on a call as your MCU can handle.

If you are using an SFU, it depends on 3 different parameters:

- The level of sophistication of your media server, along with the performance it has

- The power you’ve got available on the client devices

- The way you’ve architected your infrastructure and worked out cascading

We’re going to review them in a sec.

Same Scenario, Different Implementations

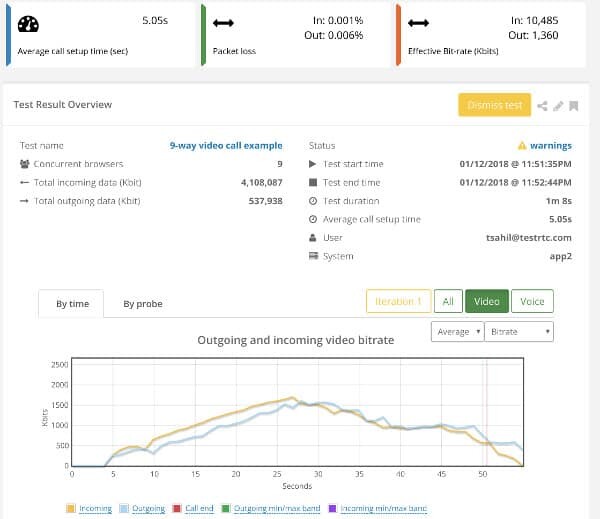

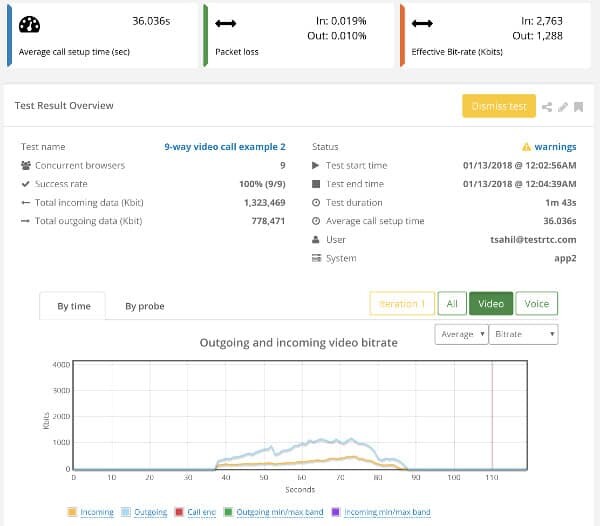

Anything above 8-10 users in a single call becomes complicated. Here’s an example of a publicly available service I want to share here.

The scenario:

- 9 participants in a single session, magic squares layout

- I use testRTC to get the users into the session, so it is all automated

- I run it for a minute. After that, it kills the session since it is a demo

- The service takes into account that there are 9 people on the screen and reduces the resolution for all of them to VGA, but it still allocates 1.3Mbps for that resolution

- This leads to each browser receiving about 10Mbps of data to process

The media server decided here how to limit and gauge traffic.

And here’s another service with an online demo running the exact same scenario:

Now the incoming bitrate on average per browser was only 2.7Mbps – almost a fourth of the other service.

Same scenario. Different implementations.

What About Some Popular Services?

What about some popular services that do video conferencing in an SFU routed model? What kind of size restrictions do they put on their applications?

Here’s what I found browsing around:

- Google Hangouts – up to 25 participants in a single session. It was 10 in the past. When I did my first-ever office hour for my WebRTC training, I maxed out at 10, which got me to start using other services

- Hangouts Meet – placed its maximum number at 50 participants in a single session

- Houseparty – decided on 8 participants

- Skype – 25 participants

- appear.in – their PRO accounts support up to 12 participants in a room

- Amazon Chime – 16 participants on the desktop and up to 8 participants on iOS (no Android support yet)

- Atlassian Stride and Meet Jitsi – 50 participants

Does this mean you can’t get above 50?

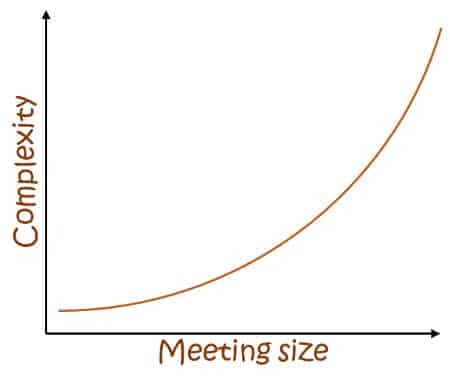

My take on it is that there’s an increasing degree of difficulty as the meeting size increases:

The CPaaS Limit on Size

When you look at CPaaS platforms, those supporting video and group calling often have limits to their meeting size. In most cases, they give out an arbitrary number they have tested against or are comfortable with. As we’ve seen, that number is suitable for a very specific scenario, which might not be the one you are thinking about.

In CPaaS, these numbers vary from 10 participants to hundreds of participants in a single session. Usually, if you can go higher, the additional participants will be view-only.

Key Points to Remember

A few things to keep in mind:

- The larger the group size, the more complicated it is to implement and optimize

- The browser needs to run multiple decoders, which is a burden in itself

- Mobile devices, especially older ones, can be brought to their knees quite quickly in such cases. Test on the oldest, puniest devices you plan on supporting before determining the group size to support

- You can build the SFU in a way that it doesn’t route all incoming media to everyone but rather picks partial data to send out. For example, maybe only a single speaker on the audio channels, or the 4 loudest streams
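To make that last point concrete, here is a minimal Python sketch of the “4 loudest streams” idea. It is not taken from any specific media server. The function `pick_streams_to_forward` and the audio level values are hypothetical, and a real SFU would smooth the levels over time rather than react to a single snapshot.

```python
import heapq
from typing import Dict, List


def pick_streams_to_forward(audio_levels: Dict[str, float], receiver_id: str,
                            max_streams: int = 4) -> List[str]:
    """Return the loudest senders to forward to one receiver, loudest first."""
    # Never send a participant their own media back
    candidates = {pid: level for pid, level in audio_levels.items()
                  if pid != receiver_id}
    return heapq.nlargest(max_streams, candidates, key=candidates.get)


# Hypothetical snapshot of audio levels (0.0 = silent, 1.0 = loudest)
levels = {"alice": 0.9, "bob": 0.1, "carol": 0.5,
          "dave": 0.7, "erin": 0.3, "frank": 0.05}
print(pick_streams_to_forward(levels, receiver_id="alice"))
```

With a cap like this, the number of streams each client has to decode stays fixed no matter how many people join the session.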

Sizing Your Media Server

Sizing media servers is something I have been doing lately at testRTC. We’ve played a bit with Kurento in the past and are planning to tinker with other media servers. I get this question on every other project I am involved with:

How many sessions / users / streams can we cram into a single media server?

Given what we’ve seen above about speeds and feeds, it is safe to say that it really really really depends on what it is that you are doing.

If what you are looking for is group calling where everyone’s active, you should aim for 100-500 participants in total on a single server. The numbers will vary based on the machine you pick for the media server and the bitrates you are planning per stream on average.

If what you are looking for is a broadcast of a single person to a larger audience, all done over WebRTC to maintain low latency, 200-1,000 is probably a better estimate. Maybe even more.

Big Machines or Small Machines?

Another thing you will need to address is which machines you are going to host your media server on. Will they be the biggest, baddest machines available, or will you be comfortable with smaller ones?

Going for big machines means you’ll be able to cram larger audiences and sessions into a single machine, so the complexity of your service will be lower. But if something crashes (media servers do crash), more users will be impacted. And when you need to upgrade your media server (and you will), that process can cost you more or become somewhat more complicated as well.

The bigger the machine, the more cores it will have. Which results in media servers that need to run in multithreaded mode. Which means they are more complicated to build, debug and fix. More moving parts.

Going for small machines means you’ll hit scale problems earlier and they will require algorithms and heuristics that are more elaborate. You’ll have more edge cases in the way you load balance your service.

Scale Based on Streams, Bandwidth or CPU?

How do you decide that your media server achieved full capacity? How do you decide if the next session needs to be crammed into a new machine or another one or be placed on the current media server you’re using? If you use the current one, and new participants want to join a session actively running in this media server, will there be room enough for them?

These aren’t easy questions to answer.

I’ve seen 3 different metrics used to decide when to scale out from a single media server to others. Here are the general alternatives:

Based on CPU – when the CPU hits a certain percentage, it means the machine is “full”. It works best when you use smaller machines, as CPU would be one of the first resources you’ll deplete.

Based on Bandwidth – SFUs eat up lots of networking resources. If you are using bigger machines, you probably won’t hit the CPU limit, but you’ll end up eating too much bandwidth. So you’ll end up determining the capacity available by way of bandwidth monitoring.

Based on Streams – the challenge sometimes with CPU and Bandwidth is that the number of sessions and streams that can be supported may vary, depending on dynamic conditions. Your scaling strategy might not be able to cope with that and you may want more control over the calculations. This leads you to size the machine using either CPU or bandwidth, but to put rules in place that are based on the number of streams the server can support.
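The three alternatives can be sketched as a single “is this server full?” check in Python. The class names, the `is_full` function and every threshold below are hypothetical placeholders. The real limits have to come out of your own sizing tests.

```python
from dataclasses import dataclass


@dataclass
class ServerLoad:
    cpu_percent: float   # current CPU utilization
    egress_mbps: float   # current outgoing bandwidth
    streams: int         # streams currently routed by this server


@dataclass
class ScaleOutLimits:
    max_cpu_percent: float = 70.0    # hypothetical threshold
    max_egress_mbps: float = 800.0   # hypothetical threshold
    max_streams: int = 500           # hypothetical, derived from sizing tests


def is_full(load: ServerLoad, limits: ScaleOutLimits, metric: str) -> bool:
    """Decide whether new sessions should go to another media server."""
    if metric == "cpu":
        return load.cpu_percent >= limits.max_cpu_percent
    if metric == "bandwidth":
        return load.egress_mbps >= limits.max_egress_mbps
    if metric == "streams":
        return load.streams >= limits.max_streams
    raise ValueError(f"unknown metric: {metric}")


# A big machine: plenty of CPU left, but the network is saturated
load = ServerLoad(cpu_percent=35.0, egress_mbps=820.0, streams=410)
limits = ScaleOutLimits()
for metric in ("cpu", "bandwidth", "streams"):
    print(metric, is_full(load, limits, metric))
```

The same server looks half empty by one metric and full by another, which is why picking the metric is a decision in itself.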

–

The challenge here is that whatever scenario you pick, sizing is something you’ll need to be doing on your own. I see many who come to use testRTC when they need to address this problem.

Cascading a Single Session

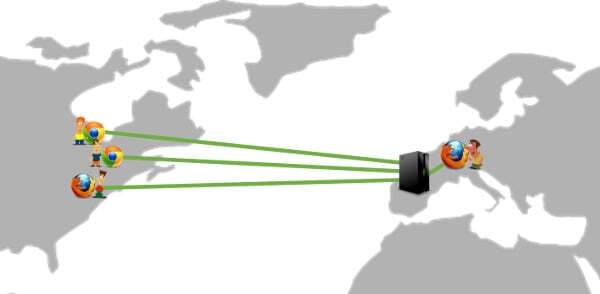

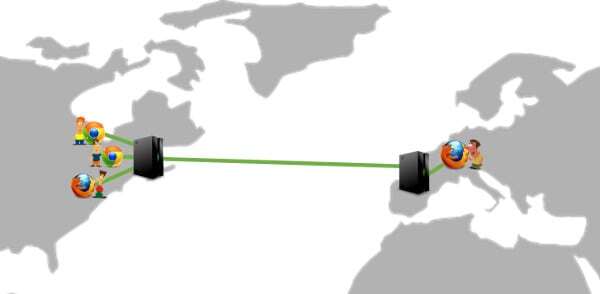

Cascading is the process of connecting one media server to another. The diagram below shows what I mean:

We have a 4-way group video call that is spread across 3 different media servers. The servers route the media between them as needed to get it connected. Why would you want to do this?

#1 – Geographical Distribution

When you run a global service and have SFUs as part of it, the question raised immediately is: for a new session, which SFU will you allocate, and in which data center? Since we want our media servers as close as possible to the users, we either have prior knowledge about the session and know where to allocate it, or we decide by some reasonable means, like geolocation – we pick the data center closest to the user that created the meeting.

Assume 4 people are on a call. 3 of them join from New York, while the 4th person is from France. What happens if the French guy joins first?

The server will be hosted in France. 3 out of 4 people will be located far from the media server. Not the best approach…

One solution is to conduct the meeting by spreading it across servers closest to each of the participants:

We use more server resources to get this session served, but we have a lot more control over the media routes so we can optimize them better. This improves media quality for the session.
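Here is a rough Python sketch of that allocation for the New York and France example. The region names, the round trip times and the function `assign_closest_servers` are all made up for illustration. In practice you might rely on geolocation or on your cloud provider’s routing instead of measured latencies.

```python
from typing import Dict


def assign_closest_servers(rtt_ms_by_participant: Dict[str, Dict[str, int]]) -> Dict[str, str]:
    """Map each participant to the media server region with the lowest round trip time."""
    return {participant: min(rtts, key=rtts.get)
            for participant, rtts in rtt_ms_by_participant.items()}


# Hypothetical round trip times in milliseconds from each participant to each region
measurements = {
    "ny-1": {"us-east": 12, "eu-west": 85},
    "ny-2": {"us-east": 15, "eu-west": 88},
    "ny-3": {"us-east": 11, "eu-west": 90},
    "fr-1": {"us-east": 92, "eu-west": 9},
}

assignment = assign_closest_servers(measurements)
servers_to_cascade = sorted(set(assignment.values()))
print(assignment)
print(servers_to_cascade)  # the servers that need to be connected to each other
```

It no longer matters who joins first: each participant lands on a nearby server, and the long haul across the ocean happens between servers.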

#2 – Fragmented Allocations

Assume that we can connect up to 100 participants in a single media server. Furthermore, every meeting can hold up to 10 participants. Ideally, we won’t want to assign more than 10 meetings per media server.

But what if I told you the average meeting size is 2 participants? It can get us to this type of an allocation:

This causes a lot of wasted server resources. How can we solve that?

- By having people commit in advance to the maximum meeting size. Not something you really want to do

- Taking a risk: only place new meetings on a server until it reaches, say, 50% of its capacity, and leave the rest of the capacity for existing meetings to grow into. You still have wasted resources, but to a lower degree. There will be edge cases where meetings can’t fill up because the server runs out of resources

- Migrating sessions across media servers in an effort to “defragment” the servers. It is as ugly as it sounds, and probably just as disrupting to the users

- Cascade sessions. Allow them to grow across machines

That last one of cascading? You can do that by reserving some of a media server’s resources for cascading existing sessions to other media servers.
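The 50% approach from the list above can be sketched in a few lines of Python. The constants and function names are hypothetical. The 100 participants per server and the 50% threshold simply mirror the example numbers used here.

```python
from typing import List, Optional

SERVER_CAPACITY = 100         # participants per media server, as in the example
NEW_MEETING_THRESHOLD = 0.5   # stop placing new meetings at 50% of capacity


def pick_server_for_new_meeting(used_per_server: List[int]) -> Optional[int]:
    """Return the index of the first server still open to new meetings."""
    for index, used in enumerate(used_per_server):
        if used < SERVER_CAPACITY * NEW_MEETING_THRESHOLD:
            return index
    return None  # every server is past the threshold: time to scale out


def can_grow_existing_meeting(used: int, extra_participants: int = 1) -> bool:
    """Existing meetings may keep growing until the server is really full."""
    return used + extra_participants <= SERVER_CAPACITY


used = [60, 48, 10]
print(pick_server_for_new_meeting(used))    # server chosen for a brand new meeting
print(can_grow_existing_meeting(used[0]))   # server 0 is closed to new meetings but can still grow
```

When `can_grow_existing_meeting` returns False, that is the edge case where you either turn the participant away or cascade the session to another machine.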

#3 – Larger Meetings

Assuming you want to create larger meetings than a single media server can handle, your only choice is to cascade.

If your media server can hold 100 participants and you want meetings at the size of 5,000 participants, then you’ll need to be able to cascade to support them. This isn’t easy, which explains why there aren’t many such solutions available, but it definitely is possible.

Mind you, in such large meetings, the media flow won’t be bidirectional. You’ll have fewer participants sending media and a lot more only receiving media. For the pure broadcasting scenario, I’ve written a guest post on the scaling challenges on Red5 Pro’s blog.
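For a feel of the numbers, here is a back of the napkin Python sketch for the view-only part of such a meeting. It is a simplification under my own assumptions: a single origin server fans out to edge servers, each server handles the same number of outgoing connections, and the few active senders are ignored. The function names are hypothetical.

```python
import math


def edge_servers_needed(viewers: int, per_server_capacity: int) -> int:
    """How many edge servers it takes to serve all the viewers."""
    return math.ceil(viewers / per_server_capacity)


def fits_in_two_tiers(viewers: int, per_server_capacity: int) -> bool:
    """True if one origin server can feed every edge server directly."""
    return edge_servers_needed(viewers, per_server_capacity) <= per_server_capacity


edges = edge_servers_needed(viewers=5000, per_server_capacity=100)
print(edges)                          # edge servers fed by the origin
print(fits_in_two_tiers(5000, 100))   # can a single origin reach all of them?
```

Beyond what two tiers can carry, you need another layer of servers in the middle, and the routing logic gets harder with each layer.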

Recap

We’ve touched a lot of areas here. Here’s what you should do when trying to decide how many users can fit in your WebRTC calls:

- Whatever meeting size you have in mind, it is possible to support with WebRTC

  - It will be a matter of costs and aligning it with your business model that will make or break that one

  - The larger the meeting size, the more complex it will be to get it done right, and the more limitations and assumptions you’ll need to add to the equation

- Analyze the complexity you need to support

  - Count the incoming and outgoing streams to each device and media server

  - Decide on the video quality (resolution and bitrate) for each stream

- Define the media server you’ll be using

  - Select a machine type to run the media server on

  - Figure out the sizing needed before you reach scale out

  - Check if the growth is linear on the server’s resources

  - Decide if you scale out based on bandwidth, CPU, streams count or anything else

- Figure out how cascading fits into the picture

  - Offer with it better geolocation support

  - Assist in resource fragmentation on the cloud infrastructure

  - Or use it to grow meetings beyond a single media server’s capacity

What’s the size of your WebRTC meetings?

Need More?

- If you are thinking of optimizing your application for large meetings, then read this WebRTC CPU requirements article

- Need some more suggestions for optimizing group calls? Check out my eBook: Optimizing Group Video Calling in WebRTC

- More into scaling the whole service? Read this eBook: Best Practices in Scaling WebRTC Deployments

I am using WebRTC to create online audio and video broadcasting without a media server, but my customer is now asking how many listeners will be able to hear and see the broadcast without slowness. I couldn’t find an answer. Am I using the right technology? And if yes, what is the answer to that question?

I guess you should read this one for an answer: https://bloggeek.me/media-server-for-webrtc-broadcast/

I consider making a vod system using mcu of webrtc.

Is it possible to create a vod system using mcu?

If not, please tell me why and why not.

Yoon,

In general, I wouldn’t use WebRTC for a VOD system. VOD suggests non-realtime, so WebRTC would be a hindrance instead of an advantage.

Also note that MCU is really expensive to operate and run, so I’d refrain from going towards that architecture unless there’s a good reason (i.e – I can’t do it in any other way).

Hello, I’m planning to use WebRTC on my website so I can connect with peers in different countries or states.

1. I need to know whether I should build my own server for the business.

2. How do I configure my own server?

3. Which servers do I need to implement so I can use WebRTC?

Right now I only need screen sharing with audio.

Please help me implement this on my website.

Not sure this fits into a comment and the answer definitely won’t.

I have an online course on my site that you can enroll for and there is enough information on the web to get you going. Good luck!

I am planning on buying this LiveSmart Video Chat plugin and the provider recommended this article – which is great + thanks again… My application is 1 to many broadcast everyone active limited to 1 broadcaster and 15 subscribers per stream. There can be 100 streams at anytime. My webhost VODAHOST boasts 6+gb in bandwidth and using the Magic Squares: 720p for 1-8 users and Magic Squares: VGA for 9-16 users (as the application demands that the resolution get smaller after 4 users) I shouldn’t have a problem – right? Thanks again for the article. I just signed up for the course – any comments to this post appreciated in advance!!

Paul,

You should check with your provider what it is they support. Different implementations offer different capabilities.

I am developing an app for live chat and video conferencing, and I am facing quite some difficulty with the implementation: how can we separate users and participants?

Thanks,

Team Shubhbandh

You should probably post this on discuss-webrtc. When you do, make sure to include more information since with what you’ve provided there’s not much to say.

Intending to create a one to many website (1 teacher and 100 students) with only audio, class room style. Is WebRTC suitable for this?

Yes

Hi. Thank you for this amazing tutorial. It helped me a lot.

Recently, we’ve been working on a project that contains:

30 classes that every classes has 25 students and one teacher.

every students sends 128*128 pixel with 5fps video and teacher sends 720p with 24fps video and voice.

Student: (128*128*16bit) or (256*256*8bit)

Teacher: (720*1280*16bit) + (96kbps)

and all 30 classes should work simultaneously.

Could you tell me I can run this media server on a VPS?

As you said in this tutorial I can split every 3 classes and allocate one small VPS to them. But I don’t know how I can pick the VPS’s CPU and RAM.

For example, a VPS with 2 CPU and 2G RAM is good enough?

Ali,

These kinds of things can be tested and validated using https://testrtc.com

I don’t really know the exact answer as each use case and scenario is different and each server configuration is different as well. Only way to know is to conduct tests.

If I have more than 20 users. Can I limit 8 to 9 video streams at a time? And show other streams on the scroll. So is there a way where I can stop remote streams locally and activate again when they are scrolled? And would It be an efficient way to manage the video streams to take up less CPU usage?

Sure you can.

Doing that also means shutting down the connections/streams that aren’t being displayed and reconnecting them as needed.

It is one of a variety of techniques you will need to employ in order to scale a call to a large number of users. I cover these in detail in this ebook: https://webrtccourse.com/product/scale-webrtc-group-call/

Hi Tsahi. Thanks for this information. It’s great!

My question is, we have a videochat webapp for up to 8 people. Is there a way to test the performance of the platform other than having 8 of my friends jump into a video call with me to test it every time we deploy a new version? My friends are getting annoyed with me lol

Thanks a lot!

Sure Abner.

You can automate it using tools like Selenium and other open source frameworks. You can also use testrtc.com (I am the CEO and co-founder) to get as many participants into the videochat/server/service as you want.

Hello Tsahi,

Is there any samples available for android video conferencing with WebRTC?

I am sure there are a few available on github. Jitsi has their mobile app open sourced for example

How can we implement mix-minus (where caller can’t hear his own audio) in a webRTC conferencing for about 8 people.

Use Freeswitch or Asterisk – they do that already.

That’s assuming what you want to do is mix the audio on the server-side.

What about pure p2p with no servers in between? Can the client code on each participant be smart enough to do this realistically? I know there will be more total connections since each pair needs to communicate with each other so it will scale poorly with more participants. But it seems like it would be cool and lowest cost to have a full p2p mesh.

I’m a novice to web streaming/conferencing/webRTC so apologies if this is a silly question.

Realistically, don’t expect p2p to work well above 4 participants.

More realistically, don’t expect p2p to work well above 2-3 participants for many of your users.

The challenge is the CPU and network requirements of P2P which are oftentimes above what real users have available.

Can we add more than 200 users to one call, with users coming from different countries?

Yes, but you’ll need to work hard to make this happen smoothly and nicely in your service.

1) how can i make blur or transparent background in webrtc video call or conference

2) how to show participant list so that on click any participant, view their screen

See https://ai.googleblog.com/2020/10/background-features-in-google-meet.html for background replacement.

As for the 2nd, this is a matter of application logic implementation.

What is ML in this page link, any source code that i can use.

ML stands for machine learning. And doing background replacement is relatively new. You will need to research it in order to use it, as there won’t be an easy ready-made solution out there.

is this ML in javascript,

any tutorial what is exactly ML , how can i create it any helpful tutorial

I am sure you are capable of searching the Internet for answers. This article is focused on scaling a WebRTC call and not in machine learning or troubleshooting video rotation issues.

1) any tutorial for ML

2 )Is ML in javascript

3) When I Fullscreen my video is rotating to 180deg , how to prevent webrtc video from rotate

Hi Tsahi. The post contains a lot of information. Thank you.

I just have one question about the “larger meetings” section. Suppose we have two media servers that split the participants of one conference between them. How can we expand the meeting if each media server still handles the same number of incoming and outgoing streams?

For eg,

Scenario 1: I have media server A which receives all 150 incoming streams from 150 participants => Summary will be 300 streams

Scenario 2: I have media server A which receives 100 incoming streams from 100 participants, and media server B which receives 50 incoming streams from 50 participants

=> Media server A adds 100 outgoing streams to media server B and 50 incoming streams from media server B => Summary will still be 200 + 100 streams

But according to the need of scaling, media server A in scenario 2 must be weaker than the media server A in scenario 1. So how can we achieve larger meetings with two media servers?

Thank you.

In larger meetings you start off by optimizing the client side to be able to conduct that at all.

Showing more than 50 people on the screen is not achievable with WebRTC today using an SFU (I haven’t seen anyone doing that above these numbers and even reaching 50 is challenging).

Handling audio for more participants is possible, but makes use of many optimizations as well.

The need to split a meeting between multiple servers is something I’d do only for large broadcasts or when you want to optimize for geographic spread of users.

Thank you for your response.

I want to refer to large meetings in particular. The point is that the number of incoming and outgoing streams in one media server is still the same in both scenarios. So how can splitting a meeting between multiple servers increase the participant limits? As stated in your article: “If your media server can hold 100 participants and you want meetings at the size of 5,000 participants, then you’ll need to be able to cascade to support them”.

Thank you for supporting me.

In a broadcast, you have a single stream going out to many.

If a server can handle 100 streams, then that single stream can be sent by the server to 100 servers, each managing 100 outgoing streams to a total of 10k. This is a simplification, but that’s the way to think of it.

For a video conference you won’t need more than 50-100 video streams in total. No one else will be seen by others anyway. In such cases, you can decide which of the streams to even send to the servers and route between them – and you are again back to something akin to the broadcast example above.

At times, it might be simpler to multiplex the videos and combine them into a single stream, getting you again to the broadcast scenario.

Hi

Article is very helpful. Thank you for this effort.

I am working on a social native app. I completed private call (P2P) using webrtc “RTCPeerConnection” connection for each user. Now I want to make a group call for this social app. Please guide me which architecture will be good for this scenario. Remember all participants stream should be visible as in WhatsApp group. Participants can be from 5-10.

Will a mesh approach fit, or should I use something else?

Hammad,

You will need a media server. There are many articles on my site and elsewhere to give you guidance.

Hello Tsahi,

I would like to ask for your advice please.

I have built a card game web app for 8 players and lately I was thinking about using WebRTC P2P to get the players watch and talk to each other while playing. So 8 videos that each should be 100X100 pixels big.

Should I use P2P or a media server?

Thanks in advance.

That’s a good question and an edge case…

A media server would garner better results. Especially when devices have less available CPU or bandwidth is scarce.

At such low resolutions, I am tempted to say it would be interesting to see if you can get it to perform well with 8 players in P2P mesh configuration.

Hello Tsahi,

Great post.

I would like to develop a Mafia (Werewolf) game between 10 users. Only in voice and no video. So users speak in turn and only one speaker at a time. Can you please tell me if it is possible to do this using P2P topology and what tricks may I use to optimize it?

Thanks

Ali,

I am not aware of anyone that does 10 users mesh with WebRTC. I do know of vendors who arrived at 6 or 8. 10 is likely possible, though I wouldn’t go there directly given a choice.

Two things that came to mind that you might want to try here:

1. Mute everyone, ending up with something like broadcast each time someone speaks and use WebRTC for this. It may alleviate some of the pains

2. Use MediaRecorder API instead, and then send the data over a data channel or similar to the other participants, adding some delay to the communication itself, but “solving” the quality problems

Also remember that you will still be needing TURN servers for some users on some networks.