There are multiple ways to implement WebRTC multiparty sessions, all built around one of three architectures: mesh, mixing and routing.

In the past few days I’ve been sick to the bone. Fever, headache, cough: the works. I couldn’t do much, which meant no article writing either. Good thing I had to remove an appendix from my upcoming WebRTC API Platforms report to make room for a new one.


Architectures in WebRTC

I wanted to cover the topic of Flow and Embed in Communication APIs, and how they fit into the WebRTC space. That topic will replace the report’s appendix on multiparty architectures in WebRTC, which is what follows here as a copy+paste of that appendix:

Multiparty conferences of either voice or video can be supported in one of three ways:

- Mesh

- Mixing

- Routing

The quality of the solution depends heavily on the type of architecture used. Routing is further refined into three approaches for video: multi-unicast, simulcast and SVC.

WebRTC API Platform vendors who offer multiparty conferencing implement it in different ways. If you need multiparty calling, make sure you know which architecture the vendor you choose uses.

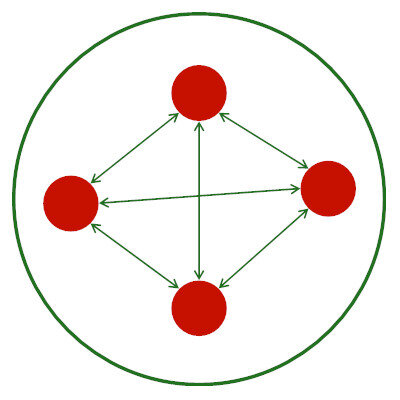

Mesh

In a mesh architecture, every user connects directly to every other user and sends their media to each of them. While there is no media server overhead, every device has to encode and upload a separate stream for each of the other participants, so this option usually falls short of offering any meaningful media quality and starts breaking down at 4 users or more.
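To see why mesh breaks down so quickly, here is a minimal sketch that counts what each participant has to handle. It assumes every user sends one video stream per peer at a fixed bitrate; the 1,000 kbps figure and the function name `mesh_load` are hypothetical, chosen only for illustration.

```python
def mesh_load(users: int, stream_kbps: int = 1000) -> dict:
    """Per-user and total load in a full mesh of `users` participants.

    Assumes (hypothetically) one stream per peer at a fixed bitrate.
    """
    peers = users - 1
    return {
        # Each unordered pair of users needs its own peer connection.
        "peer_connections": users * peers // 2,
        # Every user encodes and uploads a separate stream to each peer...
        "uplink_streams_per_user": peers,
        "uplink_kbps_per_user": peers * stream_kbps,
        # ...and receives one stream from each peer.
        "downlink_streams_per_user": peers,
    }


for n in (2, 4, 8):
    print(n, mesh_load(n))
```

With 4 users, each device already uploads 3 streams (3 Mbps under these assumptions), and the load keeps growing with every participant added.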

For the most part, consider vendors offering mesh topology for their video service as limited at best.

There’s more to know about WebRTC P2P mesh architecture.

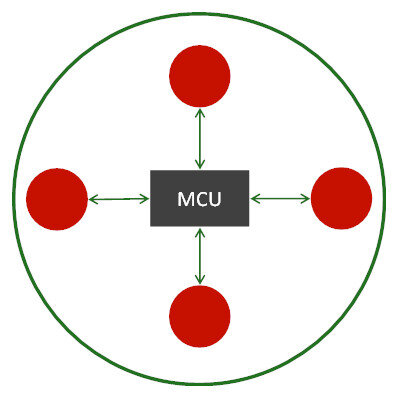

Mixing

MCUs were quite common before WebRTC arrived on the market. MCU stands for Multipoint Control Unit (also referred to as Multipoint Conferencing Unit), and it acts as a mixing point.

An MCU is a WebRTC media server that receives the incoming media streams from all users, decodes them all, composes them into a new layout and sends the result out to all users as a single stream.
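Composing a layout is part of what makes an MCU expensive: every incoming stream is decoded, scaled into a tile and re-encoded. As a rough illustration, here is a sketch of just the layout step, using a simple near-square grid. The function name `grid_layout` and the grid policy are hypothetical; real MCUs typically offer other layouts as well (active speaker, picture-in-picture and so on).

```python
import math


def grid_layout(participants: int, width: int, height: int) -> list[tuple[int, int, int, int]]:
    """Return one (x, y, w, h) tile per participant on a width x height canvas.

    Hypothetical policy: the smallest near-square grid that fits everyone.
    """
    if participants <= 0:
        return []
    cols = math.ceil(math.sqrt(participants))
    rows = math.ceil(participants / cols)
    tile_w, tile_h = width // cols, height // rows
    tiles = []
    for i in range(participants):
        row, col = divmod(i, cols)
        tiles.append((col * tile_w, row * tile_h, tile_w, tile_h))
    return tiles


# Each decoded stream would be scaled into its tile; the composed canvas
# is then encoded and sent to users as a single stream.
print(grid_layout(4, 1280, 720))
```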

This has the added benefit of being easy on user devices, which see the MCU as a single participant they are talking to; but it comes at a high compute cost on the server, and at the price of flexibility on the user side, since the layout is decided by the MCU.

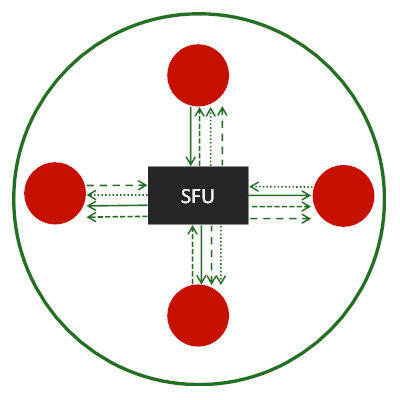

Routing

SFUs were a relatively new approach before WebRTC, but are now an extremely popular solution. SFU stands for Selective Forwarding Unit, and it acts like a router of media.

An SFU is a WebRTC media server that receives the incoming media streams from all users, and then decides which streams to send to which users.
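The routing decision itself can be modeled as a forwarding table: for each published stream, the list of users it should go to. Below is a minimal sketch, assuming a hypothetical rule where every user receives every other user’s stream unless it has unsubscribed from it. Note that media is only forwarded, never decoded.

```python
from typing import Optional


def build_forwarding_table(
    users: list[str], unsubscribed: Optional[dict[str, set[str]]] = None
) -> dict[str, list[str]]:
    """Map each sender to the receivers its stream is forwarded to.

    `unsubscribed[receiver]` is a hypothetical set of senders that receiver
    does not want (e.g. participants that are off-screen in its layout).
    """
    unsubscribed = unsubscribed or {}
    table = {}
    for sender in users:
        table[sender] = [
            receiver
            for receiver in users
            if receiver != sender and sender not in unsubscribed.get(receiver, set())
        ]
    return table


table = build_forwarding_table(["alice", "bob", "carol"], {"carol": {"alice"}})
print(table)
```

Unlike mesh, each user uploads its stream once to the SFU, and the fan-out to the other participants happens on the server.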

This approach leaves flexibility on the user side while reducing the computational cost on the server side, since an SFU forwards media without decoding or re-encoding it. That makes it the popular and cost-effective choice in WebRTC deployments.

To route media, an SFU can employ one of three distinct approaches:

- Multi-unicast

- Simulcast

- SVC

Multi-unicast

This is the naïve approach to routing media. Each user sends their video stream to the SFU, which then decides who to route this stream to.

If bitrates or resolutions need to be lowered, this is done either at the source, by forcing the sender to change the stream it sends (which affects every receiver of that stream), or at the receiving end, by having the receiving user throw away data it has already received and processed.
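With a single stream per sender, lowering quality at the source means one bitrate has to serve everyone. A minimal sketch, assuming the hypothetical policy of sending at the bitrate of the most constrained receiver (capped at a hypothetical 2,500 kbps encoder maximum), shows the catch: one weak receiver degrades the stream for all the others.

```python
def multi_unicast_send_bitrate(receiver_kbps: dict[str, int], max_kbps: int = 2500) -> int:
    """Bitrate a sender must use so that every receiver can keep up.

    Assumes (hypothetically) the sender adapts to its weakest receiver,
    capped at the encoder's maximum bitrate.
    """
    if not receiver_kbps:
        return max_kbps
    return min(max_kbps, min(receiver_kbps.values()))


# One receiver on a poor mobile link drags everyone down to its bitrate.
print(multi_unicast_send_bitrate({"bob": 2000, "carol": 1800, "dave": 300}))
```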

It is also how most WebRTC SFUs were implemented until recently. [UPDATE: That was true when this article was originally written in 2017. As of 2019, most are actually using simulcast.]

Simulcast

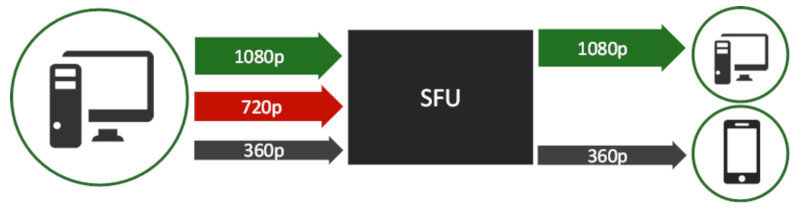

Simulcast is an approach where the user sends multiple video streams to the SFU. These streams are encodings of the exact same media at different quality levels, usually different resolutions and bitrates.
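In browsers, simulcast is configured on the sender through the `sendEncodings` list passed to `addTransceiver()`, where each encoding carries fields such as `rid`, `scaleResolutionDownBy` and `maxBitrate`. The sketch below only models the arithmetic of those layers in Python; the class `SimulcastLayer`, the three-layer 720p setup and its bitrates are hypothetical examples, not recommended values.

```python
from dataclasses import dataclass


@dataclass
class SimulcastLayer:
    rid: str                         # mirrors the RTCRtpEncodingParameters field name
    scale_resolution_down_by: float  # divisor applied to the source resolution
    max_bitrate_bps: int             # WebRTC's maxBitrate is expressed in bits per second


def layer_resolutions(width: int, height: int, layers: list[SimulcastLayer]) -> dict[str, tuple[int, int]]:
    """Approximate resolution each simulcast layer is encoded at."""
    return {
        layer.rid: (int(width / layer.scale_resolution_down_by), int(height / layer.scale_resolution_down_by))
        for layer in layers
    }


# Hypothetical three-layer setup for a 1280x720 camera.
LAYERS = [
    SimulcastLayer("q", 4.0, 150_000),
    SimulcastLayer("h", 2.0, 500_000),
    SimulcastLayer("f", 1.0, 1_500_000),
]
print(layer_resolutions(1280, 720, LAYERS))
```

The sender pays for this flexibility in uplink bandwidth and encoding work: in this example it encodes three streams totalling 2.15 Mbps instead of one.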

The SFU can then select which of the streams it received to send to each participant, based on that participant’s device capabilities, available network bandwidth or screen layout.
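The selection step can be sketched as picking, per receiver, the highest layer that fits both its estimated bandwidth and the size of the tile it will be displayed in. The layer list, the `pick_layer` name and the fallback to the lowest layer are assumptions for illustration; production SFUs also have to consider things like switching hysteresis and keyframe availability.

```python
# (rid, height in pixels, bitrate in kbps), lowest quality first. Hypothetical values.
SIMULCAST_LAYERS = [("q", 180, 150), ("h", 360, 500), ("f", 720, 1500)]


def pick_layer(available_kbps: int, display_height: int) -> str:
    """Return the rid of the best layer this receiver can use.

    Picks the highest layer whose bitrate fits the receiver's bandwidth and
    whose height does not exceed its on-screen tile; falls back to the
    lowest layer if nothing fits.
    """
    best = SIMULCAST_LAYERS[0][0]
    for rid, height, kbps in SIMULCAST_LAYERS:
        if kbps <= available_kbps and height <= display_height:
            best = rid
    return best


print(pick_layer(2000, 720))  # plenty of bandwidth, large tile
print(pick_layer(2000, 360))  # plenty of bandwidth, thumbnail tile
print(pick_layer(400, 720))   # large tile, constrained network
```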

Simulcast has started to crop up in commercial WebRTC SFUs only recently.

SVC

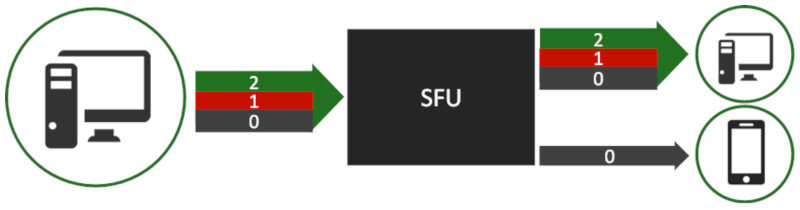

SVC stands for Scalable Video Coding. It is a technique where a single encoded video stream is created in a layered fashion, where each layer adds to the quality of the previous layer.
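Because each SVC layer only adds to the ones below it, decoding at a given quality requires that layer and every layer beneath it. A small sketch of that dependency, with hypothetical per-layer bitrates and a hypothetical `bitrate_up_to` helper:

```python
# Incremental bitrate (kbps) each spatial layer adds on top of the layers below.
# Hypothetical values for a three-layer stream.
SVC_LAYER_KBPS = [200, 400, 900]  # L0 (base), L1, L2


def bitrate_up_to(layer: int) -> int:
    """Total bitrate needed to decode up to and including `layer`.

    A layer is useless without the layers it builds on, so the cost is cumulative.
    """
    if not 0 <= layer < len(SVC_LAYER_KBPS):
        raise ValueError(f"layer must be in 0..{len(SVC_LAYER_KBPS) - 1}")
    return sum(SVC_LAYER_KBPS[: layer + 1])


print([bitrate_up_to(i) for i in range(len(SVC_LAYER_KBPS))])
```

Compare this with simulcast, where each stream is encoded independently and the sender uploads all of them; with SVC, the sender uploads one layered stream and every lower quality is a subset of it.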

When an SFU receives a media stream that uses SVC, it can peel layers off that stream to fit the outgoing stream to the quality, device, network and UI expectations of the receiving user. It offers better performance than simulcast in both compute and network resources.
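With VP9 SVC, the RTP payload descriptor of each packet carries a spatial layer ID and a temporal layer ID, which is what lets an SFU drop layers without decoding anything. The sketch below models packets as simple tuples; the `Packet` type, the `peel_layers` function and the target-layer values are hypothetical simplifications, not the actual RTP payload format.

```python
from typing import NamedTuple


class Packet(NamedTuple):
    seq: int
    spatial_id: int   # resolution layer; 0 is the base
    temporal_id: int  # frame-rate layer; 0 is the base


def peel_layers(packets: list[Packet], max_spatial: int, max_temporal: int) -> list[Packet]:
    """Forward only the packets a receiver needs for its target quality.

    Packets above the target spatial or temporal layer are dropped; no
    decoding or re-encoding takes place.
    """
    return [p for p in packets if p.spatial_id <= max_spatial and p.temporal_id <= max_temporal]


stream = [Packet(1, 0, 0), Packet(2, 1, 0), Packet(3, 2, 0),
          Packet(4, 0, 1), Packet(5, 1, 1), Packet(6, 2, 1)]
# A receiver on a small screen and a weak network: base resolution, base frame rate only.
print([p.seq for p in peel_layers(stream, max_spatial=0, max_temporal=0)])
```

A real SFU must also rewrite RTP sequence numbers (and related header fields) after dropping packets so receivers don’t interpret the gaps as loss; that bookkeeping is left out here.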

SVC has the added benefit of higher resiliency to network impairments, because error correction can be added to the base layers only. This works well over mobile networks, even for 1:1 calling.
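A back-of-the-envelope calculation shows why protecting only the base layer is cheap. It assumes, hypothetically, a 200 kbps base layer, 1,300 kbps of enhancement layers and forward error correction (FEC) that adds 50% redundancy to whatever it protects; `fec_overhead_kbps` is an illustrative helper, not part of any WebRTC API.

```python
def fec_overhead_kbps(base_kbps: int, enhancement_kbps: int, fec_ratio: float, base_only: bool) -> float:
    """Extra bitrate spent on forward error correction.

    `fec_ratio` is the (hypothetical) redundancy added per protected kbps.
    """
    protected = base_kbps if base_only else base_kbps + enhancement_kbps
    return protected * fec_ratio


print(fec_overhead_kbps(200, 1300, 0.5, base_only=True))   # protect the base layer only
print(fec_overhead_kbps(200, 1300, 0.5, base_only=False))  # protect every layer
```

Losing enhancement-layer packets only lowers quality for a moment, while losing base-layer packets leaves nothing decodable, so concentrating redundancy on the base layer protects what matters most at a fraction of the cost.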

SVC is very new to WebRTC and is only now being introduced as part of the VP9 video codec.

When you say most SFUs only did multi-unicast until recently, when was that written?

2017… Told you I was sick this week. Missed that when I did another read of it prior to hitting “publish”.

Added an update in the body of the text – thanks for the catch!

It would be great if you could also analyse all the popular open source WebRTC media servers for these techniques: which ones already support them and which ones still need to implement them. As always, your articles are great at simplifying the mysterious world of WebRTC. Thanks

Raghvendra, almost all open source WebRTC media servers today are SFU-based in nature. Some also offer MCU support (like Kurento).

I have 2 questions:

1) Only the Mesh topology is P2P or true WebRTC; the others can be implemented independently of WebRTC itself. Correct?

2) Would a mix of Mesh and MCU make sense? E.g. in a group call of 8 people, there are 2 mesh networks, 1-4 and 5-8, and users 4 and 5 act as MCUs connecting the 2 meshes. What are your thoughts?

Not sure what true WebRTC means…

The topology is just that – a topology. It can be used in a WebRTC implementation as well as in other VoIP technologies.

I wouldn’t use an MCU to begin with, and I don’t see how meshing and mixing in the way you suggest can work in real life.

Hi,

In the case of an SVC SFU, where each client sends layered frames to the SFU, how does signaling happen during call initiation? If client A wants to connect to clients B and C, how does A send its capabilities to B and C through the SFU? How is SDP shared between A and B, C? Does SDP pass through the SFU or through a separate server altogether?