Getting WebRTC video quality right requires some tweaking. Let’s see what levers we have available to us in the form of bitrate, resolution and frame rate.

Real-time video is tough. WebRTC might make things a bit easier, but there are still things you need to take care of, especially if you’re aiming to squeeze every possible ounce of WebRTC video quality out of your application to improve the user’s experience.

This time, I want to cover what levers we have at our disposal that affect video quality – and how to use them properly.


What affects video quality in WebRTC?

Video plays a big role in communication these days. A video call/session/meeting is going to heavily rely on the video quality. Obviously…

But what is it, then, that affects video quality? Let’s try to group the factors into 3 main buckets: out of our control, service related and device related. This will enable us to focus on what we can control and where we should put our effort.

Out of our control

There are things that are out of our control. We can affect them, but only a bit and only up to a point. To take the extreme case: if the user is sitting in Antarctica, inside an elevator, on a basement level somewhere, with no Internet connection and no cellular reception, then even if he complains that calls aren’t getting connected, there’s nothing anyone will be able to do about it besides suggesting he move closer to the Wi-Fi access point.

The two main things we can’t really control? Bandwidth and the transport protocol that will be used.

👉 We can’t control the user’s device and its capabilities either, but most of the time, people tend to understand this.

Bandwidth

Bandwidth is how much data we can send or receive over the network. The higher this value is, the better.

The thing is, we have little to no control over it:

- The user might be far from his access point

- He may have poor reception

- Or a faulty cable

- There might be others using the same access point and flooding it with their own data

- Someone could have configured the firewall to throttle traffic

- …

None of this is in our control.

And while we can do minor things to improve this, such as positioning our servers as close as possible to the users, there’s not much else.

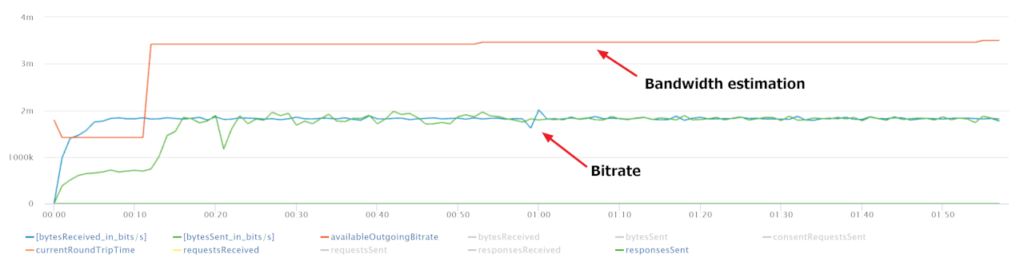

Our role with bandwidth is to estimate it as accurately as possible. WebRTC has mechanisms for bandwidth estimation. Why is this important? If we know how much bandwidth is available to us, we can make better use of it:

👉 Over-estimating bandwidth means we might end up sending more than the network can handle, which in turn is going to cause congestion (=bad)

👉 Under-estimating bandwidth means we will be sending out less data than we could have, which will end up reducing the media quality we could have provided to the users (=bad)
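In the browser, the sender-side estimate surfaces through `getStats()`: the active `candidate-pair` entry carries `availableOutgoingBitrate`, in bits per second. Below is a minimal sketch of reading it. It models stats entries with a small hypothetical `StatsEntry` interface so the logic runs outside a browser; in a page you would pass `(await pc.getStats()).values()`.

```typescript
// Minimal shape of the stats entries this sketch reads. In a browser they
// come from `await pc.getStats()`; here they are plain objects.
interface StatsEntry {
  id: string;
  type: string;                      // "transport", "candidate-pair", ...
  selectedCandidatePairId?: string;  // on "transport" entries
  nominated?: boolean;               // on "candidate-pair" entries
  state?: string;                    // on "candidate-pair" entries
  availableOutgoingBitrate?: number; // bits per second, on "candidate-pair" entries
}

// Returns the sender-side bandwidth estimate in bits per second,
// or undefined if the browser hasn't reported one (yet).
function estimatedOutgoingBitrate(entries: Iterable<StatsEntry>): number | undefined {
  const all = Array.from(entries);
  // Preferred: the pair the transport says it is currently using.
  const transport = all.find((e) => e.type === "transport" && e.selectedCandidatePairId !== undefined);
  const selected = all.find(
    (e) => e.type === "candidate-pair" && e.id === transport?.selectedCandidatePairId,
  );
  // Fallback: a nominated pair whose connectivity checks succeeded.
  const pair =
    selected ??
    all.find((e) => e.type === "candidate-pair" && e.nominated === true && e.state === "succeeded");
  return pair?.availableOutgoingBitrate;
}
```

Polling this every second or so gives you the number the rest of this article keeps coming back to: the upper bound for your bitrate.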

Transport protocol

I’ve already voiced my opinion about using TCP for WebRTC media and why this isn’t a good idea.

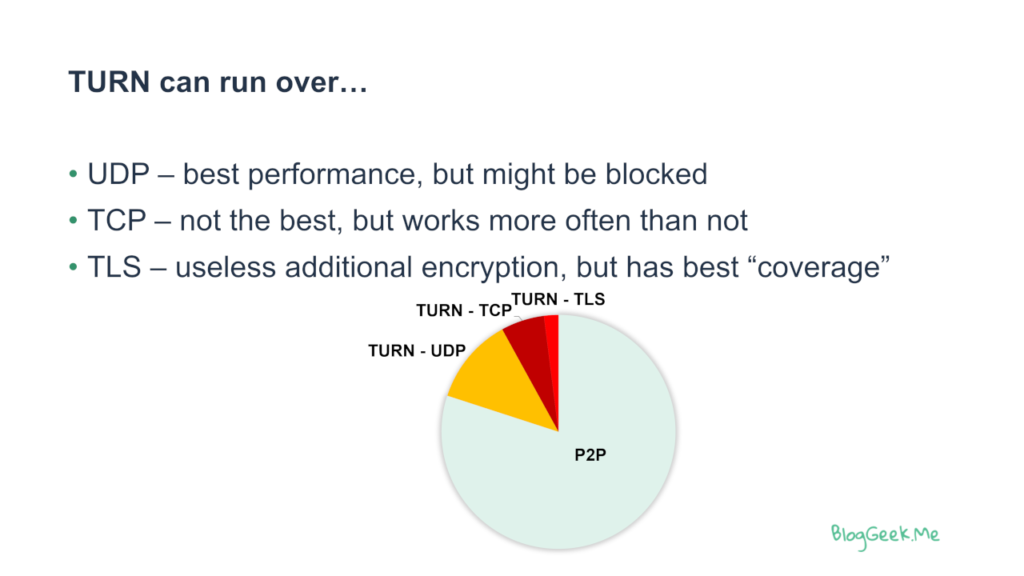

The thing is, you don’t really control what gets selected. For the most part, this is what the distribution of your sessions is going to look like:

- Most calls probably won’t need any TURN relay

- Most calls that need TURN relay, will do so over UDP

- The rest will likely do it over TCP

- And there’ll be those sessions that must have TLS

Why is that? Just because networks are configured differently. And you have no control over it.

👉 You can and should make sure the chart looks somewhat like this one. If 90% of your sessions are going over TURN/TCP, that should definitely raise a few red flags for you.

But once you reach a distribution similar to the above, or once you know how to explain what you’re seeing when it comes to the distribution of sessions, then there’s not much else for you to optimize.
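If you log the selected local ICE candidate of each session (the `local-candidate` stats entry behind the selected candidate pair, with its `candidateType` and, for relayed candidates, `relayProtocol`), you can compute this distribution yourself. The sketch below is one rough way to check it against the shape described above; the `looksHealthy` rule is a loose reading of that list (an assumption), not a standard metric.

```typescript
// The selected local ICE candidate of one session, as reported by getStats().
interface SelectedLocalCandidate {
  candidateType: "host" | "srflx" | "prflx" | "relay";
  relayProtocol?: "udp" | "tcp" | "tls"; // only meaningful for "relay"
}

type Bucket = "no-turn" | "turn-udp" | "turn-tcp" | "turn-tls";

function bucketOf(c: SelectedLocalCandidate): Bucket {
  if (c.candidateType !== "relay") return "no-turn";
  switch (c.relayProtocol) {
    case "tcp":
      return "turn-tcp";
    case "tls":
      return "turn-tls";
    default:
      return "turn-udp"; // assumption: a missing relayProtocol is counted as UDP
  }
}

// Share of sessions (0..1) in each bucket.
function transportDistribution(sessions: SelectedLocalCandidate[]): Record<Bucket, number> {
  const d: Record<Bucket, number> = { "no-turn": 0, "turn-udp": 0, "turn-tcp": 0, "turn-tls": 0 };
  for (const s of sessions) d[bucketOf(s)] += 1;
  const total = Math.max(sessions.length, 1); // avoid dividing by zero
  for (const k of Object.keys(d) as Bucket[]) d[k] /= total;
  return d;
}

// Rough check against the expected shape (an assumption, not a standard metric):
// most sessions need no TURN, and most relayed sessions use UDP.
function looksHealthy(d: Record<Bucket, number>): boolean {
  const relayed = d["turn-udp"] + d["turn-tcp"] + d["turn-tls"];
  const mostlyNoTurn = d["no-turn"] > relayed;
  const relayMostlyUdp = relayed === 0 || d["turn-udp"] > d["turn-tcp"] + d["turn-tls"];
  return mostlyNoTurn && relayMostlyUdp;
}
```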

Service related

Service-related factors are things that are within our control and are usually handled in our infrastructure. This is where differentiation based on how we decided to architect and deploy our backend comes into play.

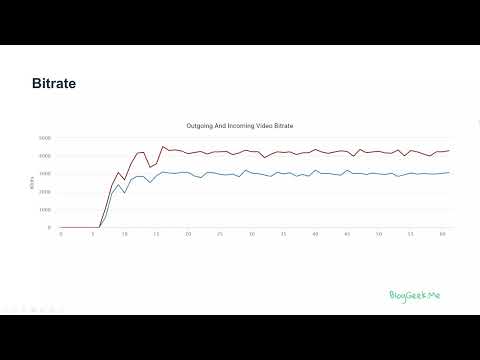

Bitrate

While bandwidth isn’t something we can control, bitrate is. Where bandwidth is the upper limit of what the network can send or receive, bitrate is what we actually send and receive over the network.

We can’t send more than what the bandwidth allows, and we might not always want to send the maximum bitrate that we can either.

Our role here is to pick the bitrate that is most suitable for our needs. What does that mean to me?

- Estimate the bandwidth available as accurately as possible

- This estimate is the maximum bitrate we can use

- Make use of as much of that bitrate as possible, as long as that gives us a quality advantage

👉 It is important to understand that increasing bitrate doesn’t always increase quality. It can cause detrimental decreases in quality as well.

Here are a few examples:

- If the camera source we have is of VGA resolution (640×480), then there’s no need to send 2Mbps over the network. 800kbps would suffice; more than that and we probably won’t see any difference in quality anyway

- The network might be able to carry 10Mbps in the downlink, but receiving 10Mbps of incoming video in aggregate from 5 participants (2Mbps each) will likely tax our CPU to the point of rendering it useless. In turn, this will actually cause frame drops and poor media quality

- Sending full HD video (1920×1080) only to display it in a small frame on the screen (because content shared in parallel is more important) is wasteful. We are eating up precious network resources and decoder CPU, only to scale the image down

There are a lot of other such cases as well.
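The first and third examples boil down to a cap: there’s no point paying for more pixels than the camera captured or than the viewer displays. A minimal sketch, assuming bitrate scales linearly with pixel count from the single VGA ≈ 800kbps data point above. Real codecs need less than linear growth, so treat the constants and the `usefulMaxBitrate` helper as hypothetical.

```typescript
interface Size {
  width: number;
  height: number;
}

// Anchor from the text: VGA (640x480) gains little above ~800 kbps.
const VGA_PIXELS = 640 * 480;
const VGA_USEFUL_BPS = 800000;

// Pixels worth encoding: no more than the camera captured,
// and no more than the viewer actually displays.
function effectivePixels(source: Size, displayed: Size): number {
  return Math.min(source.width * source.height, displayed.width * displayed.height);
}

// Hypothetical rule of thumb: scale the VGA anchor linearly by pixel count.
// Real codecs need less than linear growth, so this likely overestimates at high resolutions.
function usefulMaxBitrate(source: Size, displayed: Size): number {
  return Math.round((VGA_USEFUL_BPS * effectivePixels(source, displayed)) / VGA_PIXELS);
}
```

For a VGA camera this yields 800kbps no matter how large the viewer’s screen is, while full HD shown in a 320×240 tile drops to 200kbps.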

So what do we do? I know, I am repeating myself, but this is critical –

- Estimate bandwidth available

- Decide our target bitrate to be lower or equal to the estimate
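In the browser, those two steps map onto the standard `RTCRtpSender.getParameters()`/`setParameters()` pair and the `maxBitrate` field of an encoding. A sketch assuming a single, non-simulcast encoding; `SenderLike` is a hypothetical minimal interface that a real `RTCRtpSender` should fit structurally, used here so the code can run against a mock.

```typescript
// The subset of RTCRtpSender used here.
interface EncodingParams {
  maxBitrate?: number; // bits per second
}
interface SendParams {
  encodings: EncodingParams[];
}
interface SenderLike {
  getParameters(): SendParams;
  setParameters(params: SendParams): Promise<void>;
}

// Never above the bandwidth estimate, never above what the content can use.
function targetBitrate(estimateBps: number, usefulCapBps: number): number {
  return Math.max(0, Math.min(estimateBps, usefulCapBps));
}

// Assumes a single, non-simulcast encoding; simulcast layers each need their own cap.
async function applyTargetBitrate(sender: SenderLike, bps: number): Promise<void> {
  // Modify the object returned by the latest getParameters() call:
  // setParameters() rejects stale or hand-built parameter objects.
  const params = sender.getParameters();
  if (params.encodings.length === 0) return; // nothing to cap yet
  params.encodings[0].maxBitrate = bps;
  await sender.setParameters(params);
}
```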

Codecs

Codecs affect media quality.

For voice, G.711 is bad, Opus is great. Lyra and Satin look promising as future alternatives/evolution.

With video, this is a lot more nuanced. You have a selection of VP8, VP9, H.264, HEVC and AV1.

Here are a few things to consider when selecting a video codec for your WebRTC application:

- VP8 and H.264 both work well and are widely known and used

- VP9 and HEVC give better quality than VP8 and H.264 at the same bitrate, all other things being equal (and they never are)

- AV1 compresses better than all the other video codecs. But it is new, and not widely supported or understood

- H.264 has more hardware acceleration available to it, but VP8 has temporal scalability which is useful

- Hardware acceleration is somewhat overrated at times. It might even cause headaches (with bugs on specific processors), but it is worth aiming for if there’s a real need

- For group sessions you’d want to use simulcast or SVC. These probably aren’t available with HEVC. Oh, and simulcast has a lot of details you need to be aware of

- HEVC will leave you in an Apple only world

- VP9 isn’t widely used and the implementation of SVC that it has is still rather proprietary, so you’ll have some reverse engineering to do here

- AV1 is new as hell. And it eats lots of CPU. It has its place, but then again, this is going to be an adventure (at least in the coming year or two)

👉 Choosing a video codec for your service isn’t a simple task. If you don’t know what you’re doing, just stick with VP8 or H.264. Experimenting with codecs is a great time waster unless you know your way around them.
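If you do stick with VP8 or H.264, the standard browser mechanism for expressing that is `RTCRtpTransceiver.setCodecPreferences()`, fed with the list from `RTCRtpReceiver.getCapabilities("video")`. Below is a small sketch of the reordering step; `preferCodecs` is a hypothetical helper and `CodecCapability` mirrors only the fields it needs.

```typescript
// Fields of RTCRtpCodecCapability this sketch relies on.
interface CodecCapability {
  mimeType: string; // e.g. "video/VP8"
  clockRate: number;
  sdpFmtpLine?: string;
}

// Move preferred codecs to the front (in the given order) and keep all the
// others after them, so negotiation can still fall back to them.
function preferCodecs(codecs: CodecCapability[], preferred: string[]): CodecCapability[] {
  const rank = (c: CodecCapability): number => {
    const i = preferred.findIndex((m) => m.toLowerCase() === c.mimeType.toLowerCase());
    return i === -1 ? preferred.length : i;
  };
  // Array.prototype.sort is stable (ES2019+), so equal ranks keep their original order.
  return [...codecs].sort((a, b) => rank(a) - rank(b));
}
```

In a page this would be used as `transceiver.setCodecPreferences(preferCodecs(RTCRtpReceiver.getCapabilities("video")!.codecs, ["video/VP8", "video/H264"]))`. Keeping the non-preferred codecs in the list leaves room for negotiation to fall back if the other side can’t do VP8.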

Latency

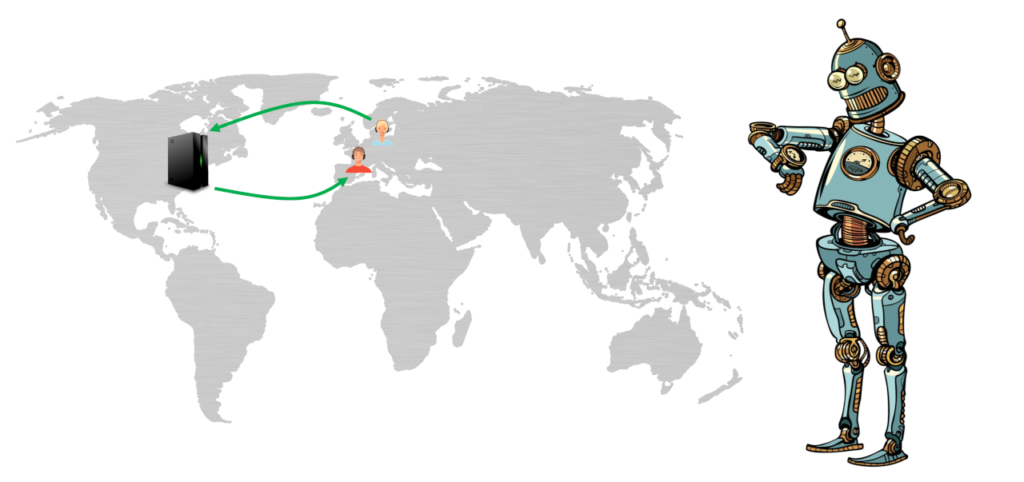

While we don’t control where users are – we definitely control where our servers are located. Which means that we can place the servers closer to the users, which in turn can reduce the latency (among other things).

Here are some things to consider here:

- TURN servers should be placed as close as possible to users

- In large group calls, we must have media servers

  - If we use a single server per meeting, then all users must connect directly to it

  - But if we distribute the media servers used for a single meeting, then we can connect users to media servers closer to where they are

- The faster we get the user’s data off the public network, the more control we have over the routing of the packets between our own servers

- The “shorter” the route from the user to our server is, the better the quality will be

  - Shorter might not be a geographic distance

  - We factor in bandwidth, packet loss, jitter and latency as the metrics we measure to decide on “shortest”

👉 Measure the latency of your sessions (through RTT). Try to reduce it for your users as much as possible, and assume this is an ongoing, never-ending process
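Picking the “shortest” server from those four metrics means collapsing them into a single cost. The sketch below does that with made-up weights. `PathMetrics`, `pathCost` and the weights are all hypothetical, and how you gather the measurements (probe sessions, the `currentRoundTripTime` of past sessions’ candidate pairs, and so on) is up to your infrastructure.

```typescript
// Hypothetical measurements of the path from a user to one candidate server.
interface PathMetrics {
  server: string;
  rttMs: number;
  jitterMs: number;
  lossRate: number; // fraction of packets lost, 0..1
  bandwidthKbps: number;
}

// Lower cost means a "shorter" path. The weights are made-up placeholders
// showing the shape of the decision, not tuned values.
function pathCost(m: PathMetrics): number {
  return m.rttMs + 2 * m.jitterMs + 1000 * m.lossRate + 100000 / Math.max(m.bandwidthKbps, 1);
}

function pickShortest(candidates: PathMetrics[]): string | undefined {
  let best: PathMetrics | undefined;
  for (const m of candidates) {
    if (best === undefined || pathCost(m) < pathCost(best)) best = m;
  }
  return best?.server;
}
```

For example, a nearby server with 20ms RTT but 30ms jitter, 5% loss and 500kbps scores 330, while a farther one with 60ms RTT, 5ms jitter, no loss and 5,000kbps scores 90 and wins: shorter isn’t necessarily closer.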

Here’s a session from Kranky Geek discussing latencies and media servers:

Looking at scale and servers

There’s a lot to be said about the infrastructure side in WebRTC. I tried to place these insights in an ebook that is relevant today more than ever – Best practices in scaling WebRTC deployments

Device related

You don’t get to choose the device your users are going to use to join their meetings. But you do control how your application is going to behave on these devices.

There are several things to keep in mind here that are going to improve the media quality for your users if done right on their device.

Available CPU

This should be your top priority: understanding how much CPU is being used on the user’s device and deciding when you’ve gone too far.

What happens when the device is “out of CPU”?

- The device will heat up. On a PC, the fan will start working busily and noisily; a mobile device will get hot. Battery life will also suffer. Interestingly, this is the least of your worries here

- WebRTC won’t be able to encode or decode media frames in time, so it will start to skip them

  - On the encoder side, this will mean a lower frame rate. Regrettable, but ok

  - The decoder is where things will start to get messy:

    - The decoder will drop frames and not try to decode them

    - Since video frames depend on one another, the decoder won’t be able to continue decoding the frames that follow

    - It will need a new I-frame and will ask for it

    - That will lead to video freezes, rendering the video useless

See also our article about the video quality metrics in WebRTC.

So what did we have here?

👉 You end up with poor video quality and video freezes

👉 The network gets more congested, since every request for an I-frame makes the sender transmit a new, large I-frame

👉 Your device heats up and battery life suffers

Your role here is to monitor CPU use, make sure it isn’t too high, and reduce it if it is. Your best tool for reducing CPU use is to reduce the bitrates you’re sending and/or receiving.

Sadly, monitoring the CPU directly is impossible in the browser itself, so you’ll need to find other means of figuring out the state of the CPU.
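`getStats()` does offer indirect signals. On the sending side, the `outbound-rtp` entry’s `qualityLimitationReason` reports `"cpu"` when the encoder is cutting quality because of CPU. On the receiving side, the `inbound-rtp` counters `framesDecoded`, `framesDropped` and `pliCount` expose the drop-and-request-a-keyframe spiral described above. Packet loss produces drops and PLIs too, so this is a heuristic sketch; the thresholds in `shouldReduceBitrate` are hypothetical.

```typescript
// Fields of getStats() entries used as indirect CPU signals.
interface OutboundVideoStats {
  // "outbound-rtp": why the encoder is currently reducing quality.
  qualityLimitationReason?: "none" | "cpu" | "bandwidth" | "other";
}
interface InboundVideoStats {
  // "inbound-rtp": cumulative counters since the stream started.
  framesDecoded: number;
  framesDropped: number;
  pliCount: number; // keyframe requests sent by this receiver
}

interface CpuSignals {
  encoderCpuLimited: boolean;
  dropRatio: number; // dropped / (decoded + dropped) between snapshots
  newPlis: number;
}

// Compare two inbound snapshots taken a few seconds apart.
function cpuSignals(out: OutboundVideoStats, prev: InboundVideoStats, curr: InboundVideoStats): CpuSignals {
  const decoded = curr.framesDecoded - prev.framesDecoded;
  const dropped = curr.framesDropped - prev.framesDropped;
  const total = decoded + dropped;
  return {
    encoderCpuLimited: out.qualityLimitationReason === "cpu",
    dropRatio: total > 0 ? dropped / total : 0,
    newPlis: curr.pliCount - prev.pliCount,
  };
}

// Hypothetical thresholds. Packet loss also causes drops and PLIs,
// so check loss before blaming the CPU.
function shouldReduceBitrate(s: CpuSignals): boolean {
  return s.encoderCpuLimited || (s.dropRatio > 0.05 && s.newPlis > 0);
}
```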

Content type

With video, content and placement matter.

Let’s say you have 1,000kbps of “budget” to spend. That’s because the bandwidth estimator gives you that amount and you know/assume the CPU of both the sender and receiver(s) can handle that bitrate.

How do you spend that budget?

- You need to figure out the resolution you want to send. The higher the resolution the “better” the image will look

- How about increasing frame rate? Higher frame rate will give you smoother motion

- Or maybe just invest more bits on whatever it is you’re sending

WebRTC makes its own decisions based on the bitrate available. It will automatically increase or reduce resolution and frame rate to achieve what it considers the best quality. You can even pass hints about your content type: whether you value motion over sharpness or vice versa.

There are things that WebRTC doesn’t know on its own, though:
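The standard way to pass that hint is the `contentHint` property of a video `MediaStreamTrack`: `"motion"`, `"detail"`, `"text"`, or `""` for no hint. Per the Content Hints spec, `"motion"` leans toward keeping frame rate and `"detail"`/`"text"` toward keeping resolution when bits run short. A sketch; `ContentKind` and the mapping are hypothetical app-level choices, and `TrackLike` stands in for a real track so the code runs outside a browser.

```typescript
type VideoContentHint = "" | "motion" | "detail" | "text";

// Hypothetical app-level notion of what is being sent.
type ContentKind = "camera" | "slides" | "screen-text" | "video-clip";

// Stand-in for MediaStreamTrack: only the fields used here.
interface TrackLike {
  kind: string;
  contentHint: string;
}

function hintFor(kind: ContentKind): VideoContentHint {
  switch (kind) {
    case "camera":
    case "video-clip":
      return "motion"; // value smooth motion over sharpness
    case "slides":
      return "detail"; // value sharpness over frame rate
    case "screen-text":
      return "text"; // like "detail", aimed at text content
  }
}

function applyContentHint(track: TrackLike, kind: ContentKind): void {
  if (track.kind !== "video") return; // audio tracks use different hint values
  track.contentHint = hintFor(kind);
}
```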

- It knows what resolution you captured your content with (so it won’t try to send it at a higher resolution than that)

- But it has no clue what the viewers’ screen or window resolution is

- So it might send more than is needed, wasting CPU and network resources on both ends of the session

- It isn’t aware whether the content sent is important or less important, which can affect how much bitrate to invest in it to begin with

- Oh – and it makes its decisions on the device. If you have a media server that processes media, then all that goodness needs to happen in your media server and its own logic

It is going to be your job to figure out these things and place/remove certain restrictions of what you want from your video.
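One way to act on the viewer’s window size is `scaleResolutionDownBy` on the sender’s encoding, so the frames sent are no bigger than the tile that renders them. A sketch, assuming your application signals the viewer’s rendering size back to the sender (or to your media server, which then owns this logic); `scaleDownFactor` is a hypothetical helper.

```typescript
interface Size {
  width: number;
  height: number;
}

// Largest downscale that still leaves the sent frame at least as big as the
// viewer's tile in both dimensions. Never upscales (result >= 1), which matches
// the valid range of scaleResolutionDownBy.
function scaleDownFactor(captured: Size, displayed: Size): number {
  if (displayed.width <= 0 || displayed.height <= 0) return 1; // hidden tile: see note below
  const factor = Math.min(captured.width / displayed.width, captured.height / displayed.height);
  return Math.max(1, factor);
}
```

Write the result into `params.encodings[0].scaleResolutionDownBy` through the same `getParameters()`/`setParameters()` round trip used for `maxBitrate`. For a tile that isn’t shown at all, setting the encoding’s `active` to `false` saves far more.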

Optimizing large group calls

The bigger the meeting, the more optimized your code will need to be in order to support it. WebRTC gives you a lot of powerful tools to scale a meeting, but it leaves a lot for you to figure out. This ebook will reveal these tools to you and enable you to increase your meeting sizes – Optimizing Group Video Calling in WebRTC

The 3-legged stool of WebRTC video quality

Video quality in WebRTC is like a 3-legged stool. All other things being equal, you can tweak the bitrate, frame rate and resolution. At least that’s what you have at your disposal dynamically, in real time, when you are in the middle of a session and need to make a decision.

Bitrate can be seen as the most important leg of the stool (more on that below).

The other two, frame rate and resolution are quite dependent on one another. A change in one will immediately force a change in the other if we wish to keep the image quality. Increasing or decreasing the bitrate can cause a change in both frame rate and resolution.
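A common rule of thumb for reasoning about this dependency is bits per pixel: the bitrate spread over every pixel of every frame. WebRTC doesn’t expose this number and codecs don’t spend bits uniformly, so treat it as a rough approximation. With bitrate R in bits per second, resolution W × H and frame rate F:

```latex
\mathrm{bpp} = \frac{R}{W \cdot H \cdot F},
\qquad
\frac{800\,000}{640 \cdot 480 \cdot 30} \approx 0.087,
\qquad
\frac{800\,000}{640 \cdot 480 \cdot 15} \approx 0.174,
\qquad
\frac{800\,000}{1280 \cdot 720 \cdot 30} \approx 0.029
```

At a fixed 800kbps, halving the frame rate doubles the bits each pixel gets, while moving from VGA to 720p (3× the pixels) cuts them to a third. That is why a change in one leg forces a change in the other if image quality is to stay the same.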

Follow the bitrate

I see a lot of developers start tweaking frame rates or resolutions. While this is admirable and even reasonable at times, it is the wrong starting point.

What you should be doing is follow the bitrate in WebRTC. Start by figuring out and truly understanding how much bitrate you have in your budget. Then decide how to allocate that bitrate based on your constraints:

- Don’t expect full HD quality for example if what you have is a budget of 300kbps in your bitrate – it isn’t doable

- If you have 800kbps you’ll need to decide where to invest them – in resolution or in frame rate

Always start with bitrate.

Then figure out the constraints you have on resolution and frame rate based on CPU, devices, screen resolution, content type, … and in general on the context of your session.

The rest (resolution and frame rate) should follow.

And in most cases, it will be preferable to “hint” WebRTC on the type of content you have and let WebRTC figure out what it should be doing. It is rather good at that, otherwise, what would be the point of using it in the first place?
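The same bits-per-pixel idea can drive the “bitrate first” order: fix the budget and the frame rate, then take the largest resolution the budget can feed. A sketch with a hypothetical 16:9 ladder and a made-up 0.04 bits-per-pixel floor; both are placeholders to tune, not WebRTC defaults.

```typescript
interface Rung {
  width: number;
  height: number;
}

// A typical 16:9 ladder, largest first (illustrative).
const LADDER: Rung[] = [
  { width: 1920, height: 1080 },
  { width: 1280, height: 720 },
  { width: 960, height: 540 },
  { width: 640, height: 360 },
  { width: 320, height: 180 },
];

// Hypothetical floor: below this many bits per pixel per frame we assume
// extra pixels only add blockiness. Tune for your content and codec.
const MIN_BPP = 0.04;

// Start from the bitrate budget and the chosen frame rate,
// then take the largest resolution that budget can actually feed.
function pickResolution(budgetBps: number, fps: number, ladder: Rung[] = LADDER): Rung {
  for (const r of ladder) {
    const bpp = budgetBps / (r.width * r.height * fps);
    if (bpp >= MIN_BPP) return r;
  }
  return ladder[ladder.length - 1]; // nothing fits: fall back to the smallest rung
}
```

With 800kbps, this picks 960×540 at 30fps but 1280×720 at 15fps, which is the resolution versus frame rate decision from the list above. With 300kbps at 30fps, it lands on 640×360, nowhere near full HD.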

Making a choice between resolution and frame rate

Once we have the bitrate nailed down – should you go for a higher resolution or a higher frame rate?

Here are a few guidelines for you to use:

- If your content is a slide deck or similar static content, you should aim for higher resolution at lower frame rate. If possible, go for VBR instead of the default CBR in WebRTC

- Assuming you’re in the talking-heads domain, a higher frame rate is the better selection. 30fps is what we’re aiming for, but if the bitrate is low, you will need to lower that as well. It is quite common to see services running at 15fps with users still happy with the results

- Sharing generic video content from YouTube or similar? Assume frame rate is more important than resolution

- Showing 9 or more participants on the screen? Feel free to lower the frame rate to 15fps (or less). Also make sure you’re not receiving video at resolutions that are higher than what you’re displaying

- Interested in the sharpness of what is being shared? Aim for resolution and sacrifice on frame rate
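These guidelines can be encoded as a small policy function. A sketch: `Scenario`, `policyFor` and the 5fps cap for slides are hypothetical, while the 30fps and 15fps values come from the list. The resulting cap could be written to an encoding’s `maxFramerate` through `setParameters()`.

```typescript
// Hypothetical description of what is being sent and how it's viewed.
interface Scenario {
  content: "slides" | "talking-head" | "video-clip";
  participantsOnScreen: number;
  sharpnessMatters: boolean;
}

interface VideoPolicy {
  favor: "resolution" | "framerate";
  maxFramerate: number;
}

function policyFor(s: Scenario): VideoPolicy {
  // Slides, or anything where sharpness matters: resolution first, low frame rate.
  // The 5 fps value is an assumed placeholder.
  if (s.content === "slides" || s.sharpnessMatters) {
    return { favor: "resolution", maxFramerate: 5 };
  }
  // Talking heads and shared video clips: frame rate first, aiming for 30 fps,
  // and 15 fps once 9 or more participants share the screen.
  return { favor: "framerate", maxFramerate: s.participantsOnScreen >= 9 ? 15 : 30 };
}
```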

Time to learn WebRTC

I’ve had my fair share of discussions lately with vendors who were working with WebRTC but didn’t have enough of an understanding of it. Often the results aren’t satisfactory, falling short of what is considered good media quality these days. All because of wrong assumptions or bad optimizations that backfired.

If you are planning to use WebRTC, or are already using it, then you should get to know it better. Understand how it works and make sure you’re using it properly. You can achieve that by enrolling in my WebRTC training courses for developers.

The title is pretty misleading. Where are the tweaks?

I don’t believe in giving set recipes. They tend not to work in complex systems.

This is why I try as much as possible to explain a topic along with the side effects each decision you make will have.

In the case of video quality, it is mostly about finding what your budget of bitrate is and going from there to decide on resolution and frame rate (as opposed to the other way around which I see happening too often).

Can you talk about mediasoup in future blog posts?

I mention it here: https://bloggeek.me/webrtc-trends-for-2022/

Great article! This is the only place where I can find such detailed information on tweaking the video quality.

I am trying to better understand the mechanisms that WebRTC uses by default. For example does it prioritize fps over resolution or how does it determine the encoder bitrate?

Do you know where I could find more information on this? Or is it proprietary source code?

Regards,

Tim

Bitrate/bandwidth estimation is done using REMB and TWCC. Both are specs/drafts of the IETF.

In WebRTC, prioritization for webcams is more towards FPS (motion) and for screen sharing it is mostly resolution (quality). You can add constraints to getUserMedia() to change this for the webcam.

Great article. I was wondering about how bitrate/resolution/framerate affect the decoder burden – is it faster to decode a high-res low fps stream compared to a low-res high fps stream at the same bitrate, for instance? Or is decoding work mostly just a flat function of bitrate? I'm thinking about a many-to-one usecase so decoder burden is my priority.

That’s a good question, and I don’t have an answer for it.

If I had to guess, I’d say it depends on all of them. Bitrate is just the incoming stream. A decoder generates an outgoing stream of raw frames. The higher the frame rate, the more pixels need to be generated. The same goes for a higher resolution.