Time to see what Google has in store for us with WebRTC.

At the Kranky Geek event two months ago, Google announced their roadmap for the rest of 2014. Besides being ambitious, it had a lot of interesting tidbits in it.

I wanted to try and decrypt the two roadmap slides of that event, to the best of my understanding.

Before I begin, some clarifications:

- I have no visibility into Google’s roadmap besides what was announced during the event. I could have asked, but decided to take this approach for various reasons

- I haven’t closely followed what made it into Chrome 36, which is now the default Chrome version out there, or Chrome 37, which already has a beta out. So expect some discrepancies between the slides, my decryption of them, and what we have in reality

- I decided to take this exercise because I think Google’s intentions here are quite telling

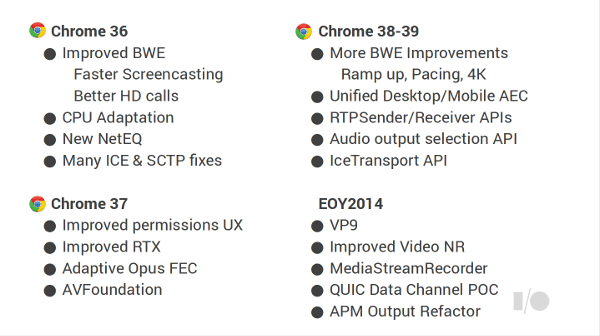

By Chrome Version

Chrome 36

Chrome 36 is the version we all run today (at least those with Chrome). Here’s what Google originally planned for this release:

Improved BWE (Faster screencasting and better HD calls)

Bandwidth Estimation (BWE) is the process of deciding how much bandwidth to allocate for a call. It is done dynamically, as the available bandwidth might change through the course of a video call due to external factors – other “consumers” in the same local network on either side of the call (email synchronization, Dropbox deciding to sync photos and videos from a smartphone, your kid playing an online game or watching YouTube/Netflix, …)

In this round, Google was focusing on the new screencasting technology it recently introduced. Screencasting differs from webcam video in that the screen is mostly static, but when the view changes (say, you switch a slide), the amount of change grows considerably and needs to be transmitted properly. Screencasting calls for different heuristics than “talking heads” do.

The “better HD calls” part means the estimation algorithms used by Google needed to be optimized for bitrates of 1 Mbps and above, which usually means ramping bitrates up and down much faster. To that end, Google decided to rewrite the whole bandwidth estimation component of WebRTC.
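To make the idea of dynamic estimation a bit more concrete, here is a toy loss-based rate controller. It is only a sketch: the function name, the thresholds and the step sizes are my own assumptions for illustration and are not taken from Chrome’s code.

```typescript
// Toy loss-based rate controller. Thresholds and step sizes are
// illustrative assumptions, not values used by Chrome.
function nextBitrate(
  currentBps: number,
  lossFraction: number, // 0.0 to 1.0, as reported by the receiver
  minBps: number = 50000,
  maxBps: number = 2500000
): number {
  let next = currentBps;
  if (lossFraction > 0.1) {
    // Heavy loss: back off in proportion to the loss.
    next = currentBps * (1 - 0.5 * lossFraction);
  } else if (lossFraction < 0.02) {
    // Almost no loss: probe upward by 5%.
    next = currentBps * 1.05;
  }
  // Between the two thresholds the rate is held steady.
  return Math.round(Math.min(maxBps, Math.max(minBps, next)));
}
```

Calling this once per feedback report gives the ramp-up and ramp-down behavior described above; a faster ramp would simply mean a larger upward step or more frequent reports.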

CPU Adaptation

When you run a video call, you end up eating a lot of your machine’s CPU. And in video encoding, there’s no reason not to invest more CPU in trying to crunch a bit more of the bitstream or improve the video quality a bit further – there’s no specific number of MIPS you use for encoders. And same as with bandwidth estimation, CPU is also a fluid resource that is used by many different consumers on your device – from antivirus software on your laptop to push notifications and other background processes on your smartphone. And then there’s all the other code running in the gazillion open Chrome tabs.

To that end, a video encoder needs to gauge the CPU usage of a device and reduce the amount of juice it takes from the CPU based on what’s available – so it doesn’t end up suffocating everything else.

The fact that this hasn’t been part of the implementation up until now is a bit sad (it is also why some of the WebRTC API Platforms added their own adaptations to the CPU issue as part of their platform).
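A minimal sketch of what such adaptation could look like is below. The 55 and 85 percent defaults mirror the googCpuUnderuseThreshold and googCpuOveruseThreshold values a reader reports seeing in the comments on this post; how Chrome actually uses them is not something I know, so the resolution ladder and the decision logic here are assumptions.

```typescript
type CpuAction = "downgrade" | "upgrade" | "hold";

// Decide what to do based on encoder CPU usage (in percent).
function cpuAction(
  usagePercent: number,
  underuse: number = 55,
  overuse: number = 85
): CpuAction {
  if (usagePercent > overuse) return "downgrade";
  if (usagePercent < underuse) return "upgrade";
  return "hold";
}

// Hypothetical ladder of frame heights, lowest first.
const RESOLUTION_LADDER: number[] = [180, 360, 720];

// Move one rung down or up the ladder depending on CPU usage.
function adaptHeight(currentHeight: number, usagePercent: number): number {
  const i = RESOLUTION_LADDER.indexOf(currentHeight);
  if (i < 0) return currentHeight; // unknown rung: leave untouched
  const action = cpuAction(usagePercent);
  if (action === "downgrade") return RESOLUTION_LADDER[Math.max(0, i - 1)];
  if (action === "upgrade") {
    return RESOLUTION_LADDER[Math.min(RESOLUTION_LADDER.length - 1, i + 1)];
  }
  return currentHeight;
}
```

The gap between the two thresholds acts as a dead zone, so the encoder doesn’t flip back and forth between resolutions on every measurement.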

New NetEQ

Hmm…

From the WebRTC.org website:

A dynamic jitter buffer and error concealment algorithm used for concealing the negative effects of network jitter and packet loss. Keeps latency as low as possible while maintaining the highest voice quality.

GIPS’ NetEQ was best of breed 4 years ago when it was acquired by Google. It was on par with most other commercial offerings of its kind. But it seems it was time for a major overhaul and rewriting of the NetEQ component. Justin Uberti stated during the session that this is to improve stereo support.
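For a feel of what a dynamic jitter buffer has to track, here is a small sketch. The jitter update follows the interarrival jitter formula from RFC 3550; the buffer sizing policy (a fixed multiple of the jitter, clamped) is purely my assumption and is not how NetEQ itself works.

```typescript
// Running interarrival jitter estimate in the style of RFC 3550.
// All times are in milliseconds here for readability.
function updateJitter(
  jitterMs: number,
  prevSendMs: number,
  prevRecvMs: number,
  sendMs: number,
  recvMs: number
): number {
  // Difference between the receive spacing and the send spacing.
  const d = (recvMs - prevRecvMs) - (sendMs - prevSendMs);
  return jitterMs + (Math.abs(d) - jitterMs) / 16;
}

// Hypothetical policy: buffer a fixed multiple of the jitter estimate,
// clamped to a sane range.
function targetBufferMs(
  jitterMs: number,
  multiplier: number = 3,
  minMs: number = 20,
  maxMs: number = 200
): number {
  return Math.min(maxMs, Math.max(minMs, jitterMs * multiplier));
}
```

The tension in the quote above shows up directly in `targetBufferMs`: a bigger buffer hides more jitter but adds latency, so the target has to follow the network rather than stay fixed.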

Many ICE & SCTP fixes

Bug fixes are always a great thing. The ones in focus here are ICE related (NAT traversal) and SCTP (data channel). From the session, this was about standards compliance.

Chrome 37

Out in beta already, and Google have asked for feedback about the permissions UX part of it.

Improved permissions UX

The whole permissions experience was kind of broken in Chrome. When you call getUserMedia(), the browser asks the user to allow access to the camera and/or microphone. That’s the permissions UX. Many users just missed it (myself included, from time to time, even today).

The overhaul is something that is greatly needed. The decision was to have a floating bubble for it. You can learn more about it in this link: https://groups.google.com/forum/#!topic/discuss-webrtc/0Oh7JYXyITg

Put that as a Chrome usability issue, which isn’t related only to WebRTC (and something other browsers may need to address as well).

Improved RTX

RTX stands for retransmission. It is done on the video side when using VP8. Retransmission is useless for most packets, as the packet that gets retransmitted will arrive too late. It is used to get packets of golden frames in VP8 which are used in the decoding of future packets as well.

The improvement here is in aligning it to the standard.
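One way to check whether RTX was negotiated is to look for rtx entries in the SDP; a reader comment on this post reports seeing `a=rtpmap:96 rtx/90000` in Chrome 37. The helper below is a rough sketch of that check, and its name is made up for illustration.

```typescript
// Collect the payload types that are mapped to "rtx" in an SDP blob.
function rtxPayloadTypes(sdp: string): number[] {
  const result: number[] = [];
  const lines = sdp.split(/\r?\n/);
  for (let i = 0; i < lines.length; i++) {
    const m = /^a=rtpmap:(\d+) rtx\/\d+/.exec(lines[i]);
    if (m) result.push(parseInt(m[1], 10));
  }
  return result;
}

// Sample SDP fragment, trimmed down to the lines that matter here.
const sampleVideoSdp = [
  "m=video 9 RTP/SAVPF 100 96",
  "a=rtpmap:100 VP8/90000",
  "a=rtpmap:96 rtx/90000",
  "a=ssrc-group:FID 1111 2222",
].join("\r\n");

const negotiatedRtx = rtxPayloadTypes(sampleVideoSdp);
```

An empty array means the other side did not offer retransmission at all.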

Adaptive Opus FEC

Opus, the voice codec in WebRTC, now gets to use its forward error correction capabilities in an adaptive manner, i.e., only when necessary.

That should get the audio quality of WebRTC even better than it already is.
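Turning on Opus FEC is typically done by SDP mangling, i.e. editing the session description before it is applied. Here is a sketch of that idea using the `useinbandfec=1` format parameter defined for Opus over RTP. The function name is mine, and this is not a copy of what any particular sample app does.

```typescript
// Add useinbandfec=1 to the Opus fmtp line of an SDP blob.
// If Opus is not offered, the SDP is returned unchanged.
function enableOpusFec(sdp: string): string {
  const lines = sdp.split(/\r?\n/);
  let opusPt: string | null = null;
  let rtpmapIndex = -1;
  for (let i = 0; i < lines.length; i++) {
    const m = /^a=rtpmap:(\d+) opus\/48000/i.exec(lines[i]);
    if (m) {
      opusPt = m[1];
      rtpmapIndex = i;
      break;
    }
  }
  if (opusPt === null) return sdp;

  const prefix = "a=fmtp:" + opusPt + " ";
  for (let i = 0; i < lines.length; i++) {
    if (lines[i].indexOf(prefix) === 0) {
      // Existing fmtp line: append the parameter unless it is already set.
      if (lines[i].indexOf("useinbandfec=") === -1) {
        lines[i] += ";useinbandfec=1";
      }
      return lines.join("\r\n");
    }
  }
  // No fmtp line for Opus yet: add one right after the rtpmap line.
  lines.splice(rtpmapIndex + 1, 0, prefix + "useinbandfec=1");
  return lines.join("\r\n");
}
```

You would run the local or remote description’s SDP through this before passing it on to the peer connection. The “adaptive” part happens inside the codec; the SDP only signals that FEC may be used.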

AVFoundation

This one relates to Mac machines. There’s a change in the interface used to access devices (camera anyone?). End result – fewer crashes…

Chrome 38-39

This is what we can expect in the October-December timeframe.

More BWE Improvements (Ramp up, Pacing, 4K)

Bandwidth estimation is still an issue, even after the improvements on Chrome 36, so more is expected here. We will see such investments well into 2015 and 2016 as well.

Unified Desktop/Mobile AEC

Improvements in echo cancellation. This is much-needed work, especially on Android devices, where the operating system isn’t that accommodating to the real-time nature of WebRTC (and VoIP in general).

RTPSender/Receiver APIs

New APIs! Essentially, they provide a per-stream bitrate control mechanism. This should enhance the constraints mechanism that exists in WebRTC already.
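For reference, the sender API that browsers eventually standardized exposes this through `RTCRtpSender.getParameters()` and `setParameters()`, with a `maxBitrate` field per encoding. Whether the 2014 roadmap item looked like that is a guess on my part. The sketch below keeps the logic in a plain helper with minimal stand-in interfaces (both hypothetical) so it can be read without a browser.

```typescript
// Minimal stand-ins for the shape of the browser's send parameters.
interface EncodingLike {
  maxBitrate?: number;
}
interface SendParametersLike {
  encodings: EncodingLike[];
}

// Cap every existing encoding at maxBps and return the same object,
// ready to be handed back to setParameters().
function withMaxBitrate<T extends SendParametersLike>(params: T, maxBps: number): T {
  for (let i = 0; i < params.encodings.length; i++) {
    params.encodings[i].maxBitrate = maxBps;
  }
  return params;
}

// In a browser (not executed here), usage would look roughly like:
//   const sender = pc.getSenders()[0];
//   await sender.setParameters(withMaxBitrate(sender.getParameters(), 500000));
```

The helper only touches encodings that already exist, since the number of encodings is generally fixed once the sender is set up.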

Audio output selection API

Another usability feature – one that exists in many VoIP softphones already.

IceTransport API

This is about enabling the creation of use cases where more control over ICE is required for privacy reasons – enforcing relay for example.
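Enforcing relay can be approximated in JavaScript by dropping every ICE candidate that is not of type relay, as a reader points out in the comments on this post. A small sketch of that filter follows, together with a configuration object using the `iceTransportPolicy` option that was standardized later. The TURN server URL and credentials are placeholders.

```typescript
// True if an ICE candidate line describes a relay (TURN) candidate.
function isRelayCandidate(candidate: string): boolean {
  return /\btyp relay\b/.test(candidate);
}

// Placeholder configuration: the server and credentials are made up.
const relayOnlyConfig = {
  iceServers: [
    { urls: "turn:turn.example.org", username: "user", credential: "secret" },
  ],
  iceTransportPolicy: "relay",
};

// In a browser (not executed here), the manual filter would sit in the
// onicecandidate handler and only signal candidates that pass the check:
//   pc.onicecandidate = (e) => {
//     if (e.candidate && isRelayCandidate(e.candidate.candidate)) {
//       signal(e.candidate); // signal() is a hypothetical signaling helper
//     }
//   };
```

The privacy angle is that host and server-reflexive candidates reveal local and public IP addresses to the other side, while relay candidates only reveal the TURN server.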

EOY2014

This is the wish list for WebRTC at Google. It is things the product managers want to fit into 2014, and most probably the developers are pushing back because of the huge workload they already have.

To be fair, there’s a lot of progress being made here – especially considering the fact that it all gets wrapped into Chrome…

If only one or two of the features below make it, that would be great.

VP9

Google is starting the migration from VP8 to VP9 in WebRTC. There is still no decided mandatory video codec for WebRTC, but Google wants to make sure it changes the game and the discussion.

People are starting to warm up towards using H.264 in WebRTC (just see what Firefox did with their latest nightly supporting H.264); so it is time to change the tune and place the competition between VP9 and H.265.

H.265 is just as new as VP9, and patents in H.265 are going to be more expensive and more of a headache than they are in H.264. These should keep Google ahead of the pack in the video debate while providing better video quality for developers.

Improved Video NR

Google is working hard on reducing the cost of computing. Chromebooks and the Android One initiative show that clearly. Couple that with the huge install base of older machines running Chrome, and you get to the point where many, if not most, devices out there with a front-facing camera have crappy camera quality. This makes most video sessions from home look bad compared to enterprise-grade room systems.

Google, being a software company, is looking to improve this in software. That’s what improved video noise reduction is all about.

MediaStreamRecorder

The ability to record the stream locally in the browser. Not sure to what purpose, when everything gets migrated to the cloud (especially storage).

That said, developers have requested this capability.

QUIC Data Channel POC

This one is interesting. Really interesting. QUIC is a new transport protocol, aiming to replace TCP in some ways. It is something experimental that Google is running below SPDY (their replacement/experimental protocol for HTTP 1.1).

SPDY will probably be decommissioned some time after HTTP 2.0 gets standardized, and assuming it fits the needs of Google.

QUIC may take the same route and for Google having it in WebRTC is just another grand experiment as opposed to a real replacement for SCTP in the data channel.

The reason for going for QUIC? Google aren’t happy with the performance they see in SCTP for the data channel and are looking for alternatives that can open up more use cases for the data channel.

If you are using the data channel in your service, I suggest you beef up your understanding and knowledge of QUIC.

APM Output Refactor

Refactoring WebRTC and other Chrome code…

It will be harder to take the WebRTC code in the future and repurpose it and port it on your own.

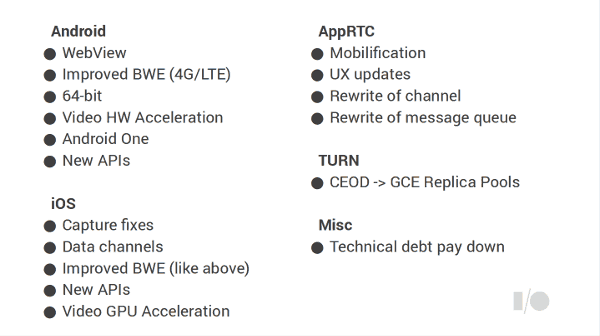

By Project Stream

Android

WebView

Android L (the upcoming release) will support WebRTC inside WebView.

This means that if you want to write an app by using HTML5 and wrapping it, then your HTML5 code will now be able to make use of WebRTC APIs on Android. It will make it a lot more accessible to mobile developers (on Android).

Improved BWE (4G/LTE)

Networks behave differently. So any optimizations done for Ethernet, ADSL or WiFi (3 different optimizations) won’t make a difference for LTE. This is why there’s attention to LTE separately as well here.

While focus is Android, my guess is that the code here will be good for iOS or any other LTE device.

64-bit

64-bit is all the rage. Apple came out first with 64-bit support, and Android is following suit. Chrome is getting 64-bit, and now so is Android.

WebRTC needs to be able to run in 64 bit as well. This requires some (or much) porting, optimization and testing effort on behalf of Google.

Video HW Acceleration

Hardware acceleration is painfully missing for WebRTC’s VP8 codec. This is going to get a remedy in the code. It will improve quality and performance a lot.

Android One

Android One is Google’s new initiative on getting all Android devices aligned on the same code and UX. WebRTC is going to be a first class citizen there.

It is important for those who question the importance of WebRTC for Google (and their ongoing and future support of this technology).

New APIs

This is about aligning the Java APIs in Android with the WebRTC APIs. It should make things easier for those wishing to write native Android apps that use WebRTC.

iOS

iOS may be irrelevant for Google, but they still need and want to support it. Some reasons why:

- Google Hangouts which uses WebRTC needs to run on iOS

- For developers to adopt WebRTC for mobile apps – they need a good starting point for iOS

There’s no real need to go over each and every one of the details – just to indicate that Google are taking iOS seriously and will provide something usable for developers.

AppRTC

AppRTC is Google’s sample application for WebRTC. It isn’t that good of a starting point today for WebRTC developers.

Google is planning a refresh, but I still don’t think the result will be satisfactory – it needs to change to a standalone solution instead of using Google’s App Engine for its backend.

TURN

CEOD -> GCE Replica Pools

This is about scaling up TURN servers on CEOD (https://github.com/juberti/computeengineondemand) and making this part of Google Compute Engine.

We’re still at a time where TURN is hard for developers. It needs to get simpler, and Google are aware of it.

What did we have?

- A lot of specification alignment. Google started by getting things running and then polishing the edges. It makes for faster development and shorter feedback loops from developers

- A lot of focus on user experience – from permissions to understanding of the network and the local machine WebRTC runs on

- Out with the old in with the new – VP9, QUIC – experiments on a grand scale to improve what we do, and to pave the way to what WebRTC will become

Google are continuing their heavy investment in WebRTC. While it is already usable and quite impressive, it is going to be harder with each release for those who continually FUD against this technology and its adopters to claim superiority.

–

You can watch the whole roadmap session by Google here:

Local stream recording is valuable if you’d like to be able to retain a copy without impacting the real-time aspect or forcing the user through a media streamer (i.e. no P2P) – you can run the stream in real-time, record it locally, then shunt it to the store post-session, where it will have no impact on the real-time bandwidth available.

Plus sometimes the user just wants to be able to save their own copy of course, in which case you save a lot of effort.

Point taken Richard. Thanks.

Nice post. Actually you can see the roadmap when looking at the peerconnection constraints and SDP exchanges in chrome’s webrtc-internals page, too. The overlap with your guesses is quite large.

- Improved BWE: I think that is the googLeakyBucket:true constraint, possibly also the googImprovedWifiBwe:true one

- CPU Adaptation: if you use hangouts in canary, you will see googCpuOveruseEncodeUsage:true, googCpuUnderuseThreshold:55, googCpuOveruseThreshold:85 peerconnection constraints.

- Improved permissions UX: see https://code.google.com/p/chromium/issues/detail?id=400285 for some of the problems they still need to solve. This won’t come in M37 (which my stable channel has already upgraded to)

- Improved RTX: yep, you can now see a=ssrc-group:FID for video in the SDP negotiation in M37. Also a=rtpmap:96 rtx/90000 lines in the SDP.

- Adaptive Opus FEC: apprtc shows how to enable that through SDP mangling:

https://github.com/GoogleChrome/webrtc/blob/master/samples/web/content/apprtc/js/main.js#L271

- RTPSender/Receiver APIs: those are unfortunately still missing even from Canary 🙁

- Audio output selection API: see the googDucking:false and chromeRenderToAssociatedSink:true flags in hangout. This shows the first signs, Navigator.getMediaDevices is targeted for M39 I think.

- IceTransport API: well… this is actually just to restrict the candidate types to relay ones. Doable in Javascript currently by dropping any onicecandidate event where the candidate is not of type relay. No new usecases.

- “It will be harder to take the WebRTC code in the future and repurpose it and port it on your own.”

It already got harder: https://groups.google.com/forum/#!topic/discuss-webrtc/QbC6KXWoaB4 (I am surprised nobody has complained yet).

Philipp,

Thanks for putting these details here. This adds another perspective, which is the fact that a lot of the features we see in Google’s roadmap are derived from requests coming in from the Hangouts team (who are also testing the features before they are shared with everyone).