It must have been a fun week for Zoom. The Zoom vulnerability that came to light shows why WebRTC is needed if you value security.

For those who haven’t followed the tech news, a week ago Jonathan Leitschuh publicly disclosed a serious vulnerability in Zoom. If you have a Mac and installed Zoom to join a meeting, then people could use web pages and links to force your machine to open up your Zoom client and camera. To make things worse, uninstalling Zoom was… impossible. That same link would forcefully reinstall Zoom as well.

I don’t want to get into how serious the vulnerability that was found actually is. I’d rather discuss what got Zoom there and, to some extent, why WebRTC is the better technical choice.

If you are interested in security in general, then you can learn more about WebRTC security.

What caused the Zoom vulnerability?

The road to hell is paved with good intentions.

When the Zoom app installs on your machine, it tries to integrate itself with the browser, in an effort to make it really quick to respond. The idea behind it is to reduce friction for the user.

An installation process is usually a multistep process these days:

- You click a link on the browser

- The link downloads an executable file

- You then need to double click that executable

- A pop up will ask you if you are sure you want to install

- The installation will take place and the app will run

Anything can go wrong in each step along the way – and when things can go wrong they usually do. At scale, this means a lot of frustration for users.

I’ve been at this game myself. Before the good days of WebRTC, when I worked at a video conferencing company, this was a real pain for us. My company at the time developed its own desktop client, as an app that gets downloaded as a browser plugin. We had lots of issues and bugs in getting it installed properly and in removing friction.

These days, you can’t install browser plugins, so you’re left with installing an app.

Zoom tried to do two things here:

- If the Zoom app was installed, automate the process of running it from a web page

- If the Zoom app was not installed, try and automate the process of installing and running it

That first thing? Everyone tries to do it these days. We’re in the business of removing friction for users – remember?

The second one? That’s something that people consider outrageous. You uninstall the Zoom app, and if you open a web page with a link to a Zoom meeting it will go about silently reinstalling it in the background. Why? Because there’s a “virus” left by the Zoom installation on your system: a web server that waits for commands, one of which is installing the Zoom client.

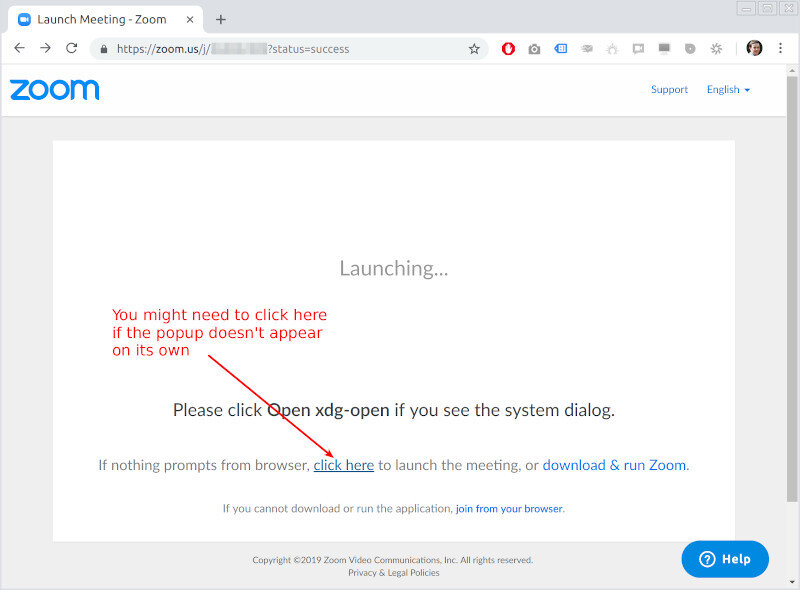

Here’s how joining a Zoom call looks on my Chrome browser in Linux:

The Zoom URL for joining a meeting opens the above window. Sometimes, it pops up a dialog and sometimes it doesn’t. When it doesn’t, you’re stuck on the page with either the need to “download & run Zoom” (which is weird, since it is already installed on my machine), “join from your browser” which we already know gives crappy quality or “click here”.

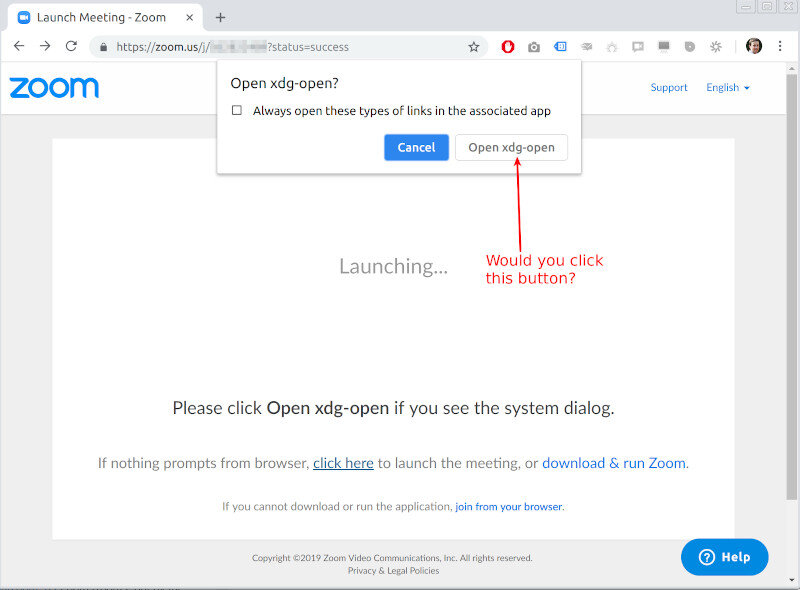

Since I am used to this weirdly broken behavior, I already know that I need to “click here”. This will bring about this lovely pop up:

This isn’t Zoom – it is Chrome opening a dialog of its own indicating that the browser page is trying to open a natively installed Linux application. It took me quite some time to decide to click that “Open xdg-open” button for these kinds of installed apps. For the most part, this is friction. Ugly friction at its best.

Does the Google Chrome team care? No. Why should they? Companies taking the experience out of the domain of the browser and into native-land is something they’d prefer not to happen.

Does Zoom care? It does. Not on Linux apparently (otherwise, this page would have been way better in its explanation of what to do). But on Mac? It cares so much that it went above and beyond to reduce that friction, going as far as trying to hack its way around security measures set by the Safari team.

Is the Zoom vulnerability really serious?

Maybe. Probably. I don’t know.

It was disclosed as a zero-day vulnerability, which is considered rather serious.

The original analysis of the Zoom vulnerability indicated quite a few avenues of attack:

- The use of an undocumented API on a locally installed web server

- Disguising the API calls as images to bypass and ignore a browser security policy

- Ability to force a user to join a meeting with a click of a link without further request for permissions. The user doesn’t need to even approve that meeting

- Ability to force a webcam to open in a meeting on a click of a link without further request for permissions. The user doesn’t need to even approve that meeting

- Denial of service attack by forcing the Zoom app to open over and over again

- Silently installing Zoom if it was uninstalled

Some of these issues have been patched by Zoom already, but the thing that remains here is the responsibility of developers in applications they write. We will get to it a bit later.
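To make the local web server problem a bit more concrete, here is a minimal sketch of the kind of check a localhost helper could apply before acting on a request. It is an illustration only and not Zoom’s code: the function name, the allowlist and the example origins are all hypothetical. It assumes the helper can read the HTTP `Origin` header, and it treats a missing header as untrusted, since a plain image load typically arrives without one.

```typescript
// Hypothetical allowlist of web origins that may talk to the local helper.
// The origin below is an illustrative assumption, not a real service.
const ALLOWED_ORIGINS: string[] = ["https://meetings.example.com"];

// Decide whether a request hitting the localhost helper should be served.
// `originHeader` is the raw value of the HTTP Origin header, or undefined
// when the browser did not send one.
function isRequestAllowed(
  originHeader: string | undefined,
  allowed: string[] = ALLOWED_ORIGINS
): boolean {
  // No Origin, an empty one, or the opaque "null" origin: refuse.
  if (originHeader === undefined || originHeader === "" || originHeader === "null") {
    return false;
  }
  // Exact match only. Prefix or substring matching would let
  // "https://meetings.example.com.evil.net" slip through.
  return allowed.indexOf(originHeader.toLowerCase()) !== -1;
}
```

An origin check on its own is not a full defense. It only shows that the decision to act on a request belongs to the developer of the helper, not to the web page that sent it.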

While I am no security expert, this got the attention of Apple, who decided to automate the process and simply remove the Zoom web server from all Mac machines remotely and be done with it. It was serious enough for Apple.

Security is a game of cat and mouse

There are 3 main arms races going on on the internet these days:

- Privacy vs data collection

- Ads vs ad blockers (related to the first one)

- Hackers vs security measures

Zoom fell for that 3rd one.

Assume that every application and service you use has security issues and unknown bugs that might be exploited. The many data breaches we’ve had in the last few years at companies large and small indicate that clearly. So do the ransomware attacks on US cities.

Unified communications and video conferencing services are no different. As video use and popularity grow, so will the number of breaches and security exploits that are found.

There were security breaches for these services before and there will be after. This isn’t the first or the last time we will be seeing this.

Could Zoom or any other company minimize its exposure? Sure.

Zoom’s response to the vulnerability

My friend Chris thinks Zoom handled this nicely, with Eric Yuan joining a video call with security hackers. I see it more as a PR stunt. One that ended up backfiring, or at least not helping Zoom’s case here.

The end result? This post from Zoom, signed by the CEO as the author. This one resonates here:

Our current escalation process clearly wasn’t good enough in this instance. We have taken steps to improve our process for receiving, escalating, and closing the loop on all future security-related concerns

In the end, this won’t reduce the number of people using Zoom or even slow Zoom’s growth. Users like the service and are unlikely to switch. A few people might heed John Gruber’s suggestion to “eradicate it and never install it again”, but I don’t see this happening en masse.

Zoom got scorched by the fire and I have a feeling they’ll be doing better than most in this space from now on.

Zoom competitors’ dancing moves

A few competitors of Zoom were quick to respond. The 3 that got to my email and RSS feed?

LogMeIn had a post on the GoToMeeting website, taking this stance:

- “We don’t have that vulnerability or architectural problem”

- “We launch our app from the browser, but through the standard means”

- “Our uninstalls are clean”

- “We offer a web client so users don’t need to install anything if they don’t want to”

- “We’re name-dropping words like SOC2 to make you feel secure”

- “Here’s our security whitepaper for you to download and read”

Lifesize issued a message from their CEO:

- “Zoom is sacrificing security for convenience”

- “Their response is indefensibly unsatisfactory”

- “Zoom still does not encrypt video calls by default for the vast majority of its customers”

- “We take security seriously”

Apizee decided to join the party:

- “We use WebRTC which is secure”

- “We’re doing above and beyond in security as well”

The truth? I’d do the same if I were a competitor and comfortable with my security solution.

The challenge? Jonathan Leitschuh or some other security researcher might well go check them out, and who knows what they will find.

Why does WebRTC improve security?

For those who don’t know, WebRTC offers voice and video communications from inside the browser. Most vendors today use WebRTC, and for some reason, Zoom doesn’t.

There are two main reasons why WebRTC improves security of real time communication apps:

- It is implemented by browser vendors

- It only allows encrypted communications

Many have complained about WebRTC and the fact that you cannot send unencrypted media with it. All VoIP services prior to WebRTC ran unencrypted by default, adding encryption as an optional feature.

Unencrypted media is easier to debug and record, but also enables eavesdropping. Encrypted media is thought to be a CPU hog due to the encryption process, something that in 2019 needs to be an outdated notion.
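The difference is visible in the session description itself. A WebRTC offer advertises its media over a DTLS-based profile such as `UDP/TLS/RTP/SAVPF` and carries an `a=fingerprint` line, while a classic unencrypted VoIP offer uses `RTP/AVP`. Here is a rough sketch that inspects an SDP blob for these markers. The function names are made up for illustration, and this is a debugging aid under those assumptions, not a security audit.

```typescript
// Collect the transport protocol of every media section in an SDP blob.
// An m= line has the form: m=<media> <port> <proto> <fmt> ...
function mediaProtocols(sdp: string): string[] {
  const protocols: string[] = [];
  sdp.split(/\r?\n/).forEach((line) => {
    const match = /^m=\S+ \S+ (\S+)/.exec(line);
    if (match && match[1]) {
      protocols.push(match[1]);
    }
  });
  return protocols;
}

// Heuristic: the SDP has media sections, none of them use plain RTP,
// and a DTLS certificate fingerprint is present.
function looksEncrypted(sdp: string): boolean {
  const protocols = mediaProtocols(sdp);
  if (protocols.length === 0) {
    return false;
  }
  const usesPlainRtp = protocols.some((p) => p === "RTP/AVP" || p === "RTP/AVPF");
  if (usesPlainRtp) {
    return false;
  }
  return /^a=fingerprint:/m.test(sdp);
}
```

Running it on the `sdp` string of a browser-generated offer should report the DTLS profile for every media section, since the browser gives you no way to ask for anything else.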

When Zoom decided not to use WebRTC, they essentially decided to take full responsibility and ownership of all security issues. They did that from a point of view and stance of an application developer or maybe a video conferencing vendor. They didn’t view it from a point of view of a browser vendor.

Browsers are secured by default, or at least try to be. Since they are general purpose containers for web applications that users end up using, they run with sandboxed environments and they do their best to mitigate any security risks and issues. They do it so often that I’d be surprised if there are any other teams (barring the operating system vendors themselves) who have better processes and technologies in place to handle security.

By striving for frictionless interactions, Zoom ran head-on into an area where browser vendors handle security threats of unknown code execution. Zoom made the mistake of trying to hack their way through the security fence that the Safari browser team put in place instead of working within the boundaries provided.

Why did they take that approach? Company DNA.

Zoom “just works”, or so the legend goes. So anything that Zoom developers can do to perpetuate that is something they will go to great lengths to do.

WebRTC has a large set of security tools and measures put in place. These enable running it frictionlessly without the compromises that Zoom had to make to get to a similar behavior.

Interesting? Learn more about WebRTC security.

Where may WebRTC fail?

There are several places where WebRTC is failing when it comes to security. Some of these are issues that are being addressed, while others are rather debatable.

I’d like to mention 4 areas here:

#1 – WebRTC IP leak

Like any other VoIP solution, WebRTC requires access to the local IP addresses of devices to work. Unlike any other VoIP solution, WebRTC exposes these IP addresses to the web application on top of it in JavaScript in order to work. Why? Because it has no other way to do this.

This has been known as the WebRTC IP leak issue, which is a minor issue if you compare it to the Zoom zero day exploit. It is also one that is being addressed with the introduction of mDNS, which I wrote about last time.
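To see what is actually exposed, look at the ICE candidate strings the browser hands to JavaScript. The fifth space-separated field is the address: with the leak it is a private IP such as `192.168.x.x`, and with mDNS it becomes a random `.local` hostname. Below is a small sketch that classifies a candidate line. The function and type names are hypothetical, and it only covers IPv4 private ranges to keep things short.

```typescript
type AddressKind = "mdns" | "private-ipv4" | "other";

// Classify the connection address of an ICE candidate line of the form:
// candidate:<foundation> <component> <transport> <priority> <address> <port> typ <type> ...
function classifyCandidateAddress(candidate: string): AddressKind {
  const fields = candidate.trim().split(/\s+/);
  const address = fields[4] || "";

  // mDNS candidates replace the local IP with "<random id>.local".
  if (/\.local$/i.test(address)) {
    return "mdns";
  }

  // RFC 1918 private ranges: 10/8, 172.16/12 and 192.168/16.
  const m = /^(\d+)\.(\d+)\.\d+\.\d+$/.exec(address);
  if (m) {
    const a = Number(m[1]);
    const b = Number(m[2]);
    if (a === 10 || (a === 172 && b >= 16 && b <= 31) || (a === 192 && b === 168)) {
      return "private-ipv4";
    }
  }
  return "other";
}
```

Feeding it the candidates from an `icecandidate` event in a test page is a quick way to check which of the two behaviors your browser currently has.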

A few months from now, the WebRTC IP leak will be a distant problem.

I also wouldn’t categorize it as a security threat. At most it is a privacy issue.

#2 – Default access to web camera and microphone

When you use WebRTC, the browser is going to ask you to allow access to your camera and microphone, which is great. It shows that users need to agree to that.

But they only need to agree once per domain.

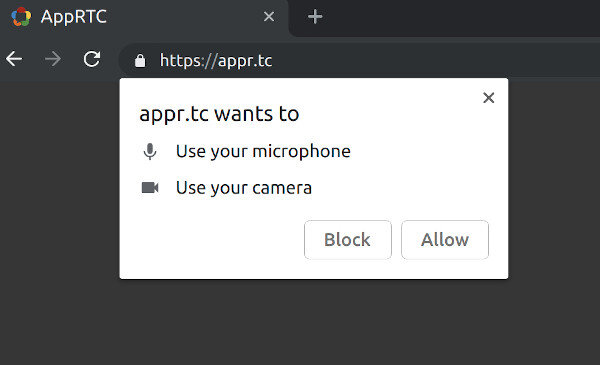

Go to the Google AppRTC demo page. If it is the first time you’re using it, it will ask you to allow access to your camera and microphone. Close the page and reopen it – and it won’t ask again. That’s at least the behavior on Chrome. Each browser takes its own approach here.

Clicking the Allow button above would cause all requests for camera and microphone access from appr.tc to be approved from now on without the need for an explicit user consent.

Is that a good thing? A bad thing?

It reduces friction, but ends up doing exactly what Jonathan Leitschuh complained about with Zoom as well – being able to open a user’s webcam without explicit consent just by clicking on a web link.

Today this is considered standard practice with WebRTC and with video meetings in general. I’d go further and say that if there’s anything that pisses me off, it is video conferencing services that make me join with muted video, requiring me to explicitly unmute it.

As I said, I am not a security expert, so I leave this for you to decide.

#3 – Ugly exploits

Did I say a cat and mouse game? Advertising and ad blockers are there as well.

Advertisers try to push their ads, sometimes aggressively, which brought into the world the ad blockers, who then deal with cleaning up the mess. So advertisers try to hack their way through ad blockers.

Since there’s big advertising money involved, there are those who try to game the system: by using machines to automate ad viewing and clicking to increase revenue, by getting real humans in poor countries to manually click ads for the same reason, or by just injecting their own code and ads instead of the ads that should have appeared.

That last one was found using WebRTC to inject its code, by placing it in the data channel. There’s some more information on the DEVCON website. Interestingly, this exploit works best via Webview inside apps like Facebook that open web pages internally instead of through the browser. It makes it a lot harder to research and find in that game of cat and mouse.

I don’t know if this is being addressed at all at the moment by browser vendors or the standards bodies.

#4 – Lazy developers

This is the biggest threat by far.

These are developers using WebRTC who don’t know better, or who just assume that WebRTC protects them and do their best not to take responsibility for their part of the application.

Remember that WebRTC is a building block – a piece of browser based technology that you use in your own application. Also, it has no signaling protocol of its own, so it is up to you to decide, implement and operate that signaling protocol yourself.
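Since signaling is yours, so is validating it. As one small example of what that responsibility looks like, here is a sketch that parses an incoming signaling message and rejects anything unexpected before it gets near a peer connection. The message shape (`type`, `room`, `payload`), the room name rule and the size limit are assumptions made up for this illustration. WebRTC does not define any of them.

```typescript
// Hypothetical signaling message shape; WebRTC itself mandates none.
interface SignalMessage {
  type: "offer" | "answer" | "candidate";
  room: string;
  payload: string;
}

// Parse and validate a raw signaling message received from the network.
// Returns null for anything that does not match the expected shape.
function parseSignalMessage(raw: string, maxLength: number = 65536): SignalMessage | null {
  // Refuse oversized input before spending time on parsing it.
  if (raw.length > maxLength) {
    return null;
  }
  let data: any;
  try {
    data = JSON.parse(raw);
  } catch (e) {
    return null;
  }
  if (data === null || typeof data !== "object") {
    return null;
  }
  const type = data.type;
  if (type !== "offer" && type !== "answer" && type !== "candidate") {
    return null;
  }
  // Room names are restricted to a conservative character set.
  if (typeof data.room !== "string" || !/^[A-Za-z0-9_-]{1,64}$/.test(data.room)) {
    return null;
  }
  if (typeof data.payload !== "string") {
    return null;
  }
  // Copy only the known fields so extra properties are dropped.
  return { type: type, room: data.room, payload: data.payload };
}
```

Authentication, authorization per room and transport security (HTTPS and secure WebSocket) still need to sit around a check like this.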

Whatever you do on top of WebRTC needs to be done securely as well, but it is your responsibility. I’ve written a WebRTC security checklist that you can check out.

Why isn’t Zoom using WebRTC?

Zoom was founded in 2011.

WebRTC was just announced in 2011.

At the time it started, WebRTC wasn’t a thing.

When WebRTC became a thing, Zoom were probably already too invested in their own technology to be bothered with switching over to WebRTC.

While Zoom wanted frictionless communications for its customers, it probably had and still has to pay too big a price to switch to WebRTC. This is probably why when Zoom decided to support browsers directly with no downloads, they went for WebAssembly and did not use WebRTC. The results are a lot poorer, but it allowed Zoom to stay within the technology stack it already had.

The biggest headache for Zoom here is probably the video codec implementation. I’ll take a guess and assume that Zoom are using their own proprietary video codec derived from H.264. The closest indication I could find for it was this post on the Zoom website:

We have better coding and compression for our screen sharing than any other software on the market

If Zoom had codecs that are compatible with WebRTC or that can easily be made compatible with WebRTC they would have adopted WebRTC already.

Zoom took the approach of using this as a differentiator and focusing on improving their codecs, most probably thinking that media quality was the leading factor for people to choose Zoom over alternative solutions.

Where do we go from here?

It is 2019.

If you are debating using WebRTC or a proprietary technology then stop debating. Use WebRTC.

It will save you time and improve the security as well as many other aspects of your application.

If you’re still not sure, you can always contact me.

Mind you that after muting your own camera in a webrtc app, the app has the ability to unmute it without needing confirmation (i.e. a button click) from the user.

Could a WebRTC app implement a remote unmute feature? Totally…

This sounds really fishy and strange. Zoom actually created their own zero-day vulnerability, which now Apple remotely removes from users computers, how is that possible?

Well… they didn’t intentionally create a zero-day vulnerability. They created a feature that could only work architecturally if they hacked their way through Apple’s Safari sandbox. That, in turn, was implemented in a way that was vulnerable to different types of attacks.

The publicity of this, along with the potential security risk, led Apple to remove the app remotely.

Hacking is about making money, where’s the money to be made with having someone join a video meeting

John,

That’s one definition of hacking, which is mostly related to security breaching and not money – at least not directly.

Hacking is about finding your way into a software system in ways that aren’t documented, expected or supported. It is at least what I meant in that use of the word in this article.

Great Post!

Great post, thanks for sharing. From my understanding and research, Zoom are using H.264 SVC as their codec.

please help while using ZOOM a message always appear YOUR CPU IS AFFECTING THE QUALITY OF THE MEETING why?

Pattie,

You should reach out to Zoom and ask them.

Soooo this was written before the explosion of Zoom use…and subsequent bad pr over the security issues…yet they haven’t really made the switch?

Your article has confirmed for me that I want to keep my Chromebook..I’ve loved them for nearly a decade but was super frustrated by the limited ability to use Zoom. It is for my own good!