You will need to decide what is more important for you – quality or latency. Trying to optimize for both is bound to fail miserably.

[In this series of short articles, I go over some WebRTC-related quotes and try to explain them]

The first thing I ask people who want to use WebRTC for a live streaming service is:

What do you mean by live?

This is a fundamental question and a critical one.

If you search Google, you will see vendors stating that good latency for live streaming is below 15 seconds. That might be good enough for some uses, but it is quite crappy if you are watching a live soccer game and your neighbors, who saw the goal 15 seconds before you did, are already shouting.

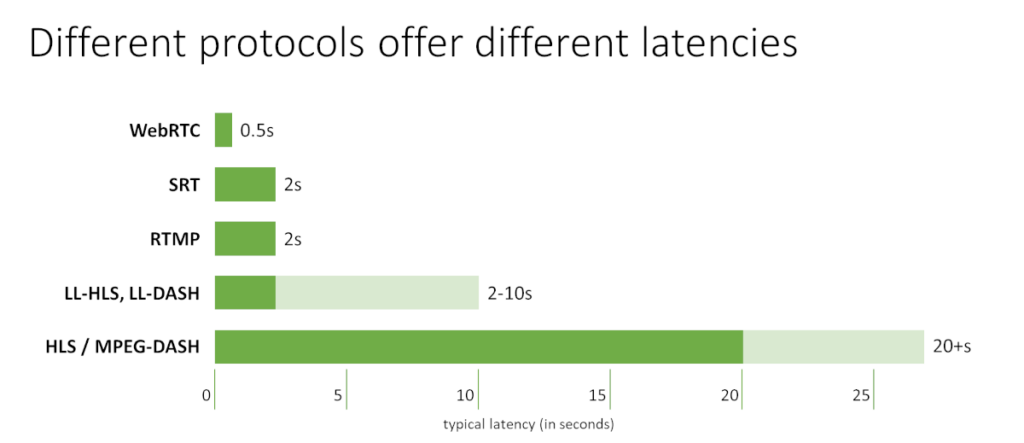

I like using the diagram above to show the differences in latencies by different protocols.

WebRTC leaves all other standards-based protocols in the dust. It is the only true sub-second-latency streaming protocol. That doesn’t mean it is superior – just that it has been optimized for latency. And in order to do that, it sacrifices quality.

How?

By not relying on retransmissions or long buffers.

With all other protocols, you are mostly going to run over HTTPS or TCP. And all other protocols heavily rely on retransmissions in order to get the complete media stream. Here’s why:

- Networks are finicky, and in most cases, that means you will be dealing with packet losses

- You stream a media file over the internet, and on the receiving end, parts of that file will be missing – lost in transmission

- So you manage it with retransmission mechanisms. The easiest way to do that is by relying on HTTPS – the main transport protocol used by browsers anyway

- And HTTPS leans on TCP to offer reliability of data transmission, which in turn is done by retransmitting lost packets

- Retransmissions require time, which means adding a buffering mechanism to make room for it to work and provide a smooth viewing experience. That time is the latency we see ranging from 2 seconds up to 30 seconds or more
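To make the last point concrete, here is a toy model of how much playout buffer a receiver needs so that a lost packet can be retransmitted and still arrive in time. The `LinkModel` shape, the `requiredBufferMs` function, and the numbers are illustrative assumptions, not how TCP or any specific player computes its buffer; real stacks add segment sizes, encoder delay, and safety margins on top.

```typescript
// Toy model only: names and numbers are assumptions for illustration.
interface LinkModel {
  rttMs: number;           // round-trip time between sender and receiver
  lossDetectionMs: number; // time until a loss is noticed (timeout or gap)
  maxRetries: number;      // retransmission attempts the buffer should absorb
}

function requiredBufferMs(link: LinkModel): number {
  // Each recovery attempt costs the detection time plus one round trip:
  // the loss signal reaches the sender, and the resent packet travels back.
  return link.maxRetries * (link.lossDetectionMs + link.rttMs);
}

const bufferMs = requiredBufferMs({ rttMs: 100, lossDetectionMs: 200, maxRetries: 3 });
console.log(bufferMs); // 900
```

Even with these modest numbers, absorbing three recovery attempts already costs close to a second of delay, before any other buffering is added.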

WebRTC comes from the real-time, interactive, conversational domain. There, even a second of delay is too long to wait – it breaks the experience of a conversation. So in WebRTC, the leading approach to dealing with packet loss isn’t retransmission, but rather concealment. WebRTC tries to conceal packet losses, and it also tries to keep them to a minimum by providing a finely tuned bandwidth estimation mechanism.
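As a rough illustration of what concealment means, the sketch below fills in a lost audio frame by repeating the last good frame at a reduced volume instead of waiting for a retransmission. This is a deliberately naive technique; the concealment inside real WebRTC implementations is far more sophisticated. The `Frame` type, the `concealLosses` function, and the `decay` parameter are hypothetical names chosen for this example.

```typescript
// A frame is an array of audio samples; null marks a frame lost in transit.
type Frame = number[] | null;

function concealLosses(frames: Frame[], frameSize: number, decay = 0.5): number[][] {
  const out: number[][] = [];
  // Until the first frame arrives, the "last good frame" is silence.
  let last: number[] = new Array<number>(frameSize).fill(0);
  for (const frame of frames) {
    if (frame !== null) {
      last = frame; // received: play it as-is
    } else {
      // Lost: replay the previous output, attenuated, so that a burst of
      // losses fades toward silence instead of looping at full volume.
      last = last.map((sample) => sample * decay);
    }
    out.push(last);
  }
  return out;
}

const played = concealLosses([[1, -1], null, null, [0.5, 0.5]], 2);
console.log(played);
```

Nothing is ever waited for here: every frame slot produces output immediately, which is why this approach keeps latency low at the cost of fidelity.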

Looking at WebRTC itself, it includes a jitter buffer implementation. The jitter buffer is in charge of delaying playout of incoming media. This is done to absorb network jitter, offering smoother playback. It is also used to implement lip synchronization between incoming audio and video streams. You can, to some extent, control it by instructing it not to delay playout. This again trades quality for lower latency.
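In browsers, one way to ask for less playout delay is the `jitterBufferTarget` attribute on `RTCRtpReceiver`, expressed in milliseconds. The sketch below assumes a browser that supports this attribute; it is a hint that the browser may clamp or ignore, and support varies between browsers. The `ReceiverLike` and `ConnectionLike` interfaces and the `requestMinimalPlayoutDelay` helper are names made up for this example, used so the code does not depend on up-to-date DOM typings.

```typescript
// Structural subsets of RTCRtpReceiver and RTCPeerConnection (assumed shapes,
// declared here so the sketch compiles without recent DOM type definitions).
interface ReceiverLike {
  jitterBufferTarget?: number | null;
}
interface ConnectionLike {
  getReceivers(): ReceiverLike[];
}

// Asks every receiver that exposes jitterBufferTarget to aim for targetMs of
// buffering. Returns how many receivers were updated.
function requestMinimalPlayoutDelay(pc: ConnectionLike, targetMs = 0): number {
  let updated = 0;
  for (const receiver of pc.getReceivers()) {
    if ("jitterBufferTarget" in receiver) {
      receiver.jitterBufferTarget = targetMs; // a hint, not a guarantee
      updated++;
    }
  }
  return updated;
}
```

With a real connection this would be called as `requestMinimalPlayoutDelay(peerConnection)` once the remote tracks have been added. Expect choppier playback on jittery networks when the target is pushed toward zero.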

You see, the lower the latency you want, the bigger the technical headaches you will need to deal with in order to maintain high quality. Which in turn means that whenever you want to reduce latency, you are going to pay in complexity and also in the quality you will be delivering. One way or another, there’s a choice being made here.

👉 Looking to learn more on how to use WebRTC technology to build your solution? We’ve got WebRTC training courses just for that!
