From time to time, WebRTC is going to discard media packets. Monitoring such behavior and understanding the reasons is important to optimize media quality.

WebRTC does things in real time. That means that if something takes its sweet time to occur, it will be too late to process it. This boils down to the fact that from time to time, WebRTC will discard media packets, which isn’t a good thing. Why is that going to happen? There are quite a few reasons for it, which is what this article is all about.

A WebRTC Q&A

I just started a new initiative with Philipp Hancke. We’re publishing an answer to a WebRTC related question once a week (give or take), trying to keep it all below the 2 minutes mark.

We are going to cover topics ranging from media processing, through signaling to NAT traversal. Dealing with client side or server side issues. Or anything else that comes to mind.

👉 Want to be the first to know? Subscribe to the YouTube channel

👉 Got a question you need answered? Let us know

Discarded media packets in WebRTC

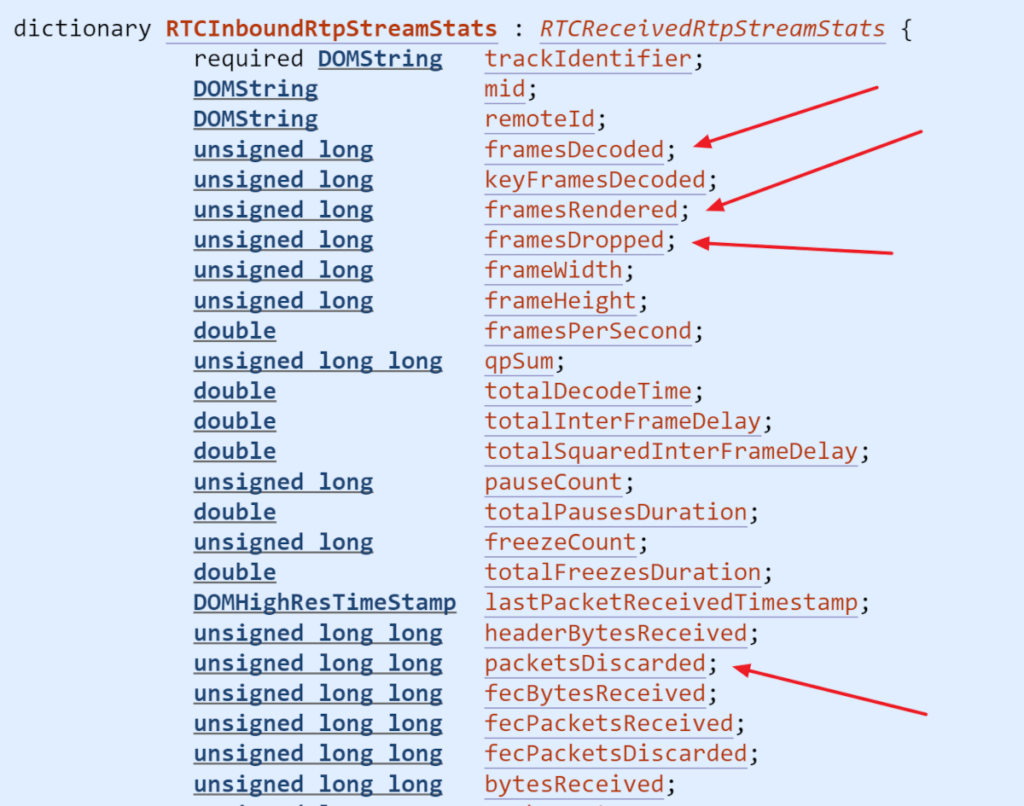

Media packets and frames can be, and are, discarded by WebRTC in real life calls. There are even getstats metrics that allow you to track these.

The screenshot above was taken from the RTCInboundRtpStreamStats dictionary of getstats. I marked most of the important metrics we’re interested in for discarding media data.

packetsDiscarded – this field counts the packets that the jitter buffer decided to discard and ignore because they arrived too early or too late. It relates to audio packets.

framesXXX fields are dealing with video only and look at full frames which can span multiple packets. They get discarded because of a multitude of reasons which we will be dealing with later in this article. For the time being – just know where to find this.
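To make this a bit more concrete, here is a minimal sketch of pulling these counters out of a stats report. The field names are the ones from RTCInboundRtpStreamStats; the `InboundRtpLike` and `InboundDiscardSummary` types and the `summarizeInboundDiscards` function are names I made up for illustration, and missing fields are treated as 0 since not every browser reports all of them.

```typescript
// Minimal shape of the stats entries we read; real reports carry many more fields.
interface InboundRtpLike {
  type: string;              // we only look at "inbound-rtp" entries
  kind?: string;             // "audio" or "video"
  packetsDiscarded?: number;
  framesReceived?: number;
  framesDecoded?: number;
  framesDropped?: number;
  framesRendered?: number;
}

// Hypothetical summary type, not part of any WebRTC API.
interface InboundDiscardSummary {
  audioPacketsDiscarded: number;
  videoFramesReceived: number;
  videoFramesDecoded: number;
  videoFramesDropped: number;
  videoFramesRendered: number;
}

// Sums the discard-related counters across all inbound-rtp entries.
function summarizeInboundDiscards(entries: InboundRtpLike[]): InboundDiscardSummary {
  const summary: InboundDiscardSummary = {
    audioPacketsDiscarded: 0,
    videoFramesReceived: 0,
    videoFramesDecoded: 0,
    videoFramesDropped: 0,
    videoFramesRendered: 0,
  };
  for (const s of entries) {
    if (s.type !== "inbound-rtp") continue;
    if (s.kind === "audio") {
      summary.audioPacketsDiscarded += s.packetsDiscarded ?? 0;
    } else if (s.kind === "video") {
      summary.videoFramesReceived += s.framesReceived ?? 0;
      summary.videoFramesDecoded += s.framesDecoded ?? 0;
      summary.videoFramesDropped += s.framesDropped ?? 0;
      summary.videoFramesRendered += s.framesRendered ?? 0;
    }
  }
  return summary;
}
```

In a browser, the entries would typically come from something like `Array.from((await pc.getStats()).values())`, where `pc` is your own RTCPeerConnection.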

The diagram below is a screenshot taken in testRTC of a real session of a client. Here you can see a spike of 200 packetsDiscarded less than a minute into the call. We’ve recently added in testRTC insights that hunt for such cases (as well as for video frame drops), alerting about these scenarios so that the user doesn’t have to drill down and search for them too much – they now appear front and center to the user.
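Since packetsDiscarded is a cumulative counter, a spike like this shows up as a large jump between two consecutive samples. Here is a rough sketch of hunting for such jumps. This is not how testRTC does it; `CounterSample`, `CounterSpike`, `findCounterSpikes` and the threshold value are all assumptions of mine for the sake of the example.

```typescript
// One reading of a cumulative counter (e.g. packetsDiscarded) at a point in time.
interface CounterSample {
  timeMs: number;
  value: number;
}

interface CounterSpike {
  timeMs: number;    // time of the sample where the jump was observed
  increase: number;  // how much the counter grew since the previous sample
}

// Flags every sample whose increase over the previous sample reaches the threshold.
function findCounterSpikes(samples: CounterSample[], threshold: number): CounterSpike[] {
  const spikes: CounterSpike[] = [];
  let prev: CounterSample | undefined;
  for (const cur of samples) {
    if (prev !== undefined) {
      const increase = cur.value - prev.value;
      if (increase >= threshold) {
        spikes.push({ timeMs: cur.timeMs, increase });
      }
    }
    prev = cur;
  }
  return spikes;
}
```

What counts as a spike depends on your sampling interval and use case, so treat the threshold as something to tune rather than a recommended value.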

WebRTC = Real-Time. Timing is everything

WebRTC stands for Web Real Time Communication. The Real Time part of it is critical. It means that things need to happen in… real time… and if they don’t, then the opportunity has already passed. This leads to the eventuality that at times, media packets will need to be discarded simply because they aren’t useful anymore – the opportunity to use them has already passed.

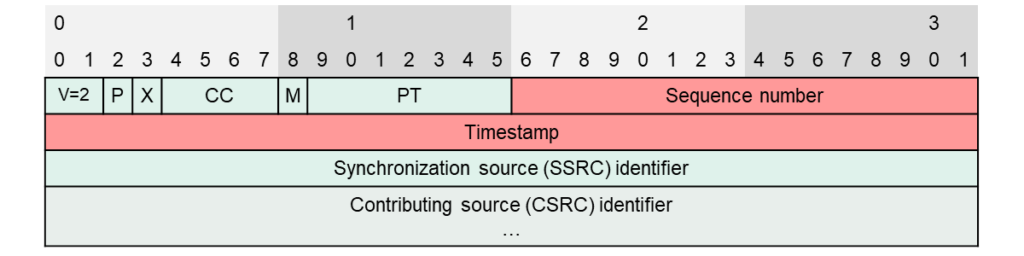

For all that logic to happen, WebRTC uses a protocol called RTP. This protocol is in charge of sending and receiving real time media packets over the network. For that to occur, each RTP packet has two critical fields in its header:

The illustration above is taken from our course Low level WebRTC protocols. In it, you can see these two fields:

- Sequence number

- Timestamp

The sequence number is just a running counter which can easily be used to order the packets on the receiving end based on the value of the counter. This takes care of any reordering, duplication and packet losses that can occur over modern networks.

The timestamp is used to understand when the media packet was originally generated. It is used when we need to play back this packet. Multiple packets can have the same timestamp, for example when the frame we want to send gets split across packets – something that occurs frequently with video frames.

These two, sequence number and timestamp, are used to deal with the various characteristics of the network. Usually, we deal with the following problems (I am not going to explain them here): jitter, latency, packet loss and reordering.
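Here is a small sketch of what "ordering by sequence number" can look like on the receiving end. The RTP sequence number is a 16-bit field, so it wraps around from 65535 back to 0, and the comparison has to account for that. The `RtpHeaderLike` type and the function names are mine, and the sketch assumes all packets fall within a window of fewer than 32768 sequence numbers.

```typescript
// Only the two header fields discussed here; a real RTP header has more.
interface RtpHeaderLike {
  sequenceNumber: number; // 16-bit counter, wraps at 65535
  timestamp: number;
}

// Signed distance from a to b in 16-bit sequence space; positive when b is newer than a.
function seqDistance(a: number, b: number): number {
  const diff = (b - a) & 0xffff;
  return diff < 0x8000 ? diff : diff - 0x10000;
}

// Removes duplicates and returns the packets in sending order.
function orderAndDedupe(packets: RtpHeaderLike[]): RtpHeaderLike[] {
  const seen = new Set<number>();
  const unique = packets.filter((p) => {
    if (seen.has(p.sequenceNumber)) return false;
    seen.add(p.sequenceNumber);
    return true;
  });
  return unique.sort((x, y) => seqDistance(y.sequenceNumber, x.sequenceNumber));
}

// Lists the sequence numbers missing between consecutive ordered packets.
function findSequenceGaps(ordered: RtpHeaderLike[]): number[] {
  const missing: number[] = [];
  let prev: RtpHeaderLike | undefined;
  for (const p of ordered) {
    if (prev !== undefined) {
      const gap = seqDistance(prev.sequenceNumber, p.sequenceNumber);
      for (let i = 1; i < gap; i++) {
        missing.push((prev.sequenceNumber + i) & 0xffff);
      }
    }
    prev = p;
  }
  return missing;
}
```

A missing sequence number at this point only means "not here yet". Whether it ends up as a loss or a late arrival is for the jitter buffer to decide.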

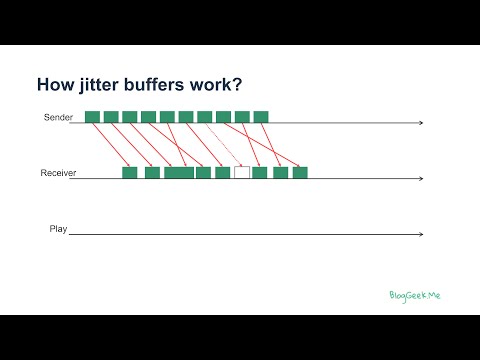

All of this goodness, and more is handled in WebRTC by what is called a jitter buffer. Here’s a short explainer of how a jitter buffer works:

WebRTC discarding incoming audio packets

The above video is our first WebRTC Q&A video. We started off with this because it popped up in discuss-webrtc. The question has since been deleted for some reason, but it was a good one.

Latency

The main reason for discarded audio packets is receiving them too late.

When audio packets are received by WebRTC, it pushes them into its jitter buffer. There, these packets get sorted in their sending order by looking at the sequence number of these packets. When to play them out is then dependent on the timestamp indicated in the packet.

Assuming we already played a newer packet to the user, we will be discarding packets that have a lower (and older) sequence number since their time has already passed.
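The logic above can be sketched as a toy jitter buffer. This is a deliberately simplified illustration, not the actual jitter buffer in WebRTC: it ignores timestamps and playout timing altogether and only shows the "too late" rule. `ToyAudioJitterBuffer` and `seqAhead` are names I invented for this.

```typescript
// Signed distance in 16-bit sequence space: positive when `candidate` is newer than `reference`.
function seqAhead(reference: number, candidate: number): number {
  const diff = (candidate - reference) & 0xffff;
  return diff < 0x8000 ? diff : diff - 0x10000;
}

class ToyAudioJitterBuffer {
  packetsDiscarded = 0;
  private lastPlayedSeq: number | undefined;
  private pending: number[] = []; // sequence numbers waiting for playout, oldest first

  // Returns false when the packet is discarded because a newer (or the same) packet was already played.
  insert(seq: number): boolean {
    if (this.lastPlayedSeq !== undefined && seqAhead(this.lastPlayedSeq, seq) <= 0) {
      this.packetsDiscarded++;
      return false;
    }
    this.pending.push(seq);
    this.pending.sort((a, b) => seqAhead(b, a));
    return true;
  }

  // Plays the oldest pending packet, if any, and remembers how far playout has advanced.
  playNext(): number | undefined {
    const seq = this.pending.shift();
    if (seq !== undefined) this.lastPlayedSeq = seq;
    return seq;
  }
}
```

Feed it packets 1, 2 and 4, play all three, and then let packet 3 arrive: it is rejected and counted, since its slot in the playout has already passed.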

Lipsync

Audio and video packets get played out together. This is due to a lip synchronization mechanism that WebRTC has, where it tries to match timestamps of audio and video streams to make sure there’s lip synchronization.

Here, if the video advanced too much, then you may need to drop some audio packets instead of playing them out in sync with the video (simply because you can’t sync the two anymore).

Bugs

Here’s another reason why audio packets might end up being discarded by the receiver – bugs in the sender’s implementation…

When the sender doesn’t use the correct timestamp in the packets, or does other “bad” things with the header fields of the RTP packets, you can get to a point when packets get discarded.

👉 Our focus here was on the timestamp because for some arcane reasons, figuring out the timestamp values and their progression in audio (and video) is never a simple task. Audio and video use different frequency clocks when calculating timestamps, done with values that make little sense to those who aren’t dealing with the innards and logic of audio and video encoders. This may easily lead to miscalculations and bugs in timestamp setting.
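To give a feel for the numbers involved, here is a small worked example. It assumes the commonly used RTP clock rates of 48000 Hz for Opus audio and 90000 Hz for video, 20 ms audio packets and a constant 30 frames per second. Those are my assumptions for the example, and real streams (variable frame rate video especially) won't advance this evenly. The function names are made up.

```typescript
// RTP timestamp units that elapse over a given duration for a given media clock.
function rtpTimestampStep(clockRateHz: number, durationMs: number): number {
  return Math.round((clockRateHz * durationMs) / 1000);
}

// Checks that consecutive timestamps advance by exactly `step`, modulo 2^32
// (the RTP timestamp is a 32-bit field and wraps around).
function hasSteadyTimestamps(timestamps: number[], step: number): boolean {
  let prev: number | undefined;
  for (const ts of timestamps) {
    if (prev !== undefined && ((ts - prev) >>> 0) !== step) return false;
    prev = ts;
  }
  return true;
}

// Assumed: Opus at 48 kHz with 20 ms packets, so each packet advances by 960.
const opusStep = rtpTimestampStep(48000, 20);

// Assumed: video at 90 kHz and a constant 30 fps, so each frame advances by 3000.
const videoStep = rtpTimestampStep(90000, 1000 / 30);
```

A sender that advances audio timestamps by 20 (milliseconds) instead of 960 (clock ticks) is exactly the kind of bug that ends up as discarded packets on the receiving end.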

WebRTC discarding outgoing audio packets

This doesn’t really happen. Or at least WebRTC ignores this option altogether.

How do we know that? Besides looking at the code, we can look at the fields that we have in getstats for this. While we have discarded frames for incoming and outgoing video and discarded incoming audio packets, we don’t have anything of this kind for outgoing audio packets.

These packets are too small and “insignificant” to cause any dropping of them on the sender side. That’s at least the logic…

WebRTC discarding incoming video frames

Before we go into the reasons, let’s understand how video packets are handled in the media processing pipeline of WebRTC. This is partial at best, and specifically focused on what I am trying to convey here:

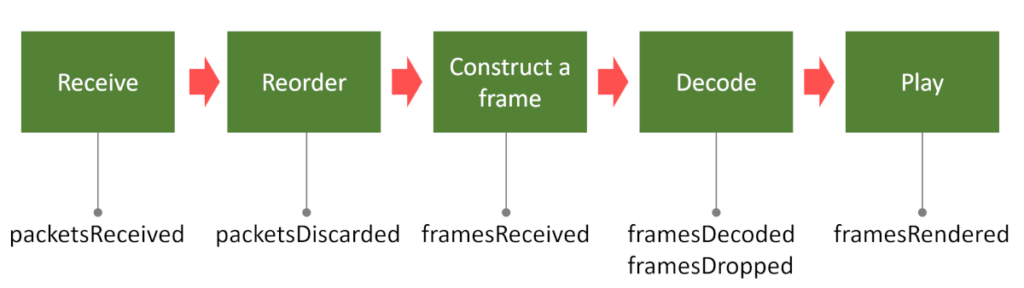

The above diagram shows the process that video packets go through once they are received, along with the metrics that get updated due to this processing:

- It starts with the video packets being Received from the network

- They then get Reordered as they get inserted into the jitter buffer. Here, the jitter buffer may discard packets. In the case of video packets though, don’t expect packetsDiscarded to be updated properly

- For video, we now construct frames, taking multiple packets and concatenating them into frames in Construct a frame. This also gives us the ability to count the framesReceived metric

- Once we have frames, WebRTC will go ahead and Decode them. Here, we end up counting framesDecoded and framesDropped

- Now that we have decoded frames, we can Play them back and indicate that in framesRendered

👉 The exact places where these metrics might be updated are a wee bit more nuanced. Consider the above just me flailing my hands in the air as an explanation.

This also hints that with video, there are multiple places where things can get dropped and discarded along the pipeline.
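One rough way to see where along the pipeline frames go missing is to compare the counters of adjacent stages. The subtractions below are my own simplification and not definitions from the stats specification, so read the results as hints rather than exact accounting. `VideoPipelineCounters` and `videoPipelineGaps` are invented names.

```typescript
// Counters taken from a single video inbound-rtp stats entry.
interface VideoPipelineCounters {
  framesReceived: number;
  framesDecoded: number;
  framesDropped: number;
  framesRendered?: number; // not always reported
}

interface VideoPipelineGaps {
  receivedNotDecoded: number;              // frames assembled but never decoded (so far)
  reportedDropped: number;                 // what the stats themselves report as dropped
  decodedNotRendered: number | undefined;  // undefined when framesRendered is unavailable
}

// Compares adjacent pipeline stages; clamps at 0 since counters are sampled at slightly different moments.
function videoPipelineGaps(c: VideoPipelineCounters): VideoPipelineGaps {
  return {
    receivedNotDecoded: Math.max(0, c.framesReceived - c.framesDecoded),
    reportedDropped: c.framesDropped,
    decodedNotRendered:
      c.framesRendered === undefined ? undefined : Math.max(0, c.framesDecoded - c.framesRendered),
  };
}
```

Frames that are still sitting in the jitter buffer or the decoder will show up in these gaps too, so small non-zero values are expected even in a healthy call.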

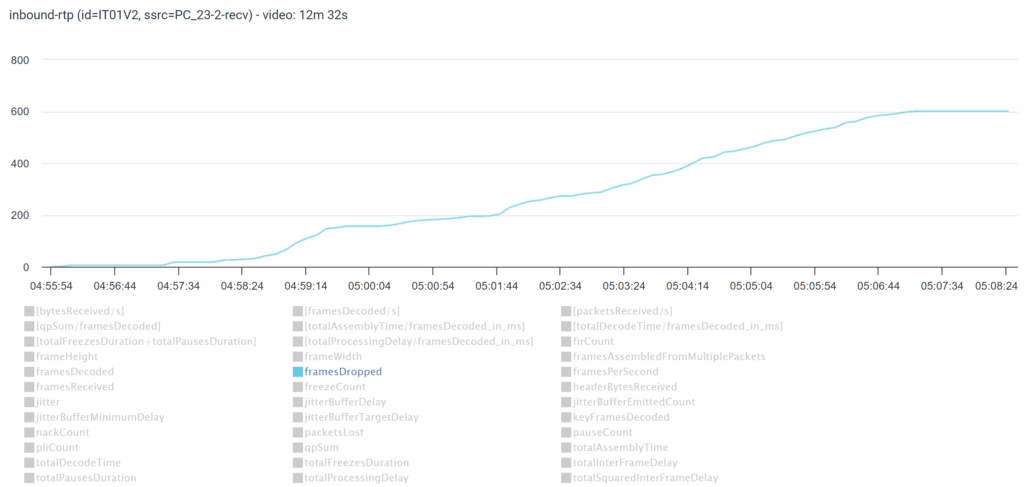

The above is another screenshot from testRTC. This time, indicating framesDropped. You can see how throughout the session, quite a few frames got dropped by WebRTC.

Let’s find the potential reasons for such dropped frames.

Latency, lip sync & bugs

Just like with incoming audio packets, we can get dropped packets and video frames for much the same reasons.

Latency and lip synchronization may cause the jitter buffer to discard video packets.

And bugs on the sender side can easily cause WebRTC to drop incoming packets here as well.

That said, with video, we have to look at a slightly bigger picture – that of a frame instead of that of a singular packet.

Not all packets of a frame are available

Assume a single packet is lost, and that packet is part of a frame that is sent over a series of 7 packets. That 1 lost packet causes a frame drop, which in turn makes the other 6 packets useless to us, since we can’t really decode them without the missing packet (we can to some extent, but we usually don’t these days).
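Here is that arithmetic as a small sketch. Packets are grouped into frames by their RTP timestamp, as described earlier. To keep it short, I assume the number of packets per frame is known up front via a `packetsPerFrame` map. That map is a shortcut of mine; a real receiver figures out frame boundaries from the packets themselves. All names here are invented.

```typescript
interface ReceivedVideoPacket {
  timestamp: number;      // shared by all packets of the same frame
  sequenceNumber: number;
}

interface FrameAssemblyResult {
  completeFrames: number;
  incompleteFrames: number;
  wastedPackets: number;  // packets that arrived but belong to an incomplete frame
}

// Groups packets by timestamp and checks each frame against its expected packet count.
function assessFrameCompleteness(
  received: ReceivedVideoPacket[],
  packetsPerFrame: Map<number, number>, // assumed known: timestamp -> expected packet count
): FrameAssemblyResult {
  const seenPerFrame = new Map<number, Set<number>>();
  for (const p of received) {
    let seqs = seenPerFrame.get(p.timestamp);
    if (seqs === undefined) {
      seqs = new Set<number>();
      seenPerFrame.set(p.timestamp, seqs);
    }
    seqs.add(p.sequenceNumber); // a Set, so duplicates are counted once
  }

  const result: FrameAssemblyResult = { completeFrames: 0, incompleteFrames: 0, wastedPackets: 0 };
  seenPerFrame.forEach((seqs, timestamp) => {
    const expected = packetsPerFrame.get(timestamp);
    if (expected !== undefined && seqs.size >= expected) {
      result.completeFrames++;
    } else {
      result.incompleteFrames++;
      result.wastedPackets += seqs.size;
    }
  });
  return result;
}
```

For a 7 packet frame with one packet missing, this reports 1 incomplete frame and 6 wasted packets.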

Dependency on older frames

With video, unless we’re decoding a keyframe, the frame we need to decode requires a previous frame to be decoded. There are dependencies here since for the most part, we only encode and compress the differences across frames and not the full frame (that would be a keyframe).

What happens then if a frame we need for decoding a fresh frame we just received isn’t available? Here, all packets were received for this new frame, but the frame (and all its packets) will still get dropped. This will be reported in framesDropped.
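The cascading effect is easier to see in code. In this sketch each non-keyframe depends on exactly one earlier frame, which is a simplification I am making here (real codecs and SVC setups can have richer dependency structures). `ReceivedFrame` and `resolveDecodableFrames` are made-up names.

```typescript
interface ReceivedFrame {
  id: number;
  isKeyframe: boolean;
  dependsOn?: number; // id of the frame this one was encoded against; unused for keyframes
}

// Walks the fully received frames in order and splits them into decodable and dropped.
function resolveDecodableFrames(frames: ReceivedFrame[]): { decoded: number[]; dropped: number[] } {
  const decodedIds = new Set<number>();
  const decoded: number[] = [];
  const dropped: number[] = [];
  for (const f of frames) {
    const canDecode =
      f.isKeyframe || (f.dependsOn !== undefined && decodedIds.has(f.dependsOn));
    if (canDecode) {
      decodedIds.add(f.id);
      decoded.push(f.id);
    } else {
      // All packets arrived, but the reference frame is not available.
      dropped.push(f.id);
    }
  }
  return { decoded, dropped };
}
```

If frame 3 never arrives, frames 4 and 5 get dropped as well even though they were fully received, and things only recover once the next keyframe shows up.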

Not enough CPU

We might not have enough CPU available to decode video. Video is CPU intensive, and if WebRTC understands that it won’t have time to decode the frame, it will simply drop it before decoding it.

It might also decode the frame but then, due to CPU issues, miss the time for playout, in which case framesRendered will not increment.

WebRTC discarding outgoing video frames

With outgoing media, there is a different dictionary we need to look at in getstats – RTCOutboundRtpStreamStats:

Here, the relevant fields are framesSent and framesEncoded. We should strive to have these two equal to each other.

We know that WebRTC decided to discard frames here if framesEncoded is higher than framesSent. If this happens, then it is bad on a few levels:

- Encoding video is a resource intensive process. If we took the effort to encode a frame and didn’t send it in the end, then we’ve wasted resources. To me this means something is awfully wrong with the implementation and it isn’t well balanced

- Video frames are usually dependent on one another. Dropping a frame may lead to future frames that the receiver will be unable to decode without the frame that was dropped

- Such failures are usually due to network or memory problems. These hint towards a deeper problem that is occurring with the device or with the way your application handles the resources available on the device

On the RTCIceCandidatePairStats dictionary, there’s also a packetsDiscardedOnSend metric, which hints at when and why we would lose and discard packets and frames on the sender side:

Total number of packets for this candidate pair that have been discarded due to socket errors, i.e. a socket error occurred when handing the packets to the socket. This might happen due to various reasons, including full buffer or no available memory.

If you’re dropping video frames on the sender side (framesEncoded > framesSent), then in all likelihood the network buffer on the device is full, causing a send failure. Here you should check the resources available on the device – especially memory and CPU – or just understand the network traffic you are dealing with.
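Putting the sender side metrics together, a check could look something like the sketch below. The field names come from RTCOutboundRtpStreamStats and RTCIceCandidatePairStats. The types, the function name and the `likelySocketPressure` heuristic are my own assumptions, so treat the result as a hint for where to dig and not as a diagnosis.

```typescript
// The two outbound-rtp (video) counters discussed above.
interface OutboundVideoLike {
  framesEncoded: number;
  framesSent: number;
}

// The one candidate-pair counter discussed above.
interface CandidatePairLike {
  packetsDiscardedOnSend?: number;
}

interface SenderDropAssessment {
  framesEncodedButNotSent: number;
  packetsDiscardedOnSend: number;
  likelySocketPressure: boolean; // hypothetical heuristic: both indicators are non-zero
}

// Compares encoder output against what was actually sent and folds in socket level discards.
function assessSenderDrops(outbound: OutboundVideoLike, pair?: CandidatePairLike): SenderDropAssessment {
  const framesEncodedButNotSent = Math.max(0, outbound.framesEncoded - outbound.framesSent);
  const packetsDiscardedOnSend = pair?.packetsDiscardedOnSend ?? 0;
  return {
    framesEncodedButNotSent,
    packetsDiscardedOnSend,
    likelySocketPressure: framesEncodedButNotSent > 0 && packetsDiscardedOnSend > 0,
  };
}
```

A small, momentary difference between framesEncoded and framesSent can simply be a frame that was encoded but not yet sent when the stats were sampled, so look at how the gap behaves over time.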

Maintaining media quality in WebRTC

Media quality in WebRTC is a lot more than just dealing with bitrates or deciding what to do about packet losses. There are many aspects affecting media quality and they all do it dynamically throughout the session and in parallel to each other. Some of these I covered when discussing fixing packet loss in WebRTC.

This time, we looked into why WebRTC discards media packets during calls. We’ve seen that there are many reasons for it.
