Parallax, or eye contact in video conferencing, is a problem that should be solved, and AI is probably how we will end up solving it.

I started working at a video conferencing company about 20 years ago. Since then a lot has changed:

- Resolutions and image quality have increased dramatically

- Systems migrated from on prem to the cloud

- Our focus changed from large room systems, to mobile, to desktop and now to huddle rooms

- We went from custom-designed hardware to running it all on commodity hardware

- And now we’re going after commodity software with the help of WebRTC

One thing hasn’t really changed in all that time.

I still see straight into your nose or straight at your forehead. I can never seem to look you in the eye. When I do, it is because I am gazing straight at my camera, which feels unnatural for me too.

The reason for this is known as the parallax problem in video conferencing. Parallax. What a great word.

If you believe Wikipedia, then “Parallax is a displacement or difference in the apparent position of an object viewed along two different lines of sight, and is measured by the angle or semi-angle of inclination between those two lines.”

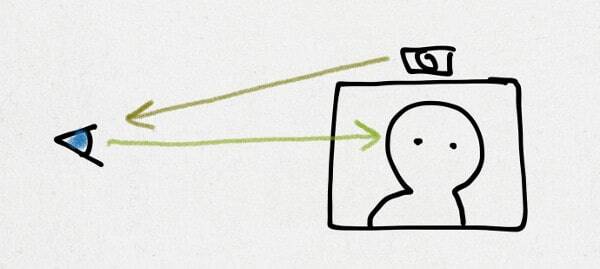

A mouthful. Let me illustrate the problem:

What happens here is that as I watch the eyes of the person on the screen, my camera is capturing me. But I am not looking at my camera. I am looking at an angle above or beyond it. And in a group call with a few people laid out like Hollywood Squares, who should I be looking at anyway?

So you end up with either my nose.

Or my forehead.
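To put a rough number on that angle, here is a minimal sketch. It assumes a simplified geometry in which the eyes on the screen sit directly below (or above) the camera and I face the screen straight on. The function name `gaze_offset_degrees` and the distances in `setups` are illustrative assumptions, not measurements from any real system.

```python
import math


def gaze_offset_degrees(camera_offset_cm: float, viewing_distance_cm: float) -> float:
    """Angle between my line of sight to the on-screen eyes and the line to the camera.

    camera_offset_cm: distance on the display plane between the camera lens
        and the eyes of the person I am looking at (assumed, simplified).
    viewing_distance_cm: how far I sit from the display.
    """
    return math.degrees(math.atan2(camera_offset_cm, viewing_distance_cm))


# Hypothetical setups: (camera-to-eyes offset, viewing distance), both in cm.
setups = {
    "laptop": (10.0, 50.0),
    "desktop monitor": (20.0, 70.0),
    "room system": (40.0, 300.0),
}

for name, (offset_cm, distance_cm) in setups.items():
    angle = gaze_offset_degrees(offset_cm, distance_cm)
    print(f"{name}: {angle:.1f} degrees off the camera axis")
```

With these made-up laptop numbers the gaze lands about 11 degrees away from the lens. The angle shrinks as I sit further from the display or as the camera moves closer to the eyes I am watching, and it only reaches zero when the camera sits exactly where those eyes are.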

What we really want/need is to have that camera right behind the eyes of the person we’re looking at on our display – be it a smartphone, laptop, desktop or room system.

Over the years, the notion was to “ignore” this problem as it is too hard to solve. The solution to it usually required the use of mirrors and an increase in the space the display needed.

Here’s an example from a failed Kickstarter project that wanted to solve this for tablets – the eTeleporter:

The result is usually cumbersome and expensive. Which is why it never caught on.

There are those who suggest tilting the monitor. This may work well for static devices in meeting rooms, but then again, who would do the work needed, and would the same angle work for every room size and setup?

When I worked at a video conferencing company years ago, we participated in a European research project that included 3D imaging, 3D displays, telepresence and a few high end cameras. The idea was to create a better telepresence experience that also got eye contact right. It never saw the light of day.

Today, multiple cameras and depth sensors just might work.

Let’s first take it to the extreme. Think of Intel True View. Pepper a stadium with enough cameras, and you can decide to synthetically re-create any scene from that football game.

Since we’re not going to have 20+ 5K cameras in our meeting rooms, we will need to make do with one. Or two. And some depth information. Gleaned via a sensor, dual camera contraption or just by using machine learning.

Which is where two recent advancements give a clue to where we’re headed:

- Apple Memoji (and earlier Bitmoji). iPhone X can 3D scan your face and recognize facial movements and expressions

- Facebook can now open eyes in selfie images with the help of AI

The idea? Analyze and “map” what the camera sees, and then tweak it a bit to fit the need. You won’t be getting the real, raw image, but what you’ll get will be eye contact.

Back to AI in RTC

In our interviews this past month we’ve been talking to many vendors who make use of machine learning and AI in their real time communication products. We’ve doubled down on computer vision in the last week or two, trying to understand where the technology is today – what’s in production and what’s coming in the next release or two.

Nothing I’ve seen was about eye contact, and computer vision in real time communication is still quite nascent, solving simpler problems. But you do see the steps taken towards that end game, just not from the video communication players yet.

Interested in AI and RTC? Check out our upcoming report and be sure to assist us with our web survey (there’s an ebook you’ll receive, and we will raffle five $100 Amazon gift cards).

> Over the years, the notion was to “ignore” this problem as it is too hard to solve.

At least, marketing people did not ignore the problem…

They worked around it, at least in the screenshots of video conferencing solutions: they put photos of beautiful people looking straight into your eyes 🙂

I suppose this is the usual Dev vs Marketing stuff 🙂

🙂

This problem has only gotten worse in the past 3 years, especially with smartphones! The problem is that they use the 'selfie' mode, which shows your own video. Unfortunately, most people will 'talk to the screen' and not to the lens!

My solution: turn the phone around and use it in regular mode.