For me, Kranky Geek 2018 was a tremendously fun experience.

We had our fourth Kranky Geek event in San Francisco last week. As usual, it was a nerve-wracking experience right up until the point it ended. And it doesn't start on the day of the event itself: we'd been busy for a few weeks curating content, handling presentation drafts and doing dry runs.

The result is quite satisfying. This time, we decided to dig even deeper into artificial intelligence and machine learning and their role in real-time communications. As I've been saying, WebRTC is ready, so what would be the point of doing an event only about WebRTC? We've already covered a lot of WebRTC topics in our past events, and they are all available on the Kranky Geek YouTube channel.

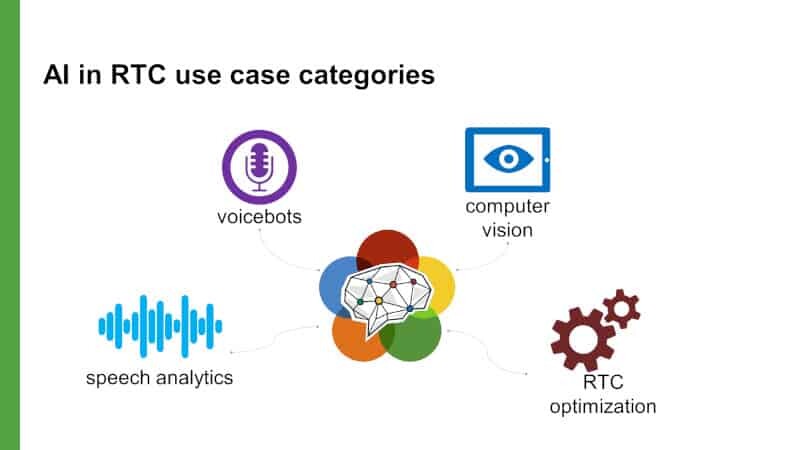

The way we see it, there are four domains we had to cover: speech analytics, voicebots, computer vision and RTC optimization.

So we went hunting for speakers for the event. In the end, we were able to cover all four domains and squeeze in a few WebRTC-specific topics as well.

The Sessions

This year, we had more sessions than ever. Over the years, the event has grown from a shorter one into a full-day event. The people I talked to said the day was long and tiring, but somehow almost everyone stayed to the end. Here's what we had this year:

Our own welcome

One thing to note here: our AI in RTC report is offered at a promotional discount of ~33%, available until the end of the month. If this space interests you, then definitely check it out.

Discord

Discord operates a large chat service for gamers. Part of that service includes voice and video calling. At peak, they handle 2.8 million concurrent voice connections.

What they shared was the changes they made to the vanilla WebRTC code base in order to fit their needs.

Facebook

Facebook were kind enough to give a presentation about Facebook Portal, their new home device that is capable of handling video calls (using WebRTC, of course). The device uses machine learning to track the people in the room during a call. They talked about the challenges that come with automating the camera's zoom and with connecting calls from Portal devices to mobile phones.

This was the first time they shared that information publicly at a conference.

Intel

Intel announced that they are open sourcing their media server, the Intel Collaboration Suite for WebRTC, under the name Open Media Streamer. They also shared information about svt-hevc, their open source HEVC encoder.

Voicebase

Voicebase talked about paralinguistics: the way we speak, as opposed to the words we are saying. They shared the path they took in charting that space, and in understanding what makes more or less sense in terms of value.

Voicera

Voicera discussed virtual assistants and how they need to understand transcriptions.

IBM

IBM explained the notion of voicebots and how they fit into contact centers. They explained the need to be able to hand off a call from a voicebot to a human agent.

Nexmo

Nexmo showed a pizza-ordering demo that connected Dialogflow to a voice service. It stressed the need to be able to connect communication services to various machine learning ones.

Dialpad

Dialpad explained how to take an open source speech-to-text engine and add custom words to it in order to improve transcription accuracy.

Callstats

Callstats clustered the sessions they collect, using that information to try to figure out the type of each call and the root cause of any issues it may have.

RingCentral

RingCentral normalized the MOS scores of audio calls across its network and devices, to be able to give a clear indication of call quality. It appears that while there's a standard specification for MOS, getting device manufacturers to follow it to the letter is rather challenging, so RingCentral is using machine learning to "fix" that issue.

Google

Google talked about the current status of, and the efforts in, getting Chrome's WebRTC implementation to comply with the 1.0 specification. They also shared the work being done to improve audio stability and performance in Chrome (lots of architecture changes in how devices are accessed, in order to reduce the number of threads used and get a stable delay model for the acoustic echo canceller). There was also a look at what comes after 1.0: WebRTC NV, and what role WebAssembly may play there (I'll write more about it in the future).

Agora

Agora showed how they use super resolution to improve video quality in calls, and what it means to run super resolution on a mobile device.

Houseparty

Houseparty also used machine learning to improve video quality, but took a different approach. They shared the work they are doing and the effort it takes to bring it to production.

Microsoft

Microsoft shared the work done on WebRTC for UWP and explained how AR/VR fits into the story, as well as the enterprise use cases they are seeing in the market.

Session Recordings

As always, all the sessions were recorded and are available online.

Kranky Geek in 2019

Every year we've done a Kranky Geek event, we went in thinking it would be the last one. Not sure why, but that was always the case. Then, about 9 months after the event, we'd start discussing the next one with Google.

We’ve changed that this time. We are going to do an event in 2019, and we have a name for it:

Kranky Geek SF 2019

We have a tentative date for the event: November 15, 2019

Put it in your calendar.

We don’t yet know what the theme for next year will be, but I have a hunch that it will include WebRTC and machine learning 🙂

If you want to speak – contact me

If you want to sponsor – contact me

If you have feedback on what we should improve – you know – contact me

Oh – and if you are interested in AI in WebRTC, check out our report – there’s a discount available for it until the end of the month.
