Here’s how you broadcast using WebRTC.

This is the second post dealing with multipoint:

- Introduction

- Broadcast (this post)

- Small groups

- Large groups


If you are developing a WebRTC service that requires broadcasting, then there are several aspects you need to consider. First off, don’t assume you will be able to broadcast directly from the browser – doing so isn’t healthy for several reasons.

Let’s start with an initial analysis of the various resources required for sending media over WebRTC:

- Camera acquisition, where the browser grabs raw data from the camera

- Video encoding, where the browser encodes the raw video data. This part is CPU intensive, especially now when there’s no hardware acceleration available for the VP8 video codec

- Sending the encoded packets over the network, where there are two resources being consumed:

  - Bandwidth, on the uplink, where it is usually scarce

  - For lack of a better term, network driver

The first two, camera acquisition and encoding, are done once, so they take the same amount of effort as in a P2P call.

The third one gets multiplied by the number of endpoints you wish to reach with your broadcast. As there is no real way to use multicast, what is used is multi-unicast – each receiving side gets its own media stream, and has its own open session with our broadcaster.

While home broadband can enable you to get multiple such sessions and “broadcast” them, it won’t scale up enough, and it definitely won’t work for mobile handsets: there it will eat up battery life as well as your data plan, assuming it works at all.
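To make the multi-unicast cost concrete, here is a minimal sketch of the arithmetic. The function names, the 1,000 kbps stream bitrate and the 5,000 kbps uplink are assumptions picked for illustration, not measurements.

```typescript
// Hypothetical helper: uplink needed when the broadcaster sends one
// unicast copy of the stream to every viewer (multi-unicast).
function requiredUplinkKbps(streamKbps: number, viewers: number): number {
  return streamKbps * viewers;
}

// Hypothetical helper: how many viewers a given uplink can carry.
function maxViewers(uplinkKbps: number, streamKbps: number): number {
  return Math.floor(uplinkKbps / streamKbps);
}

const assumedStreamKbps = 1000; // assumed video bitrate
const assumedUplinkKbps = 5000; // assumed home broadband uplink

console.log(requiredUplinkKbps(assumedStreamKbps, 20)); // 20000
console.log(maxViewers(assumedUplinkKbps, assumedStreamKbps)); // 5
```

Under these assumed numbers, 20 viewers would need 20 Mbps of uplink, while the connection tops out at 5 viewers, before counting any protocol overhead.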

The above are rather easy to solve – we haven’t even dealt with the differing capabilities of the receiving clients.

For this reason, when planning on broadcasting, you will need to stick with a server-side solution.

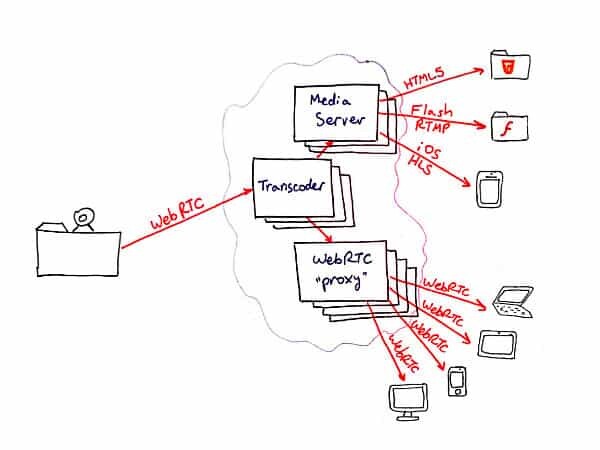

Here’s how a simplified architecture for broadcasting will look like (I’ve ignored signaling for the sake of simplicity, and because WebRTC ignores it):

The things to note:

- The client-side broadcaster sends out a single media stream to the server, which takes care of the heavy lifting here.

- The server side does the work of “multiplying” the media stream into one stream in front of each participant, and it will have an open, active session with each of them. This can either be simple, or nasty, depending on how smart you want to be about it:

  - Easiest way: the server acts as a proxy, not holding a real “session” in front of each client – just forwarding whatever it receives to the clients it serves. Easy to do, costs only bandwidth, but not the best quality achievable

  - You can decide to transcode and change resolution, frame rate, bitrate – whatever is needed in front of each receiver. Here it is also relatively easy to hold context in front of each receiver, providing the best quality

  - You can do the above to generate several bit streams that fit different devices (laptops, tablets, smartphones, etc), and then send out that data as a proxy

  - You can maintain sessions in front of each client, but not delve too deep into the packets – this gives a bit better quality, at a reasonable cost

- The server side is… more than a single server – in most cases. Either because it is easier to scale, or because it is better to keep the transcoders and the proxies separated in terms of the design itself.

- While broadcasting, you might want to consider supporting non-WebRTC clients – serving pure HTML5 video, Flash or iOS devices natively. This means another gateway function is required here.
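The simplest option in the list above, the server acting as a plain forwarding proxy, can be sketched as follows. `BroadcastRelay`, `Packet` and `SendFn` are hypothetical names made up for this illustration; a real media server would also handle RTP/RTCP, encryption and per-client network conditions, none of which is shown here.

```typescript
// A packet is treated as an opaque blob: the relay never looks inside it.
type Packet = Uint8Array;
// Hypothetical callback that delivers a packet to one viewer's connection.
type SendFn = (packet: Packet) => void;

class BroadcastRelay {
  private readonly viewers = new Map<string, SendFn>();

  addViewer(id: string, send: SendFn): void {
    this.viewers.set(id, send);
  }

  removeViewer(id: string): void {
    this.viewers.delete(id);
  }

  // Forward one packet from the broadcaster to every viewer.
  // Returns the number of copies sent, i.e. the bandwidth multiplier.
  forward(packet: Packet): number {
    let copies = 0;
    this.viewers.forEach((send) => {
      send(packet);
      copies++;
    });
    return copies;
  }
}

// Usage: two viewers, one incoming packet, two outgoing copies.
const relay = new BroadcastRelay();
const delivered: string[] = [];
relay.addViewer("alice", () => { delivered.push("alice"); });
relay.addViewer("bob", () => { delivered.push("bob"); });
const copiesSent = relay.forward(new Uint8Array([1, 2, 3]));
console.log(copiesSent, delivered); // 2 [ 'alice', 'bob' ]
```

The sketch shows why this mode costs only bandwidth: the server does no decoding or encoding, it just sends one copy per viewer.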

If you plan on broadcasting, make sure you know where you are headed with it – map your requirements against your business model to make sure it fits there – bandwidth and the computing power required for these tasks don’t come cheap.

Hi Tsahi – Interesting post and I agree with your approach. Couple of follow-up questions for you:

1. Are you aware of any platforms or platforms-as-a-service that are capable of performing all 3 of the functions in your diagram (media server, transcoding, and webrtc proxy) for all of the endpoint / codec combos implied in this diagram? I know there are options for WebRTC-WebRTC but as soon as you bring in the other types of flows in the upper right, it starts becoming more fun.

2. In your reference to signaling, when you say “…WebRTC ignores it”, I suppose this is technically accurate but would you agree that any sort of RTC app that leverages WebRTC technology has to have some form of signaling (SIP over Websockets, REST/Comet, etc.) in order to function properly in the mixed endpoint environment you show in your diagram?

Thanks,

Jim

Jim,

These are both great questions.

For the first one – I know of no such solution available today in PaaS mode. You can find bits and pieces of it, and my guess is that there’s some real business here. Haven’t done the ROI calculation for it, but seems like something that has real value in it.

For the second question, I’d refrain from placing any SIP signaling on top of such an infrastructure. I’d leave it as simple and generic as possible and try to use REST on top of it. This will make it suitable for anyone who wants to plug it into his own solution.

Hi Tsahi – Yeah, I tend to agree with you on the solution availability and do agree on the validity of the business opportunity.

Regarding SIP signaling vs. REST, it is my observation (from my position at Acme Packet) that many of the telcos that have SIP-based offerings today happen to like the idea of WebRTC signalled via SIP over WebSockets. This is because they use SIP in all of their backend call control / auth systems. So, I think there is demand for gateway solutions that take SIP over WebSockets from a WebRTC endpoint and interwork it to “standard” SIP that is compatible with an IMS core. Of course, it’s also important to provide signaling gateway functions for REST to “standard” SIP. I’m not taking the position that SIPoWS is better or worse than REST – it’s just that I think there is demand for both.

In closing, my “dream” solution (platform or PaaS) for the WebRTC opportunity is one that can not only address the media-related challenges described in your original post but the signaling-related ones as well.

Thanks,

Jim

Hi,

I tried to use WebRTC for one-to-many video conferencing, and it works fine over the LAN. The problem is that it does not work when I try to access it from the internet instead of the LAN. Please suggest a solution; a reply would be very helpful.

Thanks

Namdevreddy

I think the issue isn’t multipoint but rather NAT traversal support. Make sure the implementation you are trying out supports TURN. Also check in less restrictive environments, as some enterprises today block all UDP traffic, which is the most common way to deploy TURN today for WebRTC (some use TCP already, which makes things easier).
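As a rough illustration of the TURN advice above, this is what a peer connection configuration with both UDP and TCP TURN entries might look like. The host name `turn.example.com` and the credentials are placeholders, and the two interfaces are local stand-ins that mirror the shape of the browser's `RTCConfiguration` so the sketch also compiles outside a browser.

```typescript
// Local stand-ins mirroring the browser's RTCIceServer / RTCConfiguration shape.
interface IceServerSketch {
  urls: string | string[];
  username?: string;
  credential?: string;
}

interface PeerConfigSketch {
  iceServers: IceServerSketch[];
  iceTransportPolicy?: "all" | "relay";
}

// Placeholder host and credentials: replace with your own TURN deployment.
const peerConfig: PeerConfigSketch = {
  iceServers: [
    {
      urls: [
        "turn:turn.example.com:3478?transport=udp",
        "turn:turn.example.com:3478?transport=tcp", // fallback when UDP is blocked
      ],
      username: "demo-user",
      credential: "demo-password",
    },
  ],
  // "relay" forces all media through TURN, which helps verify that the
  // TURN server itself works; switch back to "all" for normal operation.
  iceTransportPolicy: "relay",
};

// In a browser this object would be passed as: new RTCPeerConnection(peerConfig)
console.log(JSON.stringify(peerConfig, null, 2));
```

If calls connect with the `"relay"` policy from outside the LAN, the TURN path is working and the remaining problem lies elsewhere.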

I have the same problem: it works great on the LAN, but over the internet only 10 machines can receive the broadcaster’s video, and with some delays. I have installed an open source TURN server on my server.

@ Mano

I agree WebRTC video works fine on LAN. Have you found a solution to transmit a live video feed to remote machines? Thank you

This is my dream architecture.

Licode does part of this but misses the “non-webrtc devices gateway” part.

Are you aware of any server that does it today?

OpenTok.

That would be one option for those who don’t want to implement it on their own 🙂

I see two general ways: the first with video mixing, the second without. The first way is more interesting for professional conferences where the number of participants is limited to a few people. This approach requires a lot of CPU resources because of the transcoding needed before mixing.

The second way might be used for a wide range of end users and might be more popular, as with Skype; however, such an approach will lose in quality for two reasons: 1) Too many streams. If you have a conference with N users, each user should receive N-1 streams. So the total bandwidth will be N(N-1) times the bitrate of a single stream.

For example, if you are streaming a 720p stream at 1.5 Mbps and have a 5-user conference, it will be 5(5-1)*1.5 = 30 Mbps of bandwidth.

Now compare with mixing mode: N*1.5 = 5*1.5 = 7.5Mbps

So, the resulting total BW difference is about 4 times!

2) NAT issues. Users have different NATs, firewalls, and network environments.
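The bandwidth comparison in point 1 can be checked with a short sketch. The function names are made up for this illustration, and the figures are the ones used in this comment (5 users, 1.5 Mbps per stream).

```typescript
// Total downstream bandwidth without mixing: each of the N users
// receives N-1 separate streams.
function unmixedTotalMbps(users: number, streamMbps: number): number {
  return users * (users - 1) * streamMbps;
}

// Total downstream bandwidth with mixing: each user receives one mixed stream.
function mixedTotalMbps(users: number, streamMbps: number): number {
  return users * streamMbps;
}

const conferenceUsers = 5;
const perStreamMbps = 1.5;

const unmixed = unmixedTotalMbps(conferenceUsers, perStreamMbps);
const mixed = mixedTotalMbps(conferenceUsers, perStreamMbps);

console.log(unmixed); // 30
console.log(mixed); // 7.5
console.log(unmixed / mixed); // 4
```

In general the ratio between the two modes is N-1, so the gap widens with every participant added.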

Regarding solutions:

I know MCU server for multipoint conferences and WebRTC and Broadcasting Server for one-to-many broadcasting: http://flashphoner.com/webrtc-online-broadcasting-from-ip-cameras-and-video-surveillance-systems/

The second solution does not use video mixing. About the first solution, I’m not sure.

this is unicasting not broadcasting, pls don’t confuse the terms

I don’t think I am confusing anything.

You are probably referring to unicast versus multicast. Effectively, on WAN there is no multicast, so broadcast is implemented via unicast – streaming the data directly to each and every participant instead of “carpet-bombing” the network with multicast traffic.